Ma Ge 41_04: Linux Cluster Series, Part 22: keepalived in Detail (very useful)

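keepalived:

HA: an important low-level tool for providing high availability (HA).

ipvs: keepalived was originally written to provide HA for ipvs. ipvs lives in the kernel and consists of rules; the rules only need to be in effect on whichever node is currently active. ipvs does not depend on any back-end storage: as long as the rules exist, it works. On that basis, keepalived gives ipvs the ability to move the director's IP address (the VIP) between nodes.

ipvs ---> moving the VIP between nodes relies on the VRRP protocol (Virtual Router Redundancy Protocol), which makes several physical devices appear as one virtual device. VRRP was originally designed to solve a gateway problem on local area networks (LANs).

VRRP itself has nothing to do with keepalived. It was designed for LANs: when a client needs a gateway to reach external networks, that gateway can be specified in one of two ways, dynamically or statically.

Dynamic:

With dynamic configuration, an internal client obtains a gateway address for reaching external hosts. This relies on a lot of extra technology, such as a server side and a client side that provide dynamic ARP functionality. When a client needs to reach an external network it only has to send an ARP request; an ARP server responds, and the client ends up with a gateway address obtained dynamically. The advantage is that several gateways can be offered: whichever of two gateways answers faster is the one that actually routes the client's outbound packets. This also provides redundancy (router redundancy, gateway redundancy), so users do not lose external connectivity when one router fails. But, as noted, it requires the client to install dedicated ARP software and do a fair amount of configuration, so it is cumbersome.

Hence a second approach, based on dynamic routing protocols such as RIP2 or OSPF. Here routing has to be enabled on the client, meaning the client must run a service that speaks RIP2 or OSPF, talks to the front-end gateways, and builds its routing table dynamically. This also requires a lot of extra and fairly complex configuration on the client.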

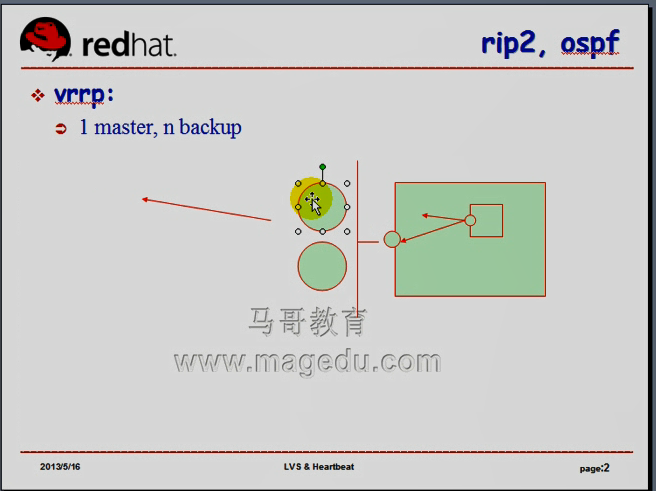

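So for the client the simplest way is still a statically configured default gateway. The drawback of a static gateway: if the gateway goes down, nothing works any more (see Figure 1 below).

Figure 1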

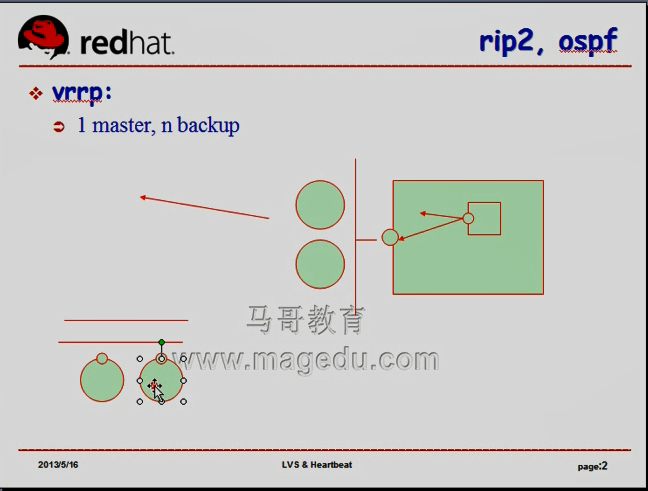

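So a mechanism is needed that gives the gateway itself redundancy, which is how VRRP came about. It virtualizes two physical interfaces into one interface (one virtual router) that serves the outside and exposes only one address (see Figure 2 below). The client just sets its static gateway to that address. Which physical device the address actually runs on is coordinated by VRRP: through an internal election or other configuration, the address runs on one particular node, and if that node fails it moves automatically to a backup node.

Figure 2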

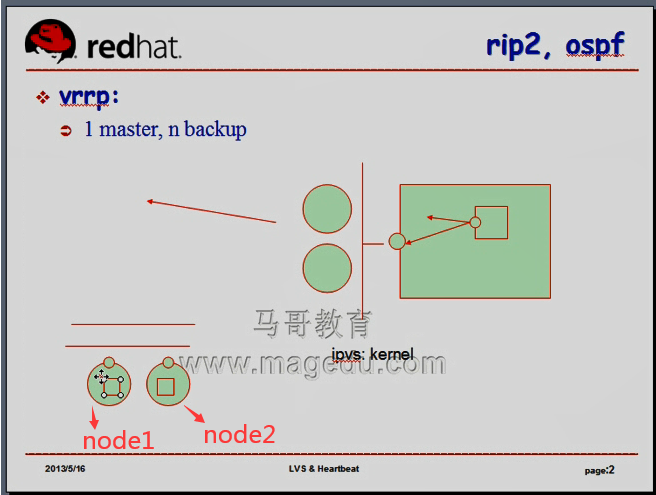

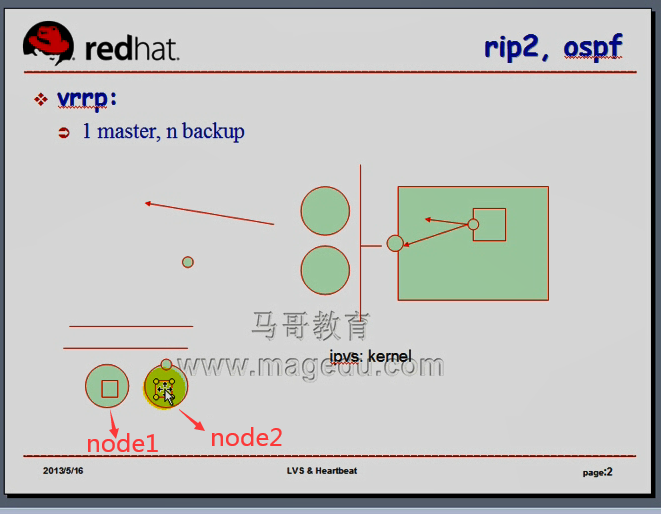

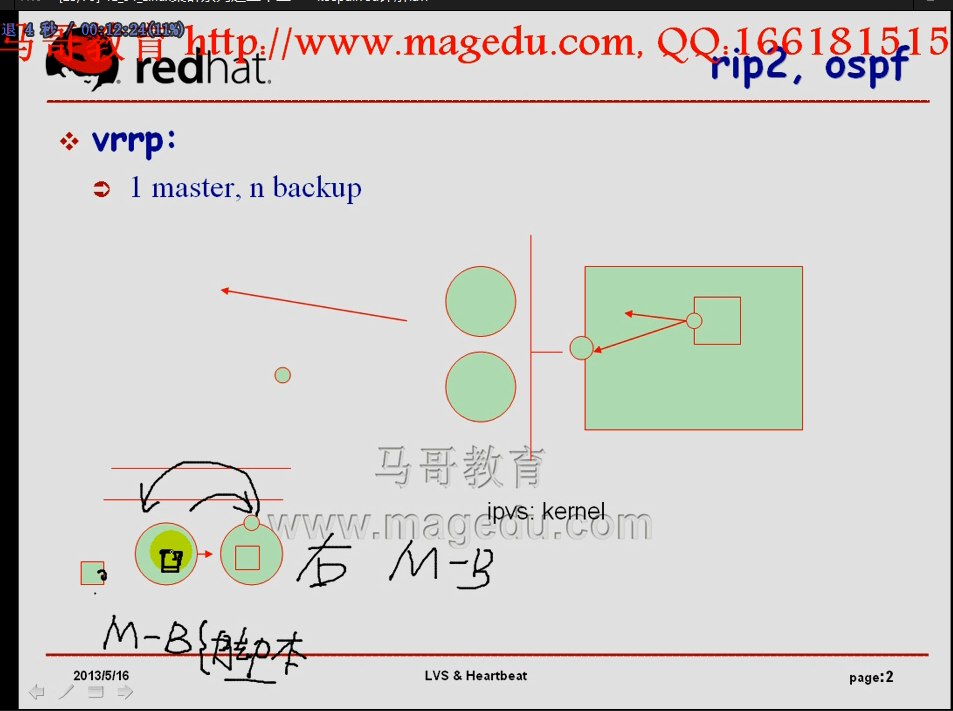

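The nodes can be arranged as one master and several backups (1 master, n backup; see Figure 3 below). The most common setup is still one master and one backup, since a backup sits idle the whole time.

VRRP also has other working modes, such as something like a multi-master model, but this multi-master is achieved by creating several virtual router groups on the same two physical devices (or the same group of physical devices).

Figure 3

So with this so-called multi-group setup (see Figure 4 below), several virtual devices are created on one group of physical devices at the same time. Because one network interface can carry n addresses, it is enough that eth0:0 on both hosts belongs to virtual router 1 and eth0:1 on both hosts belongs to virtual router 2.

Figure 4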

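keepalived is a service for Linux that implements the VRRP protocol. It lets users configure a virtual address (virtual router) on a Linux host's interface, uses VRRP to elect which node currently holds the VIP, and moves the VIP to another node when a node fails. Which node it moves to depends on priority.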
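The priority-based election described above can be illustrated with a small shell sketch. This is only a model for reasoning about who ends up holding the VIP, not keepalived's implementation; the function name `elect_master` and the `name:priority` input format are made up for this example, and ties are not modeled (real VRRP breaks them by comparing IP addresses).

```shell
#!/bin/bash
# Hypothetical helper: pick the node that would hold the VIP.
# Input: "name:priority" pairs; the highest priority wins.
# Ties are not modeled here.
elect_master() {
    local best_name="" best_prio=-1 pair name prio
    for pair in "$@"; do
        name=${pair%%:*}   # text before the first colon
        prio=${pair##*:}   # text after the last colon
        if [ "$prio" -gt "$best_prio" ]; then
            best_name=$name
            best_prio=$prio
        fi
    done
    echo "$best_name"
}

# With the priorities used later in these notes (101 and 100):
elect_master ha1:101 ha2:100
```

If ha1's priority later drops below 100, calling the function again with the new value yields ha2, which is the failover case.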

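In a real HA scenario, what keepalived has to move is not only the IP address. The IP is only there to expose a service. keepalived is not like a gateway, for which having the IP is enough; its main purpose is to make services highly available. So floating the IP alone is not enough: the service has to move too. As for ipvs, in its early days keepalived only provided this function for ipvs.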

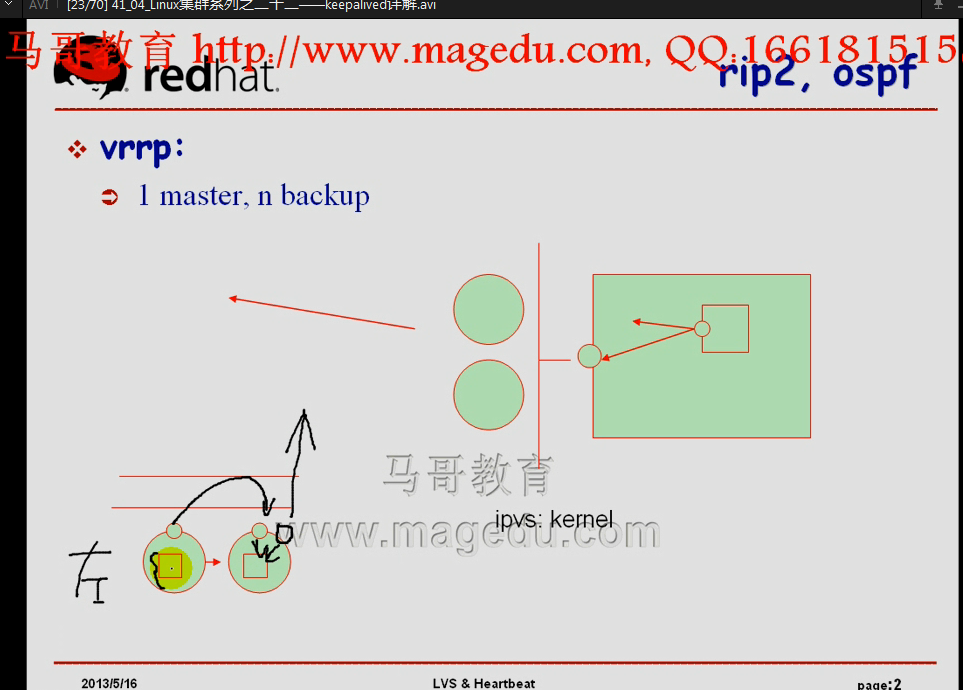

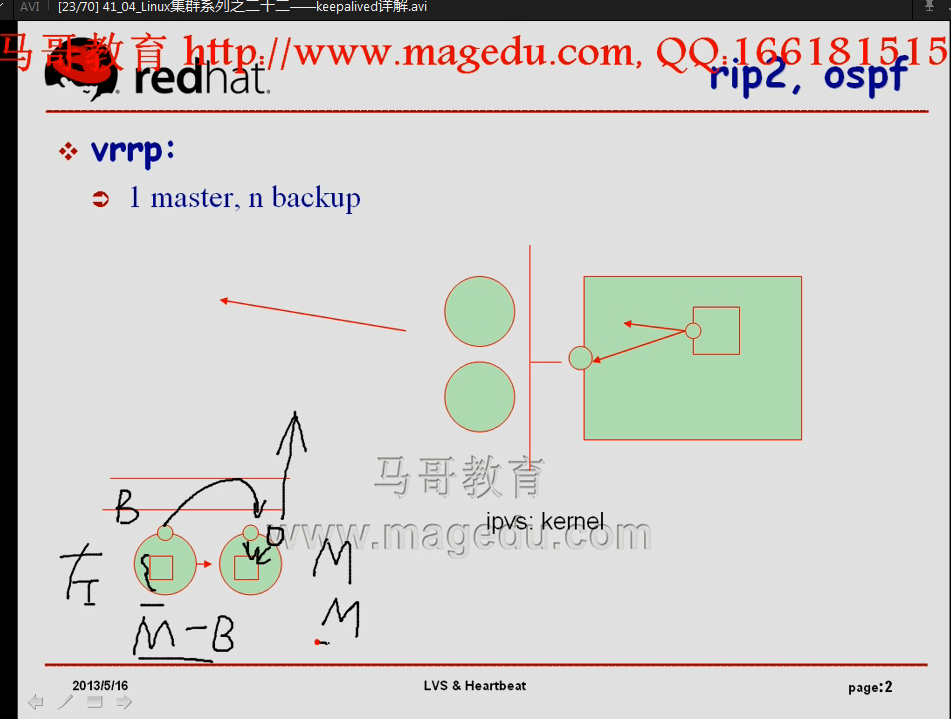

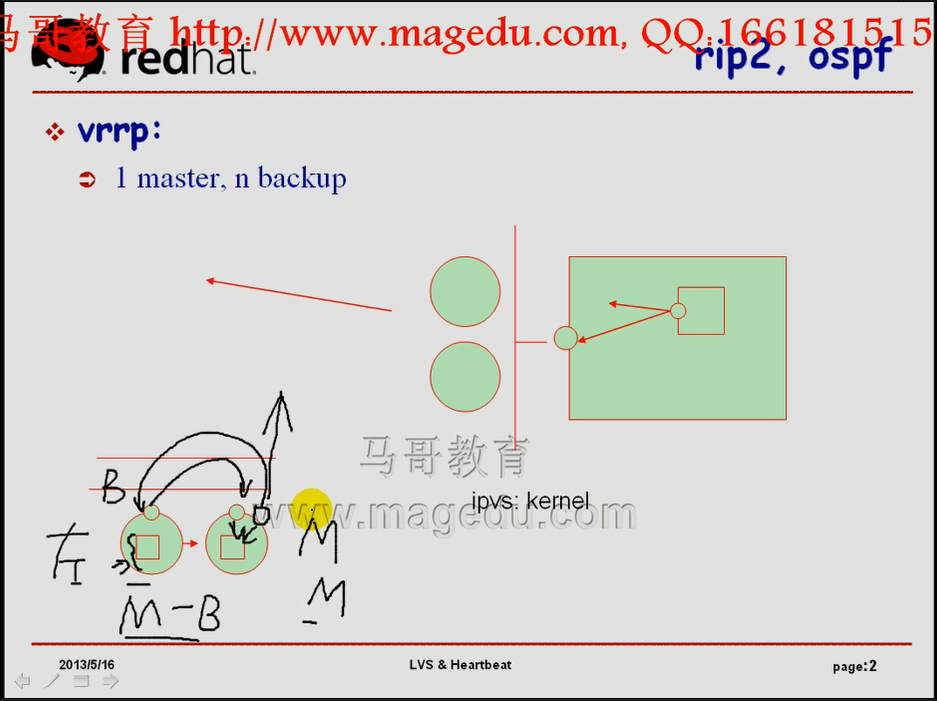

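Moving ipvs (see the figure below): ipvs is a kernel feature and does not itself need to move. Whichever node holds the VIP is the active node, and the other node is simply inactive. On the other node the rules are just not used; even if they are configured, they have no effect. So we can keep the rules loaded on both nodes at all times. But that is far from enough (see the figure below): if node 1 fails, the IP should float to node 2, and if the ipvs rules are not configured on node 2, the IP arriving there is useless. So when floating an IP address between nodes we also have to check the health of the other services this HA cluster is supposed to provide.

If the IP floats to the right-hand node (node2) while the ipvs rules there are not in effect, the address is useless: nothing gets forwarded and the node cannot do its job. keepalived therefore provides an extra feature: external scripts or commands can check the services or functions, other than the IP address, that the HA service depends on. Only if the check succeeds do we float the address. If it fails, the other side may not meet our requirements, so the address will most likely not float (only "most likely").

Suppose the left node is the active node. It depends on the service itself and also has the VIP configured. Having only the VIP is not enough: if the service fails, the node is not doing any useful work. So we need a script that monitors the service continuously, and as soon as the service fails we lower the current node's priority. VRRP works by having the master continuously send advertisements to the backups saying that it is still active, and those advertisements carry its priority. Once its priority drops below that of some backup, that backup compares, sees that its own priority is higher, and takes the address over.

Taking the address over is not enough either. The other node's priority dropped because its service was gone, so the node that takes over must have the service running. How do we guarantee that? We can check first, or, at the moment the VIP arrives, start the service if it is not running.

For the left node, the service stopped, the priority dropped, and the address floated away. If the right node (the original backup) later fails too and the address floats back to the left, will it work? No.

So there are several mechanisms to solve this problem.

One way (see the figure below): when the left node's service fails and the address has to float, the node changes from master to backup (M --> B). On that transition we can run a script that restarts (starts) the service, so there is no problem when the VIP later moves back. Alternatively, do not restart it: the next time the right node fails and goes M --> B, the VIP moves to the left node, and at that moment we start the service on the left node.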

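In a master/master model we create two virtual routers with two IPs (vip1, vip2), but the service is the same. Both nodes serve clients at the same time (see Figure 1 below), each using a different IP address. Since both nodes run the same service, if the left node fails its address floats to the right node, which then holds both addresses. Both addresses reach the same service, and clients still get responses.

Figure 1

After the address floats to the right node, what about the service on the left node: stop it or restart it? The left node is now backup for both routers and the right node is master for both. That works, but the load on the right node is high. So when the left node goes M --> B its service should be restarted, not stopped. Once the restart succeeds and priorities are compared, assuming the left node has the higher priority for virtual router 2 (vip2), vip2 floats back to node 1 and the master/master model is back to normal.

This process relies heavily on scripts.

The first script continuously monitors whether the service is healthy; if it is not, the IP must float away immediately.

As the left node's IP floats away because the monitoring script detected the failure, the left node changes from state M to state B in that virtual router. When it becomes B we cannot just leave the service down; it should be restarted.

That relies on a second script.

So we keep running a check (a check verifies that the services the current node depends on are healthy). If the check fails, the priority is lowered, and checking continues.

Once the check keeps failing, the state machine transitions and the address floats: when the IP leaves the left node, that node goes from M to B, and at that moment an additional script (a notify script) is run.

keepalived directives: notify_master, notify_backup, notify_fault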

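keepalived's three states

At startup (service keepalived start) on both nodes: if, for a given virtual router, the two are configured as the physical devices of the same virtual router group, neither knows at first who is master and who is backup. Both send advertisements to each other and compare priorities. During this phase both are in the initialization state. When initialization completes and it is known whose priority is higher, one becomes the master and the other the backup (the master/backup model). This is what is meant by state transition.

VRRP allows multiple virtual routers on the same group of physical devices, so they can be arranged as a master/master model.

In the master/master model the functionality to implement may be more complex, and so is the configuration.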

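keepalived was created to provide HA for ipvs. For that it needs no extra mechanism: keepalived's own configuration file is enough to do everything.

But keepalived can also be applied to other services, for example web services such as nginx.

Combinations that will be used a lot:

keepalived+nginx: HA for nginx

keepalived+haproxy: HA for haproxy

keepalived is more lightweight than corosync. If we do not need many nodes, the nodes do not need shared storage, and no more complex features are required, keepalived alone is enough.

keepalived moves addresses very quickly.

nginx and haproxy: nginx is a lightweight web server and also a lightweight web reverse proxy; haproxy is a very lightweight, high-performance web reverse proxy. As reverse proxies they usually need no shared storage, and they work in a way similar to ipvs.

keepalived+nginx and keepalived+haproxy are very common as highly available front ends in production.

After nginx has been covered, the course will provide a keepalived+nginx HA solution as well as a corosync+nginx one; it is just a matter of swapping the web server httpd for nginx or haproxy.

As long as you can configure nginx and haproxy, there is no problem.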

Configure keepalived to make ipvs highly available and to health-check the back-end real servers: remove a real server from the pool when it fails and add it back when it comes online again. If all real servers fail, use a fallback server (keepalived's sorry_server directive), a node that tells clients the service is under maintenance.

Two real servers

The VIP is 192.168.0.101

The two RSs are 192.168.0.46 and 192.168.0.56

On rs1 (192.168.0.46):

[root@rs1 ~]# cat /proc/sys/net/ipv4/conf/all/arp_ignore

0

[root@rs1 ~]# echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

[root@rs1 ~]# cat /proc/sys/net/ipv4/conf/all/arp_ignore # now set to 1

1

[root@rs1 ~]#

[root@rs1 ~]# echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

[root@rs1 ~]# cat /proc/sys/net/ipv4/conf/all/arp_announce # now set to 2

2

[root@rs1 ~]#

Ma Ge only verified the two settings under all/ (arp_ignore and arp_announce).

The other two can be set on either lo or eth0.

I verified that:

[root@rs1 ~]# echo 1 > /proc/sys/net/ipv4/conf/eth0/arp_ignore

[root@rs1 ~]# echo 2 > /proc/sys/net/ipv4/conf/eth0/arp_announce

[root@rs1 ~]# cat /proc/sys/net/ipv4/conf/eth0/arp_ignore

1

[root@rs1 ~]# cat /proc/sys/net/ipv4/conf/eth0/arp_announce

2

[root@rs1 ~]#
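Values written with echo into /proc/sys are lost on reboot. As a hedged sketch, the helper below (the name `rs_arp_sysctl` is made up for this example) prints the same four settings in sysctl.conf syntax, so they could be appended to /etc/sysctl.conf and loaded with `sysctl -p`. The interface name defaults to eth0 only because that is what these notes use.

```shell
#!/bin/bash
# Print sysctl settings equivalent to the echo commands above.
# $1: interface name (defaults to eth0, an assumption taken from these notes).
rs_arp_sysctl() {
    local iface=${1:-eth0} scope
    for scope in all "$iface"; do
        echo "net.ipv4.conf.$scope.arp_ignore = 1"
        echo "net.ipv4.conf.$scope.arp_announce = 2"
    done
}

rs_arp_sysctl eth0
```

On a real server this would typically be used as root, for example `rs_arp_sysctl eth0 >> /etc/sysctl.conf && sysctl -p`.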

[root@rs1 ~]# netstat -tnlp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 127.0.0.1:2208 0.0.0.0:* LISTEN 4106/./hpiod

tcp 0 0 0.0.0.0:2049 0.0.0.0:* LISTEN -

tcp 0 0 0.0.0.0:935 0.0.0.0:* LISTEN 3721/rpc.statd

tcp 0 0 0.0.0.0:55369 0.0.0.0:* LISTEN -

tcp 0 0 0.0.0.0:111 0.0.0.0:* LISTEN 3671/portmap

tcp 0 0 0.0.0.0:1014 0.0.0.0:* LISTEN 4226/rpc.rquotad

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 4129/sshd

tcp 0 0 127.0.0.1:631 0.0.0.0:* LISTEN 4143/cupsd

tcp 0 0 0.0.0.0:632 0.0.0.0:* LISTEN 4268/rpc.mountd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 4371/sendmail

tcp 0 0 0.0.0.0:3260 0.0.0.0:* LISTEN 4069/tgtd

tcp 0 0 127.0.0.1:2207 0.0.0.0:* LISTEN 4111/python

tcp 0 0 :::80 :::* LISTEN 5406/httpd # port 80: the web service is up

tcp 0 0 :::22 :::* LISTEN 4129/sshd

tcp 0 0 :::443 :::* LISTEN 5406/httpd

tcp 0 0 :::3260 :::* LISTEN 4069/tgtd

[root@rs1 ~]#

On rs2 (192.168.0.56):

[root@rs2 ~]# netstat -tnlp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 127.0.0.1:2208 0.0.0.0:* LISTEN 4112/./hpiod

tcp 0 0 0.0.0.0:2049 0.0.0.0:* LISTEN -

tcp 0 0 0.0.0.0:45126 0.0.0.0:* LISTEN -

tcp 0 0 0.0.0.0:941 0.0.0.0:* LISTEN 3727/rpc.statd

tcp 0 0 0.0.0.0:111 0.0.0.0:* LISTEN 3677/portmap

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 4135/sshd

tcp 0 0 127.0.0.1:631 0.0.0.0:* LISTEN 4149/cupsd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 4377/sendmail

tcp 0 0 0.0.0.0:1020 0.0.0.0:* LISTEN 4232/rpc.rquotad

tcp 0 0 0.0.0.0:3260 0.0.0.0:* LISTEN 4075/tgtd

tcp 0 0 0.0.0.0:637 0.0.0.0:* LISTEN 4274/rpc.mountd

tcp 0 0 127.0.0.1:2207 0.0.0.0:* LISTEN 4117/python

tcp 0 0 :::80 :::* LISTEN 5450/httpd # port 80: the web service is up

tcp 0 0 :::22 :::* LISTEN 4135/sshd

tcp 0 0 :::443 :::* LISTEN 5450/httpd

tcp 0 0 :::3260 :::* LISTEN 4075/tgtd

[root@rs2 ~]#

[root@rs2 ~]# echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

[root@rs2 ~]# echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

[root@rs2 ~]# cat /proc/sys/net/ipv4/conf/all/arp_ignore # OK

1

[root@rs2 ~]# cat /proc/sys/net/ipv4/conf/all/arp_announce # OK

2

[root@rs2 ~]#

As on rs1, Ma Ge only verified the two settings under all/; the per-interface ones can be set on either lo or eth0.

I verified that on rs2 as well:

[root@rs2 ~]# echo 1 > /proc/sys/net/ipv4/conf/eth0/arp_ignore

[root@rs2 ~]# echo 2 > /proc/sys/net/ipv4/conf/eth0/arp_announce

[root@rs2 ~]# cat /proc/sys/net/ipv4/conf/eth0/arp_ignore

1

[root@rs2 ~]# cat /proc/sys/net/ipv4/conf/eth0/arp_announce

2

[root@rs2 ~]#

On rs1 (192.168.0.46):

[root@rs1 ~]# ifconfig lo:0 192.168.0.101 broadcast 192.168.0.101 netmask 255.255.255.255 up

[root@rs1 ~]#

[root@rs1 ~]# ifconfig # the address is configured correctly

eth0 Link encap:Ethernet HWaddr 00:0C:29:82:E2:53

inet addr:192.168.0.46 Bcast:192.168.0.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe82:e253/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:5817 errors:0 dropped:0 overruns:0 frame:0

TX packets:1850 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:562702 (549.5 KiB) TX bytes:246558 (240.7 KiB)

Interrupt:67 Base address:0x2000

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:102 errors:0 dropped:0 overruns:0 frame:0

TX packets:102 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:11405 (11.1 KiB) TX bytes:11405 (11.1 KiB)

lo:0 Link encap:Local Loopback

inet addr:192.168.0.101 Mask:255.255.255.255

UP LOOPBACK RUNNING MTU:16436 Metric:1

[root@rs1 ~]# route add -host 192.168.0.101 dev lo:0

[root@rs1 ~]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

192.168.0.101 0.0.0.0 255.255.255.255 UH 0 0 0 lo

192.168.0.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0

169.254.0.0 0.0.0.0 255.255.0.0 U 0 0 0 eth0

0.0.0.0 192.168.0.1 0.0.0.0 UG 0 0 0 eth0

[root@rs1 ~]#

On rs2 (192.168.0.56):

[root@rs2 ~]# ifconfig lo:0 192.168.0.101 broadcast 192.168.0.101 netmask 255.255.255.255 up

[root@rs2 ~]# ifconfig # OK

eth0 Link encap:Ethernet HWaddr 00:0C:29:70:C0:67

inet addr:192.168.0.56 Bcast:192.168.0.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe70:c067/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:4474 errors:0 dropped:0 overruns:0 frame:0

TX packets:679 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:465726 (454.8 KiB) TX bytes:92834 (90.6 KiB)

Interrupt:67 Base address:0x2000

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:103 errors:0 dropped:0 overruns:0 frame:0

TX packets:103 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:11398 (11.1 KiB) TX bytes:11398 (11.1 KiB)

lo:0 Link encap:Local Loopback

inet addr:192.168.0.101 Mask:255.255.255.255

UP LOOPBACK RUNNING MTU:16436 Metric:1

[root@rs2 ~]#

[root@rs2 ~]# route add -host 192.168.0.101 dev lo:0

[root@rs2 ~]# route -n # OK

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

192.168.0.101 0.0.0.0 255.255.255.255 UH 0 0 0 lo

192.168.0.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0

169.254.0.0 0.0.0.0 255.255.0.0 U 0 0 0 eth0

0.0.0.0 192.168.0.1 0.0.0.0 UG 0 0 0 eth0

[root@rs2 ~]#

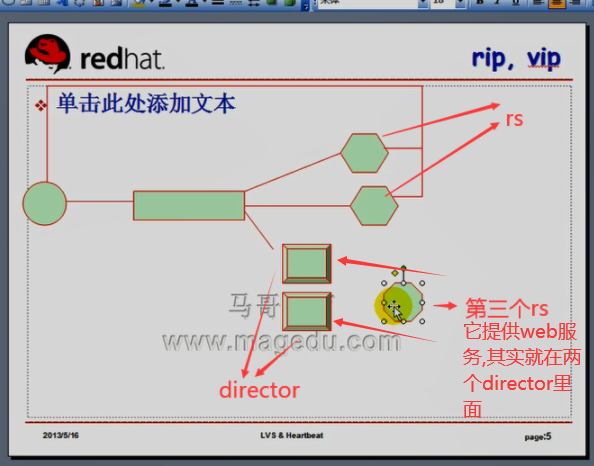

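As shown in the figure below, in the DR model the director and the RSs are on the same switch. Only inbound packets pass through the director; outbound packets go straight back to the client (via the front-end router). The two RSs must talk to the external network directly, so their gateway must not point to the director but to the front-end router. What if the RIP and the VIP are not on the same subnet? Packets leaving the RS then cannot be sent to the router interface the request came in through; they go to a different interface, most likely on another router (or another interface on the same router). (On the RS, inbound and outbound packets may well use the same NIC; one NIC can carry several addresses, and it is normal for those addresses to be on different subnets.)

Install keepalived on both directors so that the ipvs VIP is highly available and both RSs are monitored; whichever RS fails is removed. A third RS would be needed as a fallback: if both RSs go down, it joins the pool to answer client requests. Leaving a third RS idle is wasteful, so instead a local web service is configured on the directors (on both of them). That web service only has to serve an error page. The web service on the directors does not need to be highly available or monitored by keepalived; it just serves an error page directly to clients in that special situation.

The directors can also monitor each RS's health by talking from the DIP to the RIP.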

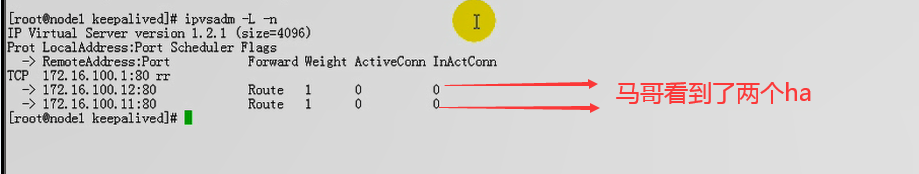

Two HA nodes

ha1 192.168.0.47

ha2 192.168.0.57

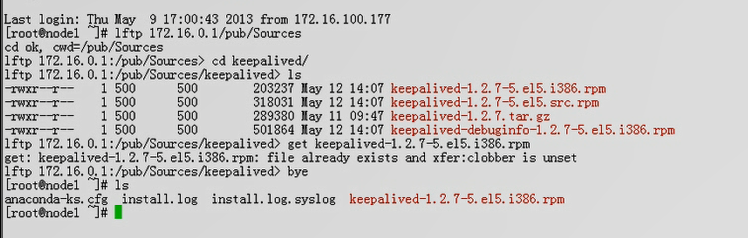

马哥自己亲自做的keepalived包

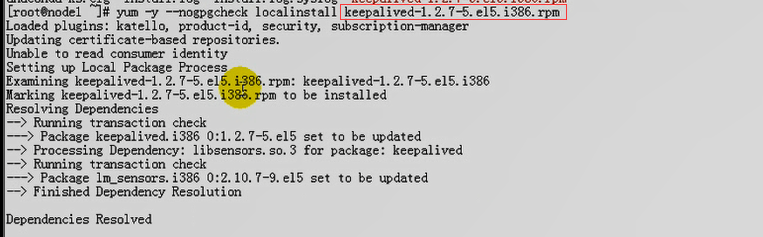

# 马哥 执行 # yum -y --nogpgcheck localinstall keepalived-1.2.7-5.el5.i386.rpm

# 马哥 执行 yum -y install ipvsadm

我在网上好不容易找到了 keepalived-1.1.20-1.2.i386.rpm ,存在了百度网盘里面了

在第一个ha1 192.168.0.47 上

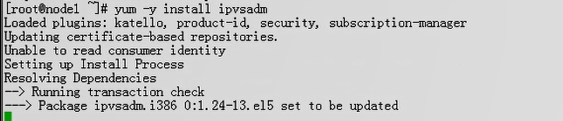

[root@rs3 ~]# yum -y --nogpgcheck install keepalived-1.1.20-1.2.i386.rpm

[root@ha1 ~]# scp keepalived-1.1.20-1.2.i386.rpm ha2:/root # copy to ha2 (SSH key trust via ssh-keygen and ssh-copy-id has to be set up as well)

keepalived-1.1.20-1.2.i386.rpm 100% 137KB 136.7KB/s 00:00

[root@ha1 ~]#

My package seems to depend on ipvsadm, so ipvsadm was installed along with it.

Remember to mount /dev/cdrom first: # mount /dev/cdrom /media/cdrom

On ha2 (192.168.0.57):

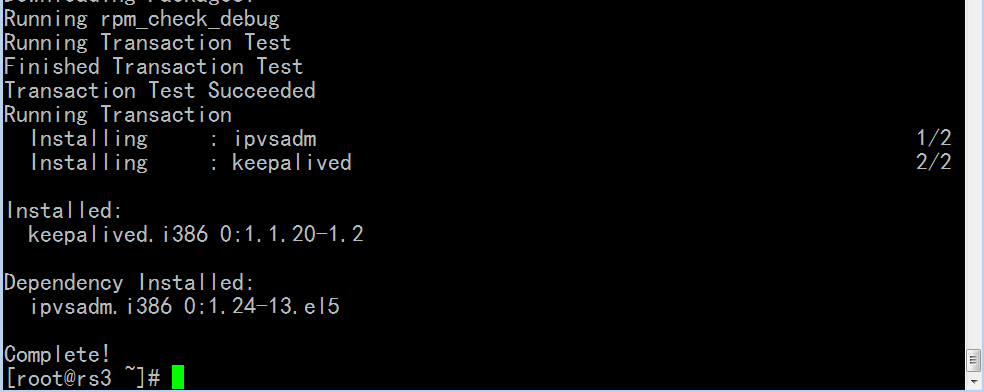

[root@ha2 ~]# yum -y --nogpgcheck install keepalived-1.1.20-1.2.i386.rpm # install keepalived; ipvsadm is pulled in as a dependency

Having ipvsadm installed makes it easy to watch how the service moves.

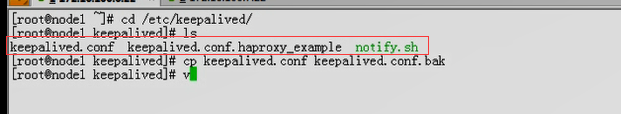

Ma Ge's configuration directory contains three files

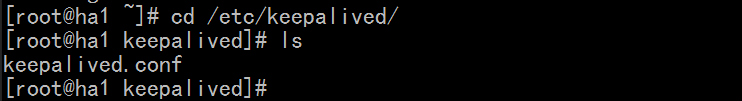

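I only have one file at this point. Perhaps the keepalived version differs, or Ma Ge added keepalived.conf.haproxy_example and notify.sh when he built his own package. I'll ignore this for now.

The ipvs rules do not actually need to be configured by hand; keepalived can configure them.

On ha1 (192.168.0.47):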

[root@ha1 keepalived]# cd /etc/keepalived/

[root@ha1 keepalived]# ls

keepalived.conf

[root@ha1 keepalived]# cp keepalived.conf keepalived.conf.bak

[root@ha1 keepalived]#

[root@ha1 keepalived]# vim keepalived.conf

! Configuration File for keepalived

global_defs {

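notification_email { # who receives an email notification when a failure occurs

}

notification_email_from keepalived@localhost # sender address; this local user does not exist, but for local delivery that does not matter because the sender is not verified

smtp_server 127.0.0.1 # SMTP server

smtp_connect_timeout 30 # connection timeout, in seconds

router_id LVS_DEVEL # a string identifying this router (here LVS_DEVEL)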

}

vrrp_instance VI_1 { # a vrrp_instance defines a virtual router. The two ends differ as follows: the initial state is MASTER on one end and BACKUP on the other; virtual_router_id must be the same on both; priority must differ, with the MASTER end slightly higher; when a service fails, the MASTER's priority is lowered to below the BACKUP's

state MASTER

interface eth0 # the physical interface over which advertisements are sent and on which the virtual router operates; since the VIP has to be configured on some interface, it must be defined here

virtual_router_id 51

priority 101

advert_int 1 # interval between advertisements, in seconds

authentication { # advertisements carry an authentication string; a receiver ignores advertisements whose string does not match

auth_type PASS # PASS means simple plain-text authentication

auth_pass keepalivedpass # the password (only the first 8 characters are used)

}

virtual_ipaddress {

192.168.0.101/24 dev eth0 label eth0:0 # the VIP. It is configured on the interface; without a label it is added to the interface the way ip addr does it. To see it as an alias, set a label such as eth0:0 or eth0:1. Keep both nodes consistent.

}

}

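# The VIP is configured above, but there is no monitoring yet. The ipvs rules themselves need no extra monitoring: when keepalived provides HA for ipvs, the rules below are generated automatically. However, when master and backup change roles the administrator should get an email, and that requires writing a script yourself.

virtual_server 192.168.0.101 80 { # port 80

delay_loop 6 # interval between health-check polls, in seconds

lb_algo rr # scheduling algorithm: round robin

lb_kind DR # LVS type: DR

nat_mask 255.255.255.0 # netmask

#persistence_timeout 50 # commented out: persistence is not needed, and without it we can verify that scheduling really is rr

protocol TCP # protocol: TCP

real_server 192.168.0.46 80 { # first RS

weight 1 # with the rr algorithm the weight has no effect

HTTP_GET {

url { # several urls can be checked at once

path / # fetch the home page

status_code 200 # expected HTTP status code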

}

#url {

#path /mrtg/

#digest 9b3a0c85a887a256d6939da88aabd8cd

#}

connect_timeout 2 # timeout, in seconds

nb_get_retry 3 # number of retries

delay_before_retry 1 # wait before retrying, in seconds

}

}

real_server 192.168.0.56 80 {

weight 1

HTTP_GET { # use HTTP_GET here, not SSL_GET

url {

path /

status_code 200

}

connect_timeout 2

nb_get_retry 3

delay_before_retry 1

}

}

}

[root@ha1 keepalived]# man keepalived.conf

............................................................................................................

virtual_ipaddress {

<IPADDR>/<MASK> brd <IPADDR> dev <STRING> scope <SCOPE> label <LABEL> # brd: broadcast address; dev: device; scope: scope; label: label (the alias name)

192.168.200.17/24 dev eth1

192.168.200.18/24 dev eth2 label eth2:1 # specifying the device and the alias is enough

}

............................................................................................................

[root@ha1 keepalived]# vim keepalived.conf.haproxy_example # I created keepalived.conf.haproxy_example myself; the content comes from Ma Ge's course material

! Configuration File for keepalived

global_defs {

notification_email {

}

notification_email_from kanotify@magedu.com

smtp_connect_timeout 3

smtp_server 127.0.0.1

router_id LVS_DEVEL

}

vrrp_script chk_haproxy {

script "killall -0 haproxy"

interval 1

weight 2

}

vrrp_script chk_mantaince_down {

script "[ -f /etc/keepalived/down ] && exit 1 || exit 0"

interval 1

weight 2

}

vrrp_instance VI_1 {

interface eth0

state MASTER # BACKUP for slave routers

priority 101 # 100 for BACKUP

virtual_router_id 51

garp_master_delay 1

authentication {

auth_type PASS

auth_pass password

}

track_interface {

eth0

}

virtual_ipaddress {

172.16.100.1/16 dev eth0 label eth0:0

}

track_script {

chk_haproxy

chk_mantaince_down

}

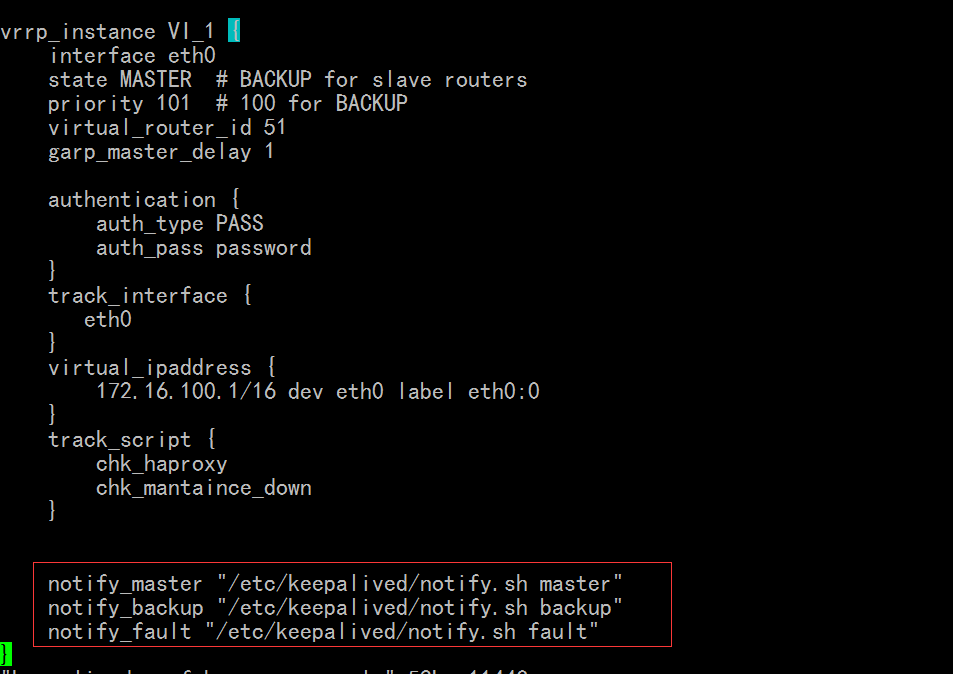

# When the state of a vrrp_instance changes, one of the notify hooks below runs "/etc/keepalived/notify.sh"; the script can send email or text the administrator through an SMS gateway

notify_master "/etc/keepalived/notify.sh master" # run when this node becomes master

notify_backup "/etc/keepalived/notify.sh backup" # run when this node becomes backup

notify_fault "/etc/keepalived/notify.sh fault" # run when this node enters the fault state (cannot start, or cannot become master or backup)

}
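The two vrrp_script blocks above both use weight 2, while the priorities are 101 (master) and 100 (backup). A worked example helps show why a single failed check is enough to trigger failover. The sketch below models the rule as I understand keepalived's documented behavior: a positive weight is added while the script succeeds, a negative weight is subtracted while it fails. The function name `effective_priority` is made up for this illustration.

```shell
#!/bin/bash
# effective_priority BASE WEIGHT RC [WEIGHT RC ...]
# Models how tracked scripts adjust a node's priority (assumed rule):
#   weight > 0: added while the script succeeds (RC = 0)
#   weight < 0: subtracted while the script fails (RC != 0)
effective_priority() {
    local prio=$1 weight rc
    shift
    while [ "$#" -ge 2 ]; do
        weight=$1
        rc=$2
        shift 2
        if [ "$weight" -gt 0 ] && [ "$rc" -eq 0 ]; then
            prio=$((prio + weight))
        elif [ "$weight" -lt 0 ] && [ "$rc" -ne 0 ]; then
            prio=$((prio + weight))
        fi
    done
    echo "$prio"
}

# Master (base 101): chk_haproxy fails, chk_mantaince_down passes
effective_priority 101 2 1 2 0
# Backup (base 100): both checks pass
effective_priority 100 2 0 2 0
```

Under these assumptions the master drops to 103 while the backup sits at 104, so the backup wins the next comparison. Creating /etc/keepalived/down on the master has the same effect, which is a convenient way to force a manual switchover.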

[root@ha1 keepalived]# pwd

/etc/keepalived

[root@ha1 keepalived]#

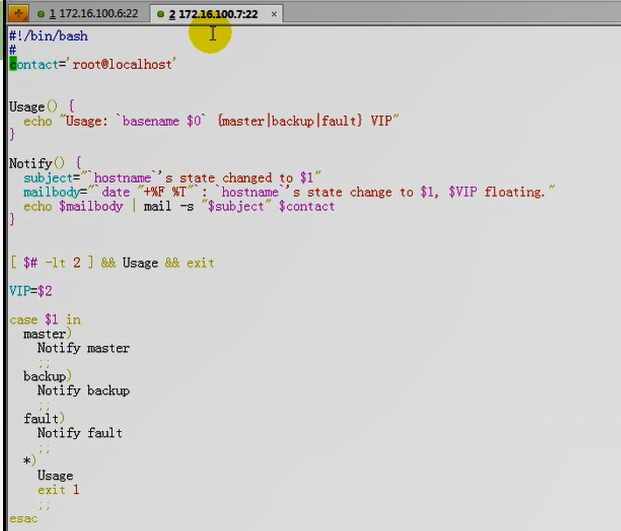

[root@ha1 keepalived]# vim notify.sh # I created notify.sh myself from a screenshot of Ma Ge's video. It may not suit the ipvs case because it restarts haproxy, so it is not generic. With a few small changes it could be made generic: pass one option for the service to start and another for whether to send mail.

#!/bin/bash

# Author: MageEdu <linuxedu@foxmail.com>

# description: An example of notify script

# $2 is the interface alias carrying the VIP (defaults to eth0:0)

ifalias=${2:-eth0:0}

interface=$(echo "$ifalias" | awk -F: '{print $1}')

vip=$(ip addr show "$interface" | grep "$ifalias" | awk '{print $2}')

#contact='linuxedu@foxmail.com'

contact='root@localhost'

workspace=$(dirname "$0")

notify() {

subject="$vip change to $1"

body="$vip change to $1 $(date '+%F %H:%M:%S')"

echo "$body" | mail -s "$1 transition" "$contact"

}

case "$1" in

master)

notify master

exit 0

;;

backup)

notify backup

/etc/rc.d/init.d/haproxy restart

exit 0

;;

fault)

notify fault

exit 0

;;

*)

echo "Usage: $(basename "$0") {master|backup|fault}"

exit 1

;;

esac

[root@ha1 keepalived]# vim notify-2.sh # I created notify-2.sh myself, copied from Ma Ge's course material

#!/bin/bash

# Author: MageEdu <linuxedu@foxmail.com>

# description: An example of notify script

#

vip=172.16.100.1

contact='root@localhost'

Notify() {

mailsubject="`hostname` to be $1: $vip floating"

mailbody="`date '+%F %H:%M:%S'`: vrrp transition, `hostname` changed to be $1"

echo $mailbody | mail -s "$mailsubject" $contact

}

case "$1" in

master)

Notify master

/etc/rc.d/init.d/haproxy start

exit 0

;;

backup)

Notify backup

/etc/rc.d/init.d/haproxy restart

exit 0

;;

fault)

Notify fault

exit 0

;;

*)

echo "Usage: `basename $0` {master|backup|fault}"

exit 1

;;

esac

[root@ha1 keepalived]# export

[root@ha1 keepalived]# man keepalived.conf

....................................................................................................

# one entry for each realserver

real_server <IPADDR> <PORT>

{

# relative weight to use, default: 1

weight <INT>

# Set weight to 0

# when healthchecker detects failure

inhibit_on_failure

# Script to launch when healthchecker

# considers service as up.

notify_up <STRING>|<QUOTED-STRING>

# Script to launch when healthchecker

# considers service as down.

notify_down <STRING>|<QUOTED-STRING>

# pick one healthchecker # the supported health-check methods are listed below

# HTTP_GET|SSL_GET|TCP_CHECK|SMTP_CHECK|MISC_CHECK

# HTTP_GET checks a service that speaks HTTP; ours is HTTP, so this is the one to use

# SSL_GET checks a service that speaks HTTPS

# LVS can load-balance not only web services but also MySQL, or anything over TCP or UDP. For MySQL, neither HTTP_GET nor SSL_GET can be used, so use TCP_CHECK. For mail, SMTP_CHECK works (for SMTP only; POP3 still needs TCP_CHECK). There is also MISC_CHECK, which Ma Ge did not cover.

# HTTP and SSL healthcheckers # each check type is configured differently

HTTP_GET|SSL_GET

{

# A url to test

# can have multiple entries here

url { # check based on a url

#eg path / , or path /mrtg2/

path <STRING> # URL path of the test page to fetch

# healthcheck needs status_code

# or status_code and digest

# Digest computed with genhash

# eg digest 9b3a0c85a887a256d6939da88aabd8cd

digest <STRING> # expected digest (hash) of the fetched page content, computed with genhash

# status code returned in the HTTP header

# eg status_code 200

status_code <INT> # the status code we expect in the response; if the page exists and the service is healthy this should be 200

}

#IP, tcp port for service on realserver

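connect_port <PORT> # port to connect to; HTTP defaults to 80, and if the service listens on 8080 this must be set to 8080

bindto <IPADDR> # optional; as far as I can tell this is the local (source) address the check connection is made from, not an alternative address on the real server. If omitted, the check simply connects to the real_server address (RIP) above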

# Timeout connection, sec

connect_timeout <INT>

# number of get retry

nb_get_retry <INT> # maximum number of retries after an error

# delay before retry

delay_before_retry <INT> # seconds to wait before each retry

} #HTTP_GET|

#TCP healthchecker (bind to IP port)

TCP_CHECK # TCP_CHECK supports only three parameters

{

connect_port <PORT> # 3306 for MySQL, 110 for POP3

bindto <IPADDR> # optional; can be omitted, in which case the check simply connects to the real server's RIP

connect_timeout <INT> # how long to wait at most; the check cannot wait forever

} #TCP_CHECK

....................................................................................................
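To get a feel for what a TCP_CHECK does, the following sketch performs a comparable test by hand: it tries to open a TCP connection to a host and port and gives up after a timeout. This is only an approximation for manual troubleshooting, not how keepalived implements its checker. The function name `tcp_check` is made up; it relies on bash's /dev/tcp redirection and the coreutils `timeout` command being available.

```shell
#!/bin/bash
# tcp_check HOST PORT [TIMEOUT_SECONDS]
# Returns 0 if a TCP connection can be opened within the timeout,
# non-zero otherwise (refused, unreachable, or timed out).
tcp_check() {
    local host=$1 port=$2 wait=${3:-2}
    # The child bash exits non-zero if the redirection (the connect) fails.
    timeout "$wait" bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null
}
```

For example, `tcp_check 192.168.0.46 80 2 && echo up || echo down` run from a director would mirror the connect_port and connect_timeout parameters described above.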

On ha1 (192.168.0.47):

[root@ha1 keepalived]# scp keepalived.conf ha2:/etc/keepalived/ # copy keepalived.conf to ha2

keepalived.conf 100% 1165 1.1KB/s 00:00

[root@ha1 keepalived]#

On ha2 (192.168.0.57):

[root@ha2 ~]# cd /etc/keepalived/

[root@ha2 keepalived]# vim keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

}

notification_email_from keepalived@localhost

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_instance VI_1 {

state BACKUP # changed from MASTER to BACKUP

interface eth0

virtual_router_id 51

priority 100 # changed from 101 to 100

advert_int 1

authentication {

auth_type PASS

auth_pass keepalivedpass

}

virtual_ipaddress {

192.168.0.101/24 dev eth0 label eth0:0

}

}

virtual_server 192.168.0.101 80 {

delay_loop 6

lb_algo rr

lb_kind DR

nat_mask 255.255.255.0

# persistence_timeout 50

protocol TCP

real_server 192.168.0.46 80 {

weight 1

SSL_GET {

url {

path /

status_code 200

}

connect_timeout 2

nb_get_retry 3

delay_before_retry 1

}

}

real_server 192.168.0.56 80 {

weight 1

SSL_GET {

url {

path /

status_code 200

}

connect_timeout 2

nb_get_retry 3

delay_before_retry 1

}

}

}

On ha2 (192.168.0.57):

[root@ha2 keepalived]# service keepalived status

keepalived 已停

[root@ha2 keepalived]# service keepalived start # start the keepalived service

启动 keepalived: [确定]

[root@ha2 keepalived]#

On ha1 (192.168.0.47):

[root@ha1 keepalived]# service keepalived status

keepalived is stopped

[root@ha1 keepalived]# service keepalived start # start the keepalived service

Starting keepalived: [ OK ]

[root@ha1 keepalived]#

After keepalived starts, the two HA nodes may need a few seconds to negotiate.

On ha1 (192.168.0.47):

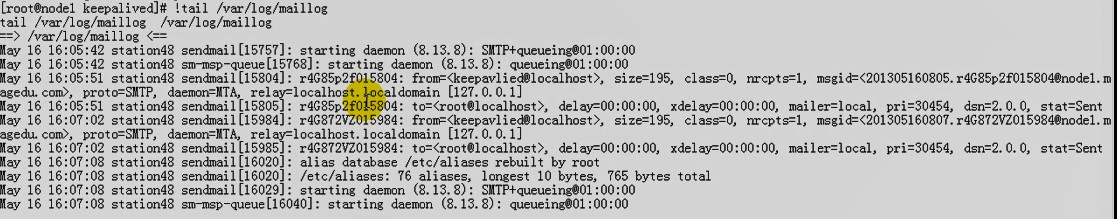

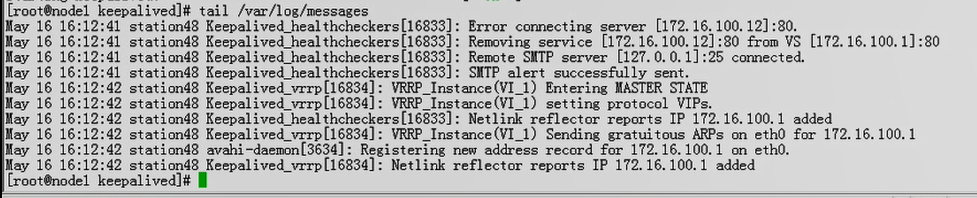

[root@ha1 ~]# tail /var/log/messages # check the log; the two HA nodes may need a few seconds to negotiate after keepalived starts, so run this a few times

Feb 28 15:38:18 ha1 Keepalived_healthcheckers: SMTP alert successfully sent.

Feb 28 15:38:19 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Entering MASTER STATE

Feb 28 15:38:19 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) setting protocol VIPs.

Feb 28 15:38:19 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.0.101

Feb 28 15:38:19 ha1 Keepalived_healthcheckers: Netlink reflector reports IP 192.168.0.101 added

Feb 28 15:38:19 ha1 avahi-daemon[4281]: Registering new address record for 192.168.0.101 on eth0.

Feb 28 15:38:19 ha1 Keepalived_vrrp: Netlink reflector reports IP 192.168.0.101 added

Feb 28 15:38:24 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.0.101

Feb 28 15:40:42 ha1 ntpd[3940]: synchronized to LOCAL(0), stratum 8

Feb 28 15:40:42 ha1 ntpd[3940]: kernel time sync enabled 0001

Ma Ge ran it a few more times and then it looked normal (probably a timing matter; the daemons need some time to run).

[root@ha1 ~]#

[root@ha1 keepalived]# ifconfig # 192.168.0.101 is not shown

eth0 Link encap:Ethernet HWaddr 00:0C:29:68:EE:69

inet addr:192.168.0.47 Bcast:192.168.0.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe68:ee69/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:12538 errors:0 dropped:0 overruns:0 frame:0

TX packets:14237 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:1023270 (999.2 KiB) TX bytes:912253 (890.8 KiB)

Interrupt:67 Base address:0x2000

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:8834 errors:0 dropped:0 overruns:0 frame:0

TX packets:8834 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:446824 (436.3 KiB) TX bytes:446824 (436.3 KiB)

[root@ha1 keepalived]#

[root@ha1 keepalived]# ip addr show # 192.168.0.101 is shown

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast qlen 1000

link/ether 00:0c:29:68:ee:69 brd ff:ff:ff:ff:ff:ff

inet 192.168.0.47/24 brd 192.168.0.255 scope global eth0

inet 192.168.0.101/24 scope global secondary eth0

inet6 fe80::20c:29ff:fe68:ee69/64 scope link

valid_lft forever preferred_lft forever

3: sit0: <NOARP> mtu 1480 qdisc noop

link/sit 0.0.0.0 brd 0.0.0.0

[root@ha1 keepalived]#

In Ma Ge's session, ifconfig did show 172.16.100.1.

[root@ha1 keepalived]# ipvsadm -L -n # I do not see the two ha entries

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.0.101:80 rr

[root@ha1 keepalived]#

Why did Ma Ge see two ha entries here, each with port 80?

[root@ha1 keepalived]# tail -20 /var/log/messages

Feb 28 16:56:52 ha1 Keepalived_vrrp: Configuration is using : 36324 Bytes

Feb 28 16:56:52 ha1 Keepalived_vrrp: Using LinkWatch kernel netlink reflector...

Feb 28 16:56:52 ha1 Keepalived_vrrp: VRRP sockpool: [ifindex(2), proto(112), fd(10,11)]

Feb 28 16:56:53 ha1 Keepalived_healthcheckers: Error connecting server [192.168.0.47:80].

Feb 28 16:56:53 ha1 Keepalived_healthcheckers: Removing service [192.168.0.47:80] from VS [192.168.0.101:80]

Feb 28 16:56:53 ha1 Keepalived_healthcheckers: Remote SMTP server [127.0.0.1:25] connected.

Feb 28 16:56:53 ha1 Keepalived_healthcheckers: Error connecting server [192.168.0.57:80]. # is port 80 not running here? (note: 192.168.0.57 is ha2, not one of the two RSs)

Feb 28 16:56:53 ha1 Keepalived_healthcheckers: Removing service [192.168.0.57:80] from VS [192.168.0.101:80]

Feb 28 16:56:53 ha1 Keepalived_healthcheckers: Lost quorum 1-0=1 > 0 for VS [192.168.0.101:80]

Feb 28 16:56:53 ha1 Keepalived_healthcheckers: Remote SMTP server [127.0.0.1:25] connected.

Feb 28 16:56:53 ha1 Keepalived_healthcheckers: SMTP alert successfully sent.

Feb 28 16:56:53 ha1 Keepalived_healthcheckers: SMTP alert successfully sent.

Feb 28 16:56:53 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Transition to MASTER STATE

Feb 28 16:56:54 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Entering MASTER STATE

Feb 28 16:56:54 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) setting protocol VIPs.

Feb 28 16:56:54 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.0.101

Feb 28 16:56:54 ha1 Keepalived_healthcheckers: Netlink reflector reports IP 192.168.0.101 added

Feb 28 16:56:54 ha1 avahi-daemon[4281]: Registering new address record for 192.168.0.101 on eth0.

Feb 28 16:56:54 ha1 Keepalived_vrrp: Netlink reflector reports IP 192.168.0.101 added

Feb 28 16:56:59 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.0.101

[root@ha1 keepalived]#

On ha1 (192.168.0.47):

[root@ha1 keepalived]# service httpd status

httpd 已停

[root@ha1 keepalived]# chkconfig httpd on

[root@ha1 keepalived]# chkconfig --list httpd

httpd 0:关闭 1:关闭 2:启用 3:启用 4:启用 5:启用 6:关闭

[root@ha1 keepalived]# service httpd start

启动 httpd: [确定]

[root@ha1 keepalived]#

On ha2 (192.168.0.57):

[root@ha2 ~]# service httpd status

httpd 已停

You have new mail in /var/spool/mail/root

[root@ha2 ~]# chkconfig httpd on

[root@ha2 ~]# chkconfig --list httpd

httpd 0:关闭 1:关闭 2:启用 3:启用 4:启用 5:启用 6:关闭

[root@ha2 ~]#

[root@ha2 ~]# service httpd start

启动 httpd: [确定]

[root@ha2 ~]#

[root@ha1 keepalived]# ipvsadm -L -n # still cannot see the two ha entries

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.0.101:80 rr

[root@ha1 keepalived]#

On ha1 (192.168.0.47):

[root@ha1 keepalived]# service keepalived restart # restart keepalived again

停止 keepalived: [确定]

启动 keepalived: [确定]

[root@ha1 keepalived]#

On ha2 (192.168.0.57):

[root@ha2 ~]# service keepalived restart # restart keepalived again

停止 keepalived: [确定]

启动 keepalived: [确定]

[root@ha2 ~]#

[root@ha1 keepalived]# tail -20 /var/log/messages # check the log again

Feb 28 17:06:17 ha1 Keepalived_vrrp: Using LinkWatch kernel netlink reflector...

Feb 28 17:06:17 ha1 Keepalived_vrrp: VRRP sockpool: [ifindex(2), proto(112), fd(10,11)]

Feb 28 17:06:18 ha1 Keepalived_healthcheckers: SSL handshake/communication error connecting to server (openssl errno: 1) [192.168.0.47:80]. # why an SSL error here? Are we using SSL? (the checker in the config is SSL_GET, but port 80 serves plain HTTP)

Feb 28 17:06:18 ha1 Keepalived_healthcheckers: Removing service [192.168.0.47:80] from VS [192.168.0.101:80]

Feb 28 17:06:18 ha1 Keepalived_healthcheckers: Remote SMTP server [127.0.0.1:25] connected.

Feb 28 17:06:18 ha1 Keepalived_healthcheckers: SSL handshake/communication error connecting to server (openssl errno: 1) [192.168.0.57:80].

Feb 28 17:06:18 ha1 Keepalived_healthcheckers: Removing service [192.168.0.57:80] from VS [192.168.0.101:80]

Feb 28 17:06:18 ha1 Keepalived_healthcheckers: Lost quorum 1-0=1 > 0 for VS [192.168.0.101:80]

Feb 28 17:06:18 ha1 Keepalived_healthcheckers: Remote SMTP server [127.0.0.1:25] connected.

Feb 28 17:06:18 ha1 Keepalived_healthcheckers: SMTP alert successfully sent.

Feb 28 17:06:18 ha1 Keepalived_healthcheckers: SMTP alert successfully sent.

Feb 28 17:06:18 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Transition to MASTER STATE

Feb 28 17:06:19 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Entering MASTER STATE

Feb 28 17:06:19 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) setting protocol VIPs.

Feb 28 17:06:19 ha1 Keepalived_healthcheckers: Netlink reflector reports IP 192.168.0.101 added

Feb 28 17:06:19 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.0.101

Feb 28 17:06:19 ha1 avahi-daemon[4281]: Registering new address record for 192.168.0.101 on eth0.

Feb 28 17:06:19 ha1 Keepalived_vrrp: Netlink reflector reports IP 192.168.0.101 added

Feb 28 17:06:24 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.0.101

Feb 28 17:08:04 ha1 ntpd[3940]: synchronized to 193.182.111.12, stratum 2

[root@ha1 keepalived]#
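When the ipvsadm output is empty, the quickest clue is which real servers the health checker removed. As a small sketch, the filter below pulls those pairs out of syslog lines in the format shown above; the function name `removed_services` is made up for this example, and the pattern assumes exactly the "Removing service [...] from VS [...]" wording seen in these logs.

```shell
#!/bin/bash
# removed_services: read syslog lines on stdin and print one
# "real_server virtual_server" pair per removal reported by the health checker.
removed_services() {
    sed -n 's/.*Keepalived_healthcheckers: Removing service \[\([^]]*\)\] from VS \[\([^]]*\)\].*/\1 \2/p'
}

# Demo with two lines copied from the log above; only the first one matches.
removed_services <<'EOF'
Feb 28 17:06:18 ha1 Keepalived_healthcheckers: Removing service [192.168.0.47:80] from VS [192.168.0.101:80]
Feb 28 17:06:18 ha1 Keepalived_healthcheckers: Remote SMTP server [127.0.0.1:25] connected.
EOF
```

On a director it could be run as `removed_services < /var/log/messages`. In the logs above it would list 192.168.0.47:80 and 192.168.0.57:80, which are the addresses of ha1 and ha2 rather than the real servers 192.168.0.46 and 192.168.0.56.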

...........................................................................

real_server 192.168.0.46 80 {

weight 1

HTTP_GET { # changed SSL_GET to HTTP_GET

url {

path /

status_code 200

}

connect_timeout 2

nb_get_retry 3

delay_before_retry 1

}

}

real_server 192.168.0.56 80 {

weight 1

HTTP_GET { # changed SSL_GET to HTTP_GET

url {

path /

status_code 200

}

connect_timeout 2

nb_get_retry 3

delay_before_retry 1

}

}

...........................................................................

[root@ha1 keepalived]# scp keepalived.conf ha2:/etc/keepalived/ # copy it to ha2 (192.168.0.57) again

keepalived.conf 100% 1167 1.1KB/s 00:00

[root@ha1 keepalived]#

On ha1 (192.168.0.47):

[root@ha1 keepalived]# service keepalived restart

停止 keepalived: [确定]

启动 keepalived: [确定]

[root@ha1 keepalived]#

On ha2 (192.168.0.57):

[root@ha2 ~]# service keepalived restart

停止 keepalived: [确定]

启动 keepalived: [确定]

[root@ha2 ~]#

[root@ha1 keepalived]# ipvsadm -L -n #还是看不到两个 ha

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.0.101:80 rr

[root@ha1 keepalived]#

[root@ha1 keepalived]# tail -n 30 /var/log/messages

Feb 28 17:25:52 ha1 Keepalived_vrrp: Registering Kernel netlink command channel

Feb 28 17:25:52 ha1 Keepalived_vrrp: Registering gratutious ARP shared channel

Feb 28 17:25:52 ha1 Keepalived_vrrp: Opening file '/etc/keepalived/keepalived.conf'.

Feb 28 17:25:52 ha1 Keepalived_vrrp: Configuration is using : 36328 Bytes

Feb 28 17:25:52 ha1 Keepalived_vrrp: Using LinkWatch kernel netlink reflector...

Feb 28 17:25:52 ha1 Keepalived_vrrp: VRRP sockpool: [ifindex(2), proto(112), fd(10,11)]

Feb 28 17:25:53 ha1 Keepalived_healthcheckers: HTTP status code error to [192.168.0.47:80] url(/), status_code [403]. # 403 Forbidden instead of the expected 200 (no index.html exists yet)

Feb 28 17:25:53 ha1 Keepalived_healthcheckers: Removing service [192.168.0.47:80] from VS [192.168.0.101:80]

Feb 28 17:25:53 ha1 Keepalived_healthcheckers: Remote SMTP server [127.0.0.1:25] connected.

Feb 28 17:25:53 ha1 Keepalived_healthcheckers: HTTP status code error to [192.168.0.57:80] url(/), status_code [403].

Feb 28 17:25:53 ha1 Keepalived_healthcheckers: Removing service [192.168.0.57:80] from VS [192.168.0.101:80]

Feb 28 17:25:53 ha1 Keepalived_healthcheckers: Lost quorum 1-0=1 > 0 for VS [192.168.0.101:80]

Feb 28 17:25:53 ha1 Keepalived_healthcheckers: Remote SMTP server [127.0.0.1:25] connected.

Feb 28 17:25:53 ha1 Keepalived_healthcheckers: SMTP alert successfully sent.

Feb 28 17:25:53 ha1 Keepalived_healthcheckers: SMTP alert successfully sent.

Feb 28 17:25:53 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Transition to MASTER STATE

Feb 28 17:25:54 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Entering MASTER STATE

Feb 28 17:25:54 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) setting protocol VIPs.

Feb 28 17:25:54 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.0.101

Feb 28 17:25:54 ha1 Keepalived_vrrp: Netlink reflector reports IP 192.168.0.101 added

Feb 28 17:25:54 ha1 Keepalived_healthcheckers: Netlink reflector reports IP 192.168.0.101 added

Feb 28 17:25:54 ha1 avahi-daemon[4281]: Registering new address record for 192.168.0.101 on eth0.

Feb 28 17:25:59 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.0.101

Feb 28 17:26:05 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Received higher prio advert

Feb 28 17:26:05 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Entering BACKUP STATE

Feb 28 17:26:05 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) removing protocol VIPs.

Feb 28 17:26:05 ha1 Keepalived_vrrp: Netlink reflector reports IP 192.168.0.101 removed

Feb 28 17:26:05 ha1 Keepalived_healthcheckers: Netlink reflector reports IP 192.168.0.101 removed

Feb 28 17:26:05 ha1 avahi-daemon[4281]: Withdrawing address record for 192.168.0.101 on eth0.

Feb 28 17:27:01 ha1 ntpd[3940]: synchronized to 193.182.111.12, stratum 2

[root@ha1 keepalived]#
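The 403 in the log above can be reproduced by hand. The sketch below is not keepalived's own checker; it assumes `curl` is installed, and `probe_status` and `status_ok` are hypothetical helper names. It fetches the status code with the same path (`/`) and connect timeout (2 seconds) as the HTTP_GET block and compares it with the expected `status_code 200`.

```shell
#!/bin/sh
# Hypothetical helpers, not part of keepalived.

# Print the HTTP status code returned by http://HOST:PORT/ (needs curl).
probe_status() {
    curl -s -o /dev/null --connect-timeout 2 -w '%{http_code}' "http://$1:$2/"
}

# Succeed only when the observed code ($1) equals the expected one ($2),
# mirroring "status_code 200" in the HTTP_GET block.
status_ok() {
    [ "$1" = "$2" ]
}

# Example (run on a director):
#   code=$(probe_status 192.168.0.46 80)
#   status_ok "$code" 200 && echo "keep real server" || echo "remove real server"
```

A server that answers 403 fails this comparison in the same way the health checker reported it, which is why the real servers only appear in ipvsadm once `/` returns 200.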

[root@ha1 ~]# cd /var/www/html

[root@ha1 html]#

[root@ha1 html]# vim index.html

ha1

On the second director, ha2 (192.168.0.57), do the same in the same directory and set the content of index.html to ha2:

[root@ha2 ~]# cd /var/www/html

[root@ha2 html]# vim index.html

ha2

On the first director, ha1 (192.168.0.47):

[root@ha1 html]# ipvsadm -L -n # both real servers are now listed, as expected

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.0.101:80 rr

-> 192.168.0.46:80 Local 1 0 0

-> 192.168.0.56:80 Route 1 0 0

[root@ha1 html]#

[root@ha1 ~]# ifconfig # why is the VIP on eth0:0 not shown? (worth checking with "ip addr show" as well)

eth0 Link encap:Ethernet HWaddr 00:0C:29:68:EE:69

inet addr:192.168.0.47 Bcast:192.168.0.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe68:ee69/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:4629 errors:0 dropped:0 overruns:0 frame:0

TX packets:810 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:445650 (435.2 KiB) TX bytes:86059 (84.0 KiB)

Interrupt:67 Base address:0x2000

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:560 errors:0 dropped:0 overruns:0 frame:0

TX packets:560 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:50000 (48.8 KiB) TX bytes:50000 (48.8 KiB)

[root@ha1 ~]#

In Ma Ge's demo, the VIP on eth0 was visible.

[root@ha1 ~]# tail -n 20 /var/log/messages

Mar 2 08:36:29 ha1 Keepalived_healthcheckers: Netlink reflector reports IP 192.168.0.101 added

Mar 2 08:36:29 ha1 avahi-daemon[4330]: Registering new address record for 192.168.0.101 on eth0.

Mar 2 08:36:29 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.0.101

Mar 2 08:36:29 ha1 Keepalived_vrrp: Netlink reflector reports IP 192.168.0.101 added

Mar 2 08:36:34 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.0.101

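Mar 2 08:36:41 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Received higher prio advert # ha1 saw a higher-priority advertisement, presumably because ha2 was still running the copied config (state MASTER, priority 101)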

Mar 2 08:36:41 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Entering BACKUP STATE

Mar 2 08:36:41 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) removing protocol VIPs.

Mar 2 08:36:41 ha1 Keepalived_vrrp: Netlink reflector reports IP 192.168.0.101 removed

Mar 2 08:36:41 ha1 Keepalived_healthcheckers: Netlink reflector reports IP 192.168.0.101 removed

Mar 2 08:36:41 ha1 avahi-daemon[4330]: Withdrawing address record for 192.168.0.101 on eth0.

Mar 2 08:37:41 ha1 avahi-daemon[4330]: Invalid query packet.

Mar 2 08:38:21 ha1 last message repeated 8 times

Mar 2 08:38:21 ha1 avahi-daemon[4330]: Invalid query packet.

Mar 2 08:40:40 ha1 ntpd[3966]: time reset +0.766346 s

Mar 2 08:41:15 ha1 avahi-daemon[4330]: Invalid query packet.

Mar 2 08:42:05 ha1 last message repeated 4 times

Mar 2 08:42:09 ha1 last message repeated 5 times

Mar 2 08:44:01 ha1 ntpd[3966]: synchronized to LOCAL(0), stratum 8

................................

On the second director, ha2 (192.168.0.57):

[root@ha2 keepalived]# vim keepalived.conf

..............................................................

vrrp_instance VI_1 {

state BACKUP # changed from MASTER to BACKUP

interface eth0

virtual_router_id 51

priority 100 # priority changed from 101 to 100

advert_int 1

authentication {

auth_type PASS

auth_pass keepalivedpass

}

virtual_ipaddress {

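192.168.0.101/24 dev eth0 lable eth0:0 # "lable" (sic) is a misspelling of the keyword "label"; this is probably why no eth0:0 alias ever shows up in ifconfig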

}

}

..............................................................

Restart keepalived on both directors.

On the first director, ha1 (192.168.0.47):

[root@ha1 ~]# service keepalived restart

Stopping keepalived: [ OK ]

Starting keepalived: [ OK ]

[root@ha1 ~]#

On the second director, ha2 (192.168.0.57):

[root@ha2 keepalived]# service keepalived restart

Stopping keepalived: [ OK ]

Starting keepalived: [ OK ]

[root@ha2 keepalived]#

[root@ha1 ~]# ifconfig # the VIP 192.168.0.101 is not shown

eth0 Link encap:Ethernet HWaddr 00:0C:29:68:EE:69

inet addr:192.168.0.47 Bcast:192.168.0.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe68:ee69/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:7783 errors:0 dropped:0 overruns:0 frame:0

TX packets:2498 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:736150 (718.8 KiB) TX bytes:263608 (257.4 KiB)

Interrupt:67 Base address:0x2000

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:1793 errors:0 dropped:0 overruns:0 frame:0

TX packets:1793 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:162066 (158.2 KiB) TX bytes:162066 (158.2 KiB)

[root@ha1 ~]#

[root@ha1 ~]# ip addr show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast qlen 1000

link/ether 00:0c:29:68:ee:69 brd ff:ff:ff:ff:ff:ff

inet 192.168.0.47/24 brd 192.168.0.255 scope global eth0

inet 192.168.0.101/24 scope global secondary eth0 # the VIP 192.168.0.101 is present, as a secondary address on eth0 (without an eth0:0 label)

inet6 fe80::20c:29ff:fe68:ee69/64 scope link

valid_lft forever preferred_lft forever

3: sit0: <NOARP> mtu 1480 qdisc noop

link/sit 0.0.0.0 brd 0.0.0.0

[root@ha1 ~]#
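A quick way to settle whether the VIP is on a node is to look for it in the `ip addr show` output rather than in `ifconfig`. As far as I know, the old net-tools `ifconfig` lists only an interface's primary address plus labelled aliases such as eth0:0, so an unlabelled secondary address stays invisible there. The helper below is a minimal sketch; `has_vip` is a hypothetical name, and it reads the command output from stdin so it can also be tried on saved output.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the address given as $1 appears as an
# "inet" entry in `ip addr show` output read from stdin.
has_vip() {
    awk -v vip="$1" '
        $1 == "inet" { split($2, a, "/"); if (a[1] == vip) found = 1 }
        END { exit (found ? 0 : 1) }
    '
}

# Example (on a director):
#   ip addr show eth0 | has_vip 192.168.0.101 && echo "VIP is on this node"
```

Comparing the address part before the `/` avoids a false match on, say, 192.168.0.10 when looking for 192.168.0.101.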

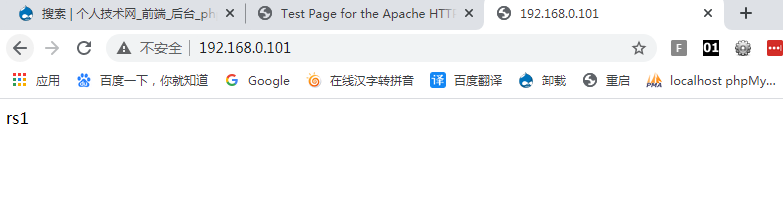

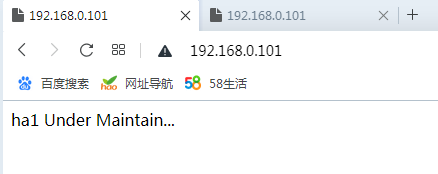

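http://192.168.0.101/ works (see the screenshots), although it stalls a little after a few refreshes.

On the first real server, rs1 (192.168.0.46):

[root@rs1 html]# service httpd stop # stop the web service

Stopping httpd: [ OK ]

[root@rs1 html]#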

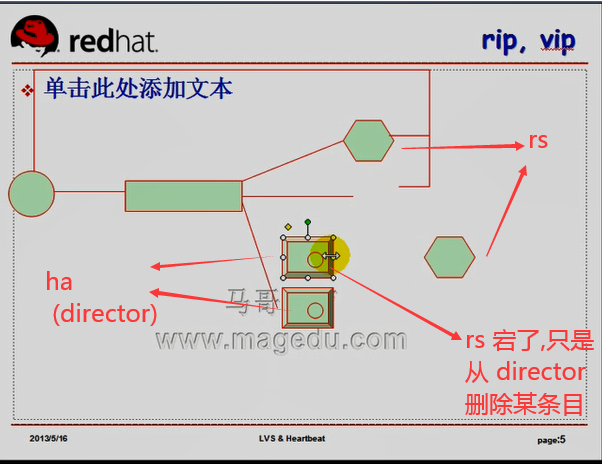

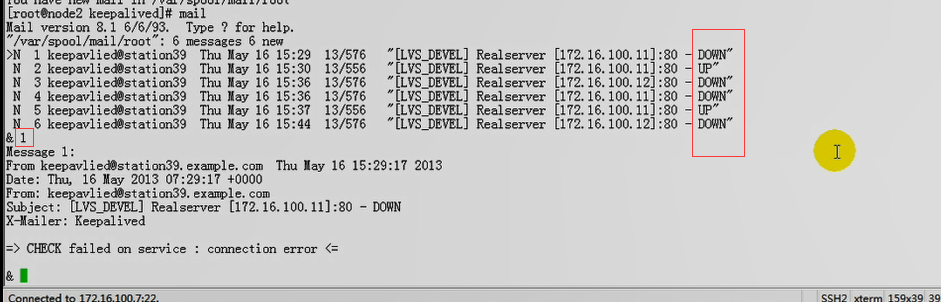

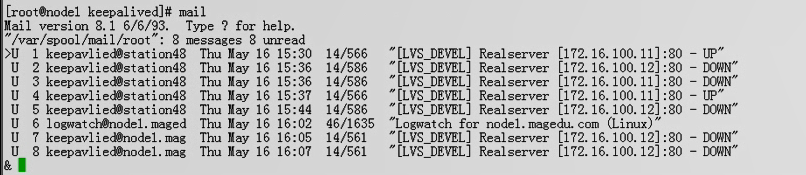

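Taking rs1 down does not cause keepalived to move the VIP. Only one back-end real server has failed, so its entry is simply deleted.

On the first director, ha1 (192.168.0.47):

[root@ha1 ~]# ipvsadm -L -n # rs1 is no longer listed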

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.0.101:80 rr

-> 192.168.0.56:80 Route 1 0 0

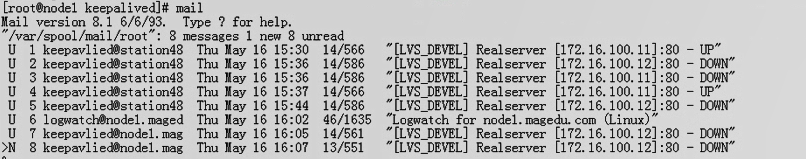

You have new mail in /var/spool/mail/root

[root@ha1 ~]# mail

Mail version 8.1 6/6/93. Type ? for help.

"/var/spool/mail/root": 48 messages 48 new

& 48

Message 48:

From keepalived@ha1.magedu.com Tue Mar 2 09:27:20 2021

Date: Tue, 02 Mar 2021 01:27:20 +0000

From: keepalived@ha1.magedu.com

Subject: [LVS_DEVEL] Realserver 192.168.0.46:80 - DOWN

X-Mailer: Keepalived

=> CHECK failed on service : connection error <=

& q

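http://192.168.0.101/ now gives the result shown in the screenshot.

On the first real server, rs1 (192.168.0.46):

[root@rs1 html]# service httpd start # bring it back online

Starting httpd: [ OK ]

[root@rs1 html]#

keepalived checks frequently, and as soon as a check succeeds the real server is added back.

[root@ha1 ~]# ipvsadm -L -n # rs1 (192.168.0.46) is listed again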

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.0.101:80 rr

-> 192.168.0.46:80 Route 1 0 0

-> 192.168.0.56:80 Route 1 0 5

You have new mail in /var/spool/mail/root

[root@ha1 ~]#

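If both real servers go down, there should be a fallback server.

On the first director, ha1 (192.168.0.47),

and on the second director, ha2 (192.168.0.57),

install httpd (I had in fact already installed it): # yum -y install httpd

Manually simulating a failure of ha1

High availability for the real servers is actually provided by the health checks running on the front-end director. When a real server fails, no address failover takes place; the director (ha) simply deletes that real server's entry from the ipvs rules shown by ipvsadm.

If the master director fails, the VIP has to move. (But how can the VIP be moved while the master is still healthy, for example to put it into standby for maintenance?)

Use a vrrp_script to monitor this.

1. What to do when all real servers are down

When all real servers are down, we want a local web service on the director itself to return an error page. There are two director nodes and we cannot know in advance which one is active at a given moment, so the local web service must be installed on both.

2. Write a check script that switches a node into maintenance mode (a manual switchover, achieved by changing the node's priority)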

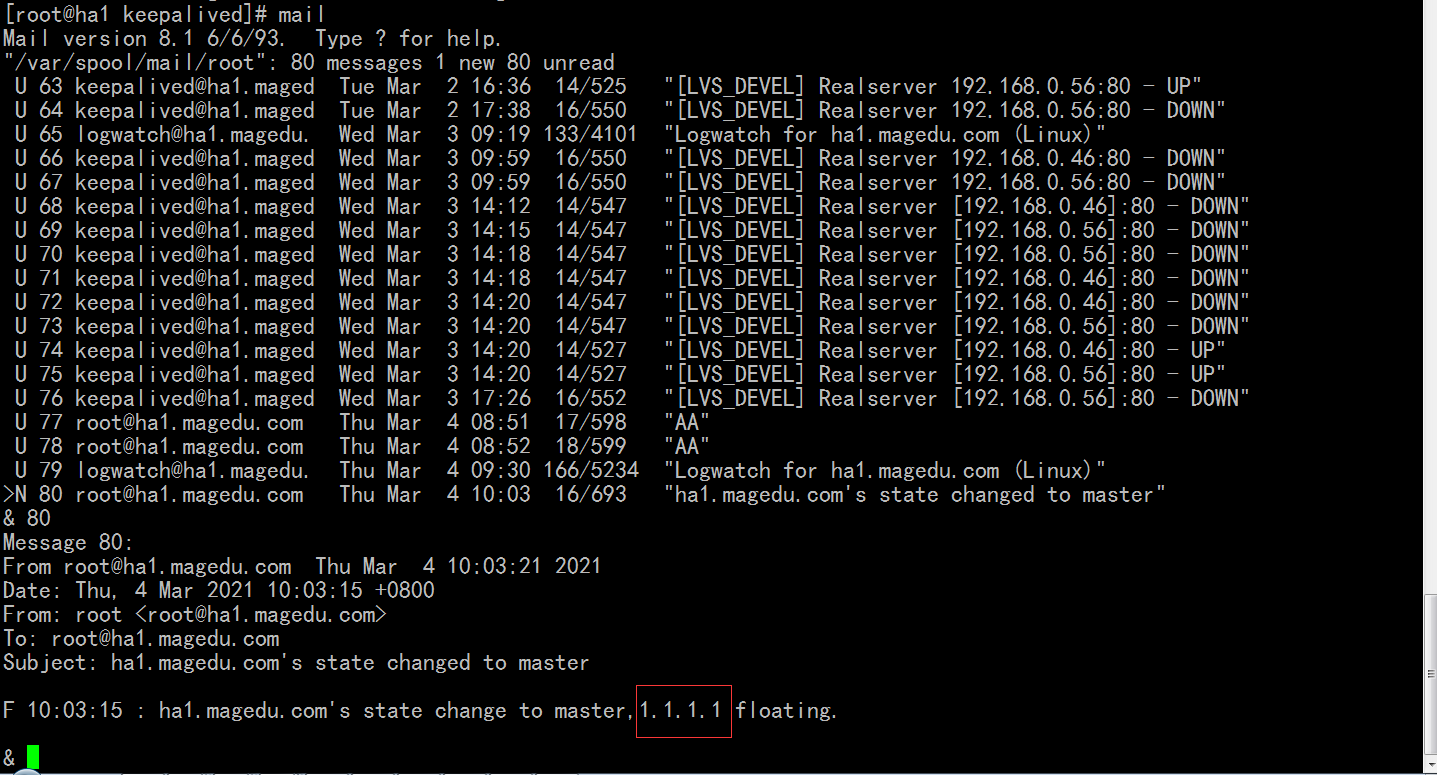

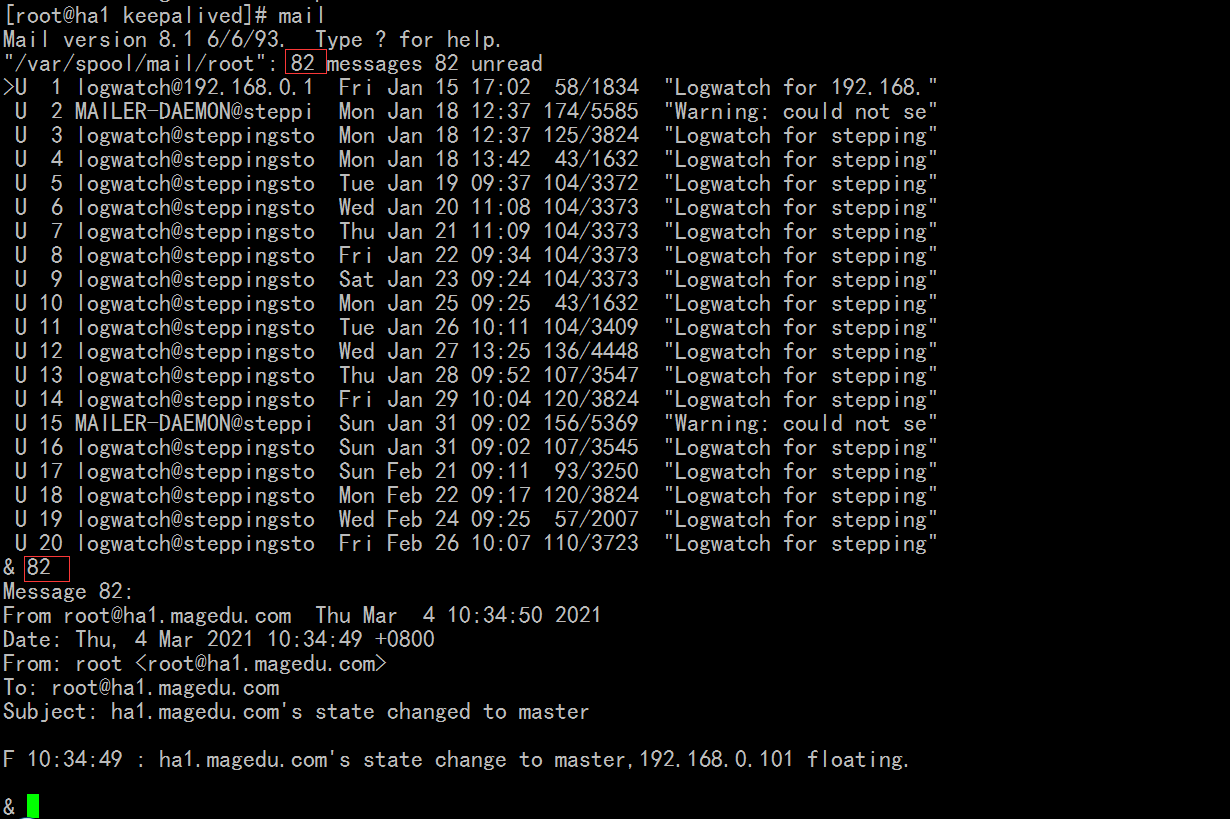

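3. How to send an alert e-mail to a designated administrator when a VRRP event occurs (a master/backup switchover, or both directors failing)

On the first director, ha1 (192.168.0.47):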

[root@ha1 ~]# cd /var/www/html/

[root@ha1 html]# ls

index.html

[root@ha1 html]# vim index.html

ha1 Under Maintain...

On the second director, ha2 (192.168.0.57):

[root@ha2 keepalived]# cd /var/www/html/

[root@ha2 html]# vim index.html

ha2 Under Maintain...

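When all real servers are down, requests are redirected to the web service on the director itself.

On the first director, ha1 (192.168.0.47):

[root@ha1 html]# service httpd restart

Stopping httpd: [ OK ]

Starting httpd: [ OK ]

[root@ha1 html]#

On the second director, ha2 (192.168.0.57):

[root@ha2 html]# service httpd restart

Stopping httpd: [ OK ]

Starting httpd: [ OK ]

[root@ha2 html]#

On the first director, ha1 (192.168.0.47):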

[root@ha1 html]# cd /etc/keepalived/

[root@ha1 keepalived]# vim keepalived.conf

.............................

virtual_server 192.168.0.101 80 {

delay_loop 6

lb_algo rr

lb_kind DR

nat_mask 255.255.255.0

# persistence_timeout 50

protocol TCP

sorry_server 127.0.0.1 80 # add this line: it is used when all real servers have failed; both the address and the port must be given

.............................

On the second director, ha2 (192.168.0.57):

[root@ha2 html]# cd /etc/keepalived/

[root@ha2 keepalived]# vim keepalived.conf

.............................

virtual_server 192.168.0.101 80 {

delay_loop 6

lb_algo rr

lb_kind DR

nat_mask 255.255.255.0

# persistence_timeout 50

protocol TCP

sorry_server 127.0.0.1 80 # add this line

.............................

Restart keepalived on both directors.

On the first director, ha1 (192.168.0.47):

[root@ha1 keepalived]# service keepalived restart

Stopping keepalived: [ OK ]

Starting keepalived: [ OK ]

[root@ha1 keepalived]#

On the second director, ha2 (192.168.0.57):

[root@ha2 keepalived]# service keepalived restart

Stopping keepalived: [ OK ]

Starting keepalived: [ OK ]

[root@ha2 keepalived]#

On the first director, ha1 (192.168.0.47):

[root@ha1 keepalived]# tail /var/log/messages

Mar 2 14:26:40 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Transition to MASTER STATE

Mar 2 14:26:41 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Entering MASTER STATE

Mar 2 14:26:41 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) setting protocol VIPs.

Mar 2 14:26:41 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.0.101

Mar 2 14:26:41 ha1 Keepalived_healthcheckers: Netlink reflector reports IP 192.168.0.101 added

Mar 2 14:26:41 ha1 avahi-daemon[4330]: Registering new address record for 192.168.0.101 on eth0.

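Mar 2 14:26:41 ha1 Keepalived_vrrp: Netlink reflector reports IP 192.168.0.101 added # no problem reported. This line comes from the VRRP process, not from the process that health-checks the back-end real servers; it appears to be watching the state of the local interface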

Mar 2 14:26:42 ha1 ntpd[3966]: synchronized to LOCAL(0), stratum 8

Mar 2 14:26:46 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.0.101

Mar 2 14:30:38 ha1 ntpd[3966]: synchronized to 162.159.200.123, stratum 3

[root@ha1 keepalived]#

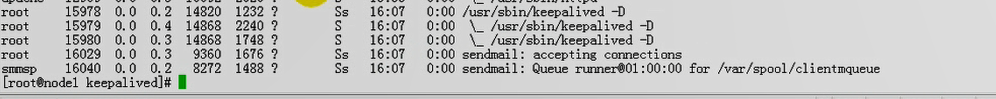

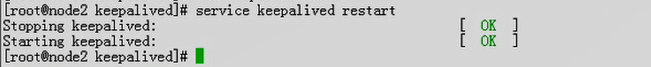

[root@ha1 keepalived]# ps auxf # shows the keepalived processes; the f option draws the process tree

USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND

root 1 0.0 0.0 2176 640 ? Ss 08:18 0:00 init [3]

root 2 0.0 0.0 0 0 ? S< 08:18 0:00 [migration/0]

root 3 0.0 0.0 0 0 ? SN 08:18 0:00 [ksoftirqd/0]

root 4 0.0 0.0 0 0 ? S< 08:18 0:00 [migration/1]

root 5 0.0 0.0 0 0 ? SN 08:18 0:00 [ksoftirqd/1]

root 6 0.0 0.0 0 0 ? S< 08:18 0:00 [migration/2]

root 7 0.0 0.0 0 0 ? SN 08:18 0:00 [ksoftirqd/2]

root 8 0.0 0.0 0 0 ? S< 08:18 0:00 [migration/3]

root 9 0.0 0.0 0 0 ? SN 08:18 0:00 [ksoftirqd/3]

root 10 0.0 0.0 0 0 ? S< 08:18 0:00 [events/0]

root 11 0.0 0.0 0 0 ? S< 08:18 0:00 [events/1]

root 12 0.0 0.0 0 0 ? S< 08:18 0:00 [events/2]

root 13 0.0 0.0 0 0 ? S< 08:18 0:00 [events/3]

root 14 0.0 0.0 0 0 ? S< 08:18 0:00 [khelper]

root 15 0.0 0.0 0 0 ? S< 08:18 0:00 [kthread]

root 21 0.0 0.0 0 0 ? S< 08:18 0:00 \_ [kblockd/0]

root 22 0.0 0.0 0 0 ? S< 08:18 0:00 \_ [kblockd/1]

root 23 0.0 0.0 0 0 ? S< 08:18 0:00 \_ [kblockd/2]

root 24 0.0 0.0 0 0 ? S< 08:18 0:00 \_ [kblockd/3]

root 25 0.0 0.0 0 0 ? S< 08:18 0:00 \_ [kacpid]

root 194 0.0 0.0 0 0 ? S< 08:18 0:00 \_ [cqueue/0]

root 195 0.0 0.0 0 0 ? S< 08:18 0:00 \_ [cqueue/1]

root 196 0.0 0.0 0 0 ? S< 08:18 0:00 \_ [cqueue/2]

root 197 0.0 0.0 0 0 ? S< 08:18 0:00 \_ [cqueue/3]

root 200 0.0 0.0 0 0 ? S< 08:18 0:00 \_ [khubd]

root 202 0.0 0.0 0 0 ? S< 08:18 0:00 \_ [kseriod]

root 285 0.0 0.0 0 0 ? S 08:18 0:00 \_ [khungtask]

root 286 0.0 0.0 0 0 ? S 08:18 0:00 \_ [pdflush]

root 287 0.0 0.0 0 0 ? S 08:18 0:00 \_ [pdflush]

root 288 0.0 0.0 0 0 ? S< 08:18 0:00 \_ [kswapd0]

root 289 0.0 0.0 0 0 ? S< 08:18 0:00 \_ [aio/0]

root 290 0.0 0.0 0 0 ? S< 08:18 0:00 \_ [aio/1]

root 291 0.0 0.0 0 0 ? S< 08:18 0:00 \_ [aio/2]

root 292 0.0 0.0 0 0 ? S< 08:18 0:00 \_ [aio/3]

root 510 0.0 0.0 0 0 ? S< 08:18 0:00 \_ [kpsmoused]

root 576 0.0 0.0 0 0 ? S< 08:18 0:00 \_ [mpt_poll_]

root 577 0.0 0.0 0 0 ? S< 08:18 0:00 \_ [mpt/0]

root 578 0.0 0.0 0 0 ? S< 08:18 0:00 \_ [scsi_eh_0]

root 584 0.0 0.0 0 0 ? S< 08:18 0:00 \_ [ata/0]

root 585 0.0 0.0 0 0 ? S< 08:18 0:00 \_ [ata/1]

root 586 0.0 0.0 0 0 ? S< 08:18 0:00 \_ [ata/2]

root 587 0.0 0.0 0 0 ? S< 08:18 0:00 \_ [ata/3]

root 588 0.0 0.0 0 0 ? S< 08:18 0:00 \_ [ata_aux]

root 599 0.0 0.0 0 0 ? S< 08:18 0:00 \_ [kstriped]

root 620 0.0 0.0 0 0 ? S< 08:18 0:00 \_ [ksnapd]

root 643 0.0 0.0 0 0 ? S< 08:19 0:00 \_ [kjournald]

root 674 0.0 0.0 0 0 ? S< 08:19 0:00 \_ [kauditd]

root 1904 0.0 0.0 0 0 ? S< 08:19 0:00 \_ [kgameport]

root 2515 0.0 0.0 0 0 ? S< 08:19 0:00 \_ [kmpathd/0]

root 2516 0.0 0.0 0 0 ? S< 08:19 0:00 \_ [kmpathd/1]

root 2517 0.0 0.0 0 0 ? S< 08:19 0:00 \_ [kmpathd/2]

root 2518 0.0 0.0 0 0 ? S< 08:19 0:00 \_ [kmpathd/3]

root 2519 0.0 0.0 0 0 ? S< 08:19 0:00 \_ [kmpath_ha]

root 2541 0.0 0.0 0 0 ? S< 08:19 0:00 \_ [kjournald]

root 2742 0.0 0.0 0 0 ? S< 08:19 0:00 \_ [iscsi_eh]

root 2806 0.0 0.0 0 0 ? S< 08:19 0:00 \_ [cnic_wq]

root 2812 0.0 0.0 0 0 ? S< 08:19 0:00 \_ [bnx2i_thr]

root 2813 0.0 0.0 0 0 ? S< 08:19 0:00 \_ [bnx2i_thr]

root 2814 0.0 0.0 0 0 ? S< 08:19 0:00 \_ [bnx2i_thr]

root 2815 0.0 0.0 0 0 ? S< 08:19 0:00 \_ [bnx2i_thr]

root 2832 0.0 0.0 0 0 ? S< 08:19 0:00 \_ [ib_addr]

root 2854 0.0 0.0 0 0 ? S< 08:19 0:00 \_ [ib_mcast]

root 2855 0.0 0.0 0 0 ? S< 08:19 0:00 \_ [ib_inform]

root 2856 0.0 0.0 0 0 ? S< 08:19 0:00 \_ [local_sa]

root 2862 0.0 0.0 0 0 ? S< 08:19 0:00 \_ [iw_cm_wq]

root 2868 0.0 0.0 0 0 ? S< 08:19 0:00 \_ [ib_cm/0]

root 2869 0.0 0.0 0 0 ? S< 08:19 0:00 \_ [ib_cm/1]

root 2870 0.0 0.0 0 0 ? S< 08:19 0:00 \_ [ib_cm/2]

root 2871 0.0 0.0 0 0 ? S< 08:19 0:00 \_ [ib_cm/3]

root 2877 0.0 0.0 0 0 ? S< 08:19 0:00 \_ [rdma_cm]

root 3530 0.0 0.0 0 0 ? S< 08:20 0:00 \_ [rpciod/0]

root 3531 0.0 0.0 0 0 ? S< 08:20 0:00 \_ [rpciod/1]

root 3532 0.0 0.0 0 0 ? S< 08:20 0:00 \_ [rpciod/2]

root 3533 0.0 0.0 0 0 ? S< 08:20 0:00 \_ [rpciod/3]

root 4044 0.0 0.0 0 0 ? S< 08:20 0:00 \_ [nfsd4]

root 707 0.0 0.1 3236 1664 ? S<s 08:19 0:00 /sbin/udevd -d

root 2899 0.0 2.1 22524 22516 ? S<Lsl 08:19 0:00 iscsiuio

root 2904 0.0 0.0 2372 416 ? Ss 08:19 0:00 iscsid

root 2905 0.0 0.2 2836 2832 ? S<Ls 08:19 0:00 iscsid

root 3056 0.0 0.0 2284 516 ? Ss 08:19 0:00 mcstransd

root 3339 0.0 0.0 12652 748 ? S<sl 08:20 0:00 auditd

root 3341 0.0 0.0 12184 700 ? S<sl 08:20 0:00 \_ /sbin/audis

root 3363 0.0 1.0 12804 11320 ? Ss 08:20 0:00 /usr/sbin/resto

root 3378 0.0 0.0 1832 580 ? Ss 08:20 0:00 syslogd -m 0

root 3381 0.0 0.0 1780 400 ? Ss 08:20 0:00 klogd -x

root 3459 0.0 0.0 2580 376 ? Ss 08:20 0:00 irqbalance

rpc 3492 0.0 0.0 1928 548 ? Ss 08:20 0:00 portmap

rpcuser 3542 0.0 0.0 1980 740 ? Ss 08:20 0:00 rpc.statd

dbus 3612 0.0 0.1 13112 1096 ? Ssl 08:20 0:00 dbus-daemon --s

root 3629 0.0 1.2 45020 12808 ? Ssl 08:20 0:00 /usr/bin/python

root 3643 0.0 0.0 2272 776 ? Ss 08:20 0:00 /usr/sbin/hcid

root 3650 0.0 0.0 1848 512 ? Ss 08:20 0:00 /usr/sbin/sdpd

root 3683 0.0 0.0 0 0 ? S< 08:20 0:00 [krfcommd]

root 3731 0.0 0.1 12848 1296 ? Ssl 08:20 0:00 pcscd

root 3746 0.0 0.0 1776 524 ? Ss 08:20 0:00 /usr/sbin/acpid

68 3765 0.0 0.4 7076 5020 ? Ss 08:20 0:01 hald

root 3766 0.0 0.0 3284 988 ? S 08:20 0:00 \_ hald-runner

68 3775 0.0 0.0 2124 808 ? S 08:20 0:00 \_ hald-ad

68 3788 0.0 0.0 2124 800 ? S 08:20 0:00 \_ hald-ad

root 3797 0.0 0.0 2076 644 ? S 08:20 0:00 \_ hald-ad

root 3829 0.0 0.0 2024 452 ? Ss 08:20 0:00 /usr/bin/hidd -

root 3872 0.0 0.1 27388 1388 ? Ssl 08:20 0:00 automount --pid

root 3894 0.0 0.0 5280 512 ? Ss 08:20 0:00 ./hpiod

root 3899 0.0 0.4 13484 4332 ? S 08:20 0:00 /usr/bin/python

root 3917 0.0 0.1 7328 1048 ? Ss 08:20 0:00 /usr/sbin/sshd

root 4394 0.0 1.1 18268 11448 ? Ss 08:24 0:00 \_ sshd: root@

root 4396 0.0 0.1 5028 1544 pts/0 Ss 08:24 0:00 \_ -bash

root 5562 0.0 0.0 4720 956 pts/0 R+ 14:34 0:00 \_ ps

root 3931 0.0 1.0 18236 10448 ? Ss 08:20 0:00 cupsd

root 3949 0.0 0.0 2852 852 ? Ss 08:20 0:00 xinetd -stayali

ntp 3966 0.0 0.4 4532 4528 ? SLs 08:20 0:00 ntpd -u ntp:ntp

root 4014 0.0 0.0 4060 248 ? Ss 08:20 0:00 rpc.rquotad

root 4045 0.0 0.0 0 0 ? S 08:20 0:00 [lockd]

root 4046 0.0 0.0 0 0 ? S 08:20 0:00 [nfsd]

root 4047 0.0 0.0 0 0 ? S 08:20 0:00 [nfsd]

root 4048 0.0 0.0 0 0 ? S 08:20 0:00 [nfsd]

root 4049 0.0 0.0 0 0 ? S 08:20 0:00 [nfsd]

root 4050 0.0 0.0 0 0 ? S 08:20 0:00 [nfsd]

root 4051 0.0 0.0 0 0 ? S 08:20 0:00 [nfsd]

root 4052 0.0 0.0 0 0 ? S 08:20 0:00 [nfsd]

root 4053 0.0 0.0 0 0 ? S 08:20 0:00 [nfsd]

root 4056 0.0 0.0 2036 288 ? Ss 08:20 0:00 rpc.mountd

root 4136 0.0 0.0 2328 524 ? Ss 08:20 0:00 rpc.idmapd

root 4159 0.0 0.1 9384 1896 ? Ss 08:20 0:00 sendmail: accep

smmsp 4168 0.0 0.1 8292 1512 ? Ss 08:20 0:00 sendmail: Queue

root 4183 0.0 0.0 2012 376 ? Ss 08:20 0:00 gpm -m /dev/inp

root 4212 0.0 0.1 5396 1184 ? Ss 08:20 0:00 crond

xfs 4256 0.0 0.1 3956 1572 ? Ss 08:20 0:00 xfs -droppriv -

root 4283 0.0 0.0 2376 440 ? Ss 08:20 0:00 /usr/sbin/atd

root 4301 0.0 0.0 4612 652 ? Ss 08:20 0:00 /usr/bin/rhsmce

avahi 4330 0.0 0.1 2836 1304 ? Ss 08:20 0:00 avahi-daemon: r

avahi 4331 0.0 0.0 2716 328 ? Ss 08:20 0:00 \_ avahi-daemo

root 4362 0.0 0.0 5872 576 ? S 08:20 0:00 /usr/sbin/smart

root 4366 0.0 0.0 1764 440 tty1 Ss+ 08:20 0:00 /sbin/mingetty

root 4367 0.0 0.0 1764 440 tty2 Ss+ 08:20 0:00 /sbin/mingetty

root 4368 0.0 0.0 1764 444 tty3 Ss+ 08:20 0:00 /sbin/mingetty

root 4369 0.0 0.0 1764 444 tty4 Ss+ 08:20 0:00 /sbin/mingetty

root 4371 0.0 0.0 1764 444 tty5 Ss+ 08:20 0:00 /sbin/mingetty

root 4372 0.0 0.0 1764 444 tty6 Ss+ 08:20 0:00 /sbin/mingetty

root 4382 0.0 1.0 26072 10364 ? SN 08:20 0:00 /usr/bin/python

root 4384 0.0 0.1 2676 1136 ? SN 08:20 0:00 /usr/libexec/ga

root 5427 0.0 0.9 22764 9404 ? Ss 13:34 0:00 /usr/sbin/httpd

apache 5429 0.0 0.5 22764 5444 ? S 13:34 0:00 \_ /usr/sbin/h

apache 5430 0.0 0.4 22764 4772 ? S 13:34 0:00 \_ /usr/sbin/h

apache 5431 0.0 0.5 22764 5420 ? S 13:34 0:00 \_ /usr/sbin/h

apache 5432 0.0 0.4 22764 4772 ? S 13:34 0:00 \_ /usr/sbin/h

apache 5433 0.0 0.4 22764 4772 ? S 13:34 0:00 \_ /usr/sbin/h

apache 5434 0.0 0.4 22764 4772 ? S 13:34 0:00 \_ /usr/sbin/h

apache 5435 0.0 0.4 22764 4772 ? S 13:34 0:00 \_ /usr/sbin/h

apache 5436 0.0 0.4 22764 4772 ? S 13:34 0:00 \_ /usr/sbin/h

root 5539 0.0 0.0 5080 456 ? Ss 14:26 0:00 keepalived -D # keepalived is multi-process: one parent with several child processes

root 5540 0.0 0.1 5128 1312 ? S 14:26 0:00 \_ keepalived

root 5541 0.0 0.0 5128 944 ? S 14:26 0:00 \_ keepalived

[root@ha1 keepalived]#

[root@ha1 keepalived]# ifconfig

eth0 Link encap:Ethernet HWaddr 00:0C:29:68:EE:69

inet addr:192.168.0.47 Bcast:192.168.0.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe68:ee69/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:57952 errors:0 dropped:0 overruns:0 frame:0

TX packets:57616 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:6774838 (6.4 MiB) TX bytes:4319467 (4.1 MiB)

Interrupt:67 Base address:0x2000

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:3379 errors:0 dropped:0 overruns:0 frame:0

TX packets:3379 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:305855 (298.6 KiB) TX bytes:305855 (298.6 KiB)

[root@ha1 keepalived]#

[root@ha1 keepalived]# ip addr show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast qlen 1000

link/ether 00:0c:29:68:ee:69 brd ff:ff:ff:ff:ff:ff

inet 192.168.0.47/24 brd 192.168.0.255 scope global eth0

inet 192.168.0.101/24 scope global secondary eth0 # 192.168.0.101 is present

inet6 fe80::20c:29ff:fe68:ee69/64 scope link

valid_lft forever preferred_lft forever

3: sit0: <NOARP> mtu 1480 qdisc noop

link/sit 0.0.0.0 brd 0.0.0.0

[root@ha1 keepalived]#

[root@ha1 keepalived]# ipvsadm -L -n # both real servers are present

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.0.101:80 rr

-> 192.168.0.56:80 Route 1 0 0

-> 192.168.0.46:80 Route 1 0 0

[root@ha1 keepalived]#

On the second real server, rs2 (192.168.0.56):

[root@rs2 html]# service httpd stop # take the second real server down

Stopping httpd: [ OK ]

[root@rs2 html]#

On the first director, ha1 (192.168.0.47):

[root@ha1 keepalived]# ipvsadm -L -n # rs2 is gone

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.0.101:80 rr

-> 192.168.0.46:80 Route 1 0 0

You have new mail in /var/spool/mail/root

[root@ha1 keepalived]#

On the first real server, rs1 (192.168.0.46):

[root@rs1 html]# service httpd stop # take rs1 down

Stopping httpd: [ OK ]

[root@rs1 html]#

On the first director, ha1 (192.168.0.47):

[root@ha1 keepalived]# ipvsadm -L -n # rs1 is gone too, and 127.0.0.1 has been added

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.0.101:80 rr

-> 127.0.0.1:80 Local 1 0 0

You have new mail in /var/spool/mail/root

[root@ha1 keepalived]#
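The three ipvsadm listings above follow one simple rule: schedule whichever real servers pass their health check, and use the sorry server only when none is left. The function below is just a model of that observed behaviour, not keepalived code; `active_targets` is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical model of the behaviour seen above, not keepalived code.
# $1 = sorry server (addr:port); remaining args = real servers that
# currently pass their health check.
active_targets() {
    sorry=$1
    shift
    if [ "$#" -gt 0 ]; then
        printf '%s\n' "$@"      # healthy real servers are scheduled
    else
        printf '%s\n' "$sorry"  # none left: fall back to the sorry server
    fi
}

active_targets 127.0.0.1:80 192.168.0.46:80   # only rs1 is healthy
active_targets 127.0.0.1:80                   # no real server is healthy
```

The model also covers recovery: as soon as one real server is healthy again, the sorry server drops out of the list.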

On Ma Ge's side this worked, and the error page was displayed.

Looking into it more closely, I got the impression that 192.168.0.101 had ended up on rs1 or rs2:

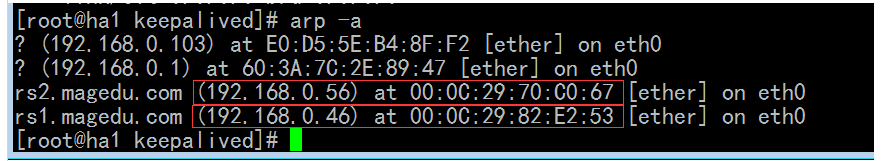

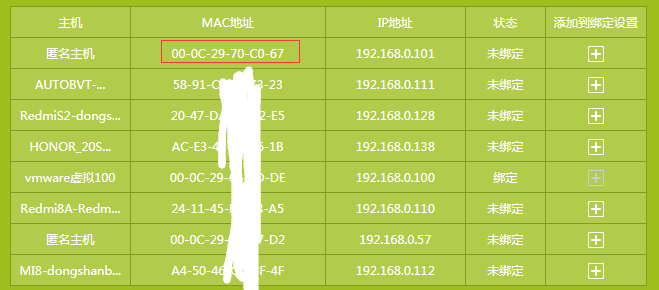

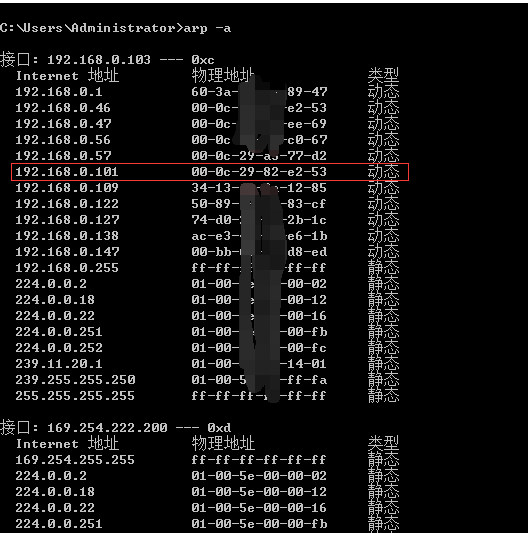

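Check the hardware (MAC) address on ha1.

On the router, 192.168.0.101 resolves to rs2 (192.168.0.56).

On my local Windows machine, 192.168.0.101 resolves to rs1 (192.168.0.46).

By rights, 192.168.0.101 should be on ha1. A real server answering ARP for the VIP is the usual symptom of missing ARP suppression (arp_ignore / arp_announce) on the real servers in DR mode, which may be what is happening here.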
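The same check can be made from a Linux client in the LAN by asking which MAC address the neighbour table holds for the VIP and comparing it with ha1's eth0 address (00:0c:29:68:ee:69 in the output earlier). This is only a sketch: `mac_for_ip` is a hypothetical helper, and it assumes the usual `ip neigh show` line format of `ADDRESS dev IFACE lladdr MAC STATE`.

```shell
#!/bin/sh
# Hypothetical helper: print the MAC address that the neighbour table
# (read from stdin, `ip neigh show` format) holds for the address in $1.
mac_for_ip() {
    awk -v ip="$1" '
        $1 == ip { for (i = 2; i < NF; i++) if ($i == "lladdr") print $(i + 1) }
    '
}

# Example (on a Linux client in the same LAN):
#   HA1_MAC=00:0c:29:68:ee:69
#   [ "$(ip neigh show | mac_for_ip 192.168.0.101)" = "$HA1_MAC" ] \
#       || echo "the VIP is being answered by another host"
```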

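I found that stopping the web service on rs1 and rs2 is not enough; inbound port 80 also has to be blocked with iptables on rs1 and rs2 (shutting rs1 and rs2 down would work as well):

# iptables -A INPUT -p tcp --dport 80 -j DROP

http://192.168.0.101 now shows the error page.

Why does Ma Ge's setup behave differently from mine?

Could it be that my keepalived version is older? Ma Ge uses keepalived-1.2.7 and I use keepalived-1.1.20.

Remove the iptables rules on both real servers (# iptables -F) and start the web service on both (# service httpd start).

On the first director, ha1 (192.168.0.47):

[root@ha1 keepalived]# ipvsadm -L -n # the local sorry-server entry is gone and the two real servers are back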

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.0.101:80 rr

-> 192.168.0.56:80 Route 1 0 0

-> 192.168.0.46:80 Route 1 0 0

[root@ha1 keepalived]#

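A node can of course be taken down by hand to simulate a failure, for example by bringing down the interface that carries the VIP inside the virtual machine; the VIP on that node stops working and fails over.

But how do we take a node out of service using a maintenance mode?

[root@ha1 keepalived]# man keepalived.conf

Searching the man page for vrrp_script finds nothing. vrrp_script is a newer feature and the man page is somewhat dated (written in 2002), so the documentation has not caught up.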

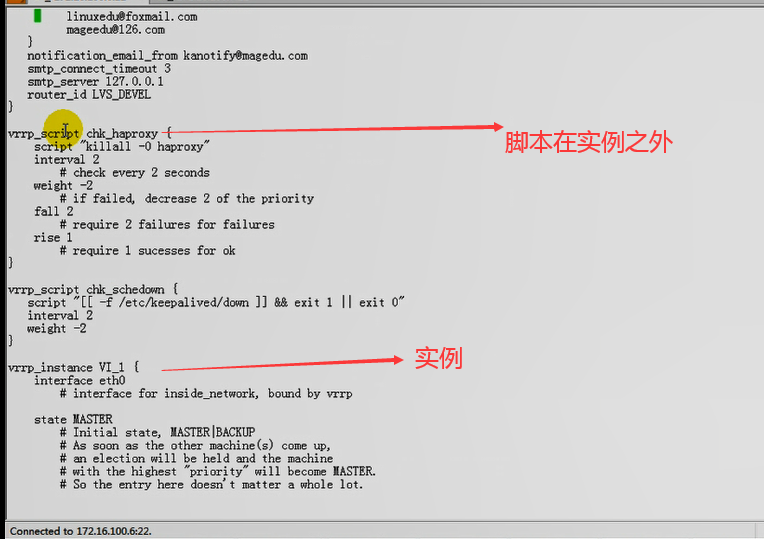

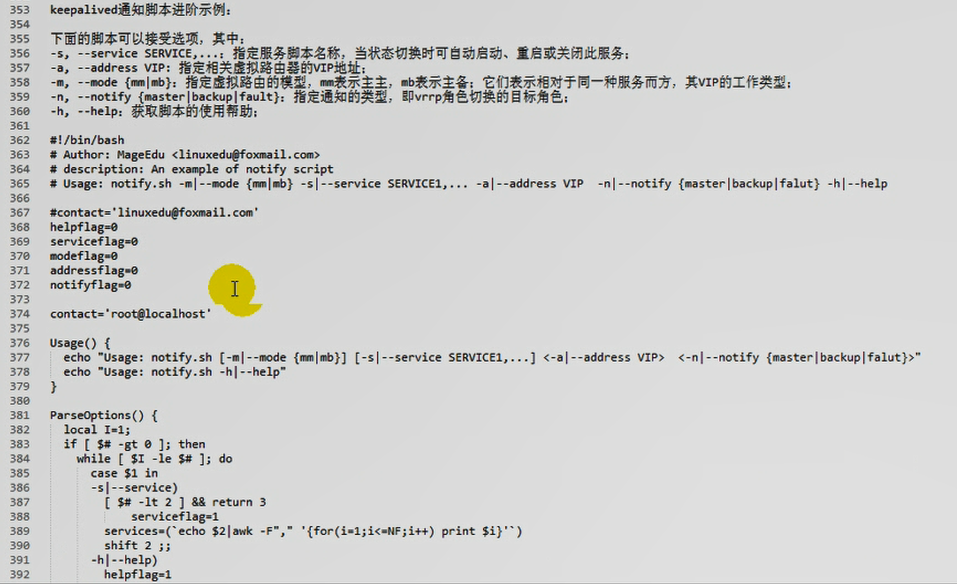

[root@ha1 keepalived]# vim keepalived.conf.haproxy_example

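# Two scripts are defined outside the instance; each only needs a name.

Each vrrp_script block uses the following directives:

vrrp_script chk_haproxy {

script "killall -0 haproxy" # external command or script that checks some service; it must be enclosed in double quotes

interval 2 # how often the script is run

# check every 2 seconds

weight -2 # on failure, lower this node's priority by 2

# if failed, decrease 2 of the priority

fall 2 # count as failed only after 2 failed checks, to avoid false alarms

# require 2 failures for failures

rise 1 # a single successful check counts as recovered

# require 1 successes for ok

}

vrrp_script chk_schedown { # used to simulate check success and failure by hand

script "[ -f /etc/keepalived/down ] && exit 1 || exit 0" # also a script; it must be enclosed in double quotes

interval 2

weight -2

}

vrrp_script chk_name { # chk_name is the name given to this check

script "" # the script to run: either the path to a script file or a command

interval 2 # how often to check, in seconds

weight -2 # change applied to the priority when the check fails

fall 2 # optional; there is a default

rise 1 # optional; there is a default

}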
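How fall and rise turn a stream of individual check results into an up/down decision can be modelled in a few lines. This is a simplified model written for these notes, not keepalived's implementation; `track_state` is a hypothetical name, and it assumes the script starts out in the "up" state.

```shell
#!/bin/sh
# Hypothetical model of fall/rise counting; not keepalived's implementation.
# Usage: track_state FALL RISE result...   (each result is "ok" or "fail")
# Prints the final state, "up" or "down". Assumes the initial state is "up".
track_state() {
    fall=$1; rise=$2; shift 2
    state=up; fails=0; oks=0
    for r in "$@"; do
        if [ "$r" = ok ]; then
            oks=$((oks + 1)); fails=0
            if [ "$state" = down ] && [ "$oks" -ge "$rise" ]; then state=up; fi
        else
            fails=$((fails + 1)); oks=0
            if [ "$state" = up ] && [ "$fails" -ge "$fall" ]; then state=down; fi
        fi
    done
    echo "$state"
}

track_state 2 1 fail            # one failure is tolerated
track_state 2 1 fail fail       # two consecutive failures mark it down
track_state 2 1 fail fail ok    # one success brings it back
```

With fall 2, a single failed run between two successful ones never changes the state, which is the "avoid false alarms" effect noted in the comments.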

On the first director, ha1 (192.168.0.47):

[root@ha1 ~]# cd /etc/keepalived/

[root@ha1 keepalived]# vim keepalived.conf

.............................

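# add the following

vrrp_script chk_schedown { # the script is defined outside the instance below

script "[ -e /etc/keepalived/down ] && exit 1 || exit 0" # if the file exists (it is created by hand precisely to force a failure) the check fails; if it does not exist the check succeeds. Note: there must be a space between [ and -e

interval 1 # check once per second; keepalived is lightweight enough for a 1-second interval

weight -5 # on failure, lower the priority by 5; all that matters is that the result is below the backup node's priority

fall 2 # declare failure only after 2 failed checks, i.e. after about 2 x interval = 2 seconds

rise 1 # a single success is enough to recover

}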
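The arithmetic behind weight -5 is worth spelling out with the priorities used in this setup (ha1 at 101, ha2 at 100). The helper below is only an illustration; `effective_priority` is a hypothetical name, and it models a negative weight being applied while the check is failing.

```shell
#!/bin/sh
# Hypothetical helper: the priority a node ends up with, given its
# configured priority ($1), the script weight ($2, negative here) and
# the current check result ($3: "ok" or "fail").
effective_priority() {
    if [ "$3" = fail ]; then
        echo $(( $1 + $2 ))
    else
        echo "$1"
    fi
}

effective_priority 101 -5 ok     # prints 101: ha1 stays above ha2 (100)
effective_priority 101 -5 fail   # prints 96:  ha1 drops below ha2 (100)
```

Since 96 is below ha2's 100, ha2's advertisements win and the VIP moves; once the check passes again, ha1 returns to 101.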

# the instance follows

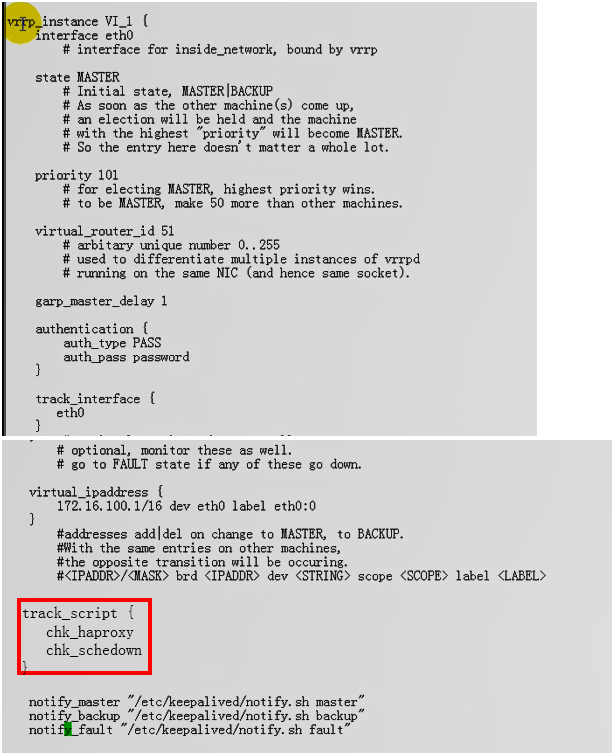

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 51

priority 101

advert_int 1

authentication {

auth_type PASS

auth_pass keepalivedpass

}

.............................

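On the second director, ha2 (192.168.0.57):

copy the script definition added to keepalived.conf above over to this node as well.

Where and when does the script run?

The script is referenced inside an instance: within a vrrp_instance, the scripts listed under track_script are run repeatedly at their configured interval.

For a failure to affect an instance, and therefore the node holding the VIP, the script has to be tracked by that instance.

track_script { # lists the scripts that this instance tracks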

chk_haproxy

chk_schedown

}

On the first director, ha1 (192.168.0.47):

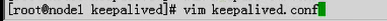

[root@ha1 keepalived]# vim keepalived.conf

.........................................................

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 51

priority 101

advert_int 1

authentication {

auth_type PASS

auth_pass keepalivedpass

}

virtual_ipaddress {

192.168.0.101/24 dev eth0 lable eth0:0 # "lable" (sic); the keyword is spelled "label"

}

# inside the instance, add the following

track_script {

chk_schedown

}

}

.........................................................
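Because a name listed under track_script only works if a vrrp_script block with that name exists in the same configuration, a quick cross-check before restarting can save a round of log reading. The function below is a rough sketch written for these notes, not a tool shipped with keepalived; `undefined_track_scripts` is a hypothetical name, and it assumes the whitespace-separated layout used in this file (block keyword first on the line, closing brace on its own line).

```shell
#!/bin/sh
# Hypothetical helper, not shipped with keepalived: print every name listed
# in a track_script block of the config file $1 that has no matching
# vrrp_script definition in the same file.
undefined_track_scripts() {
    awk '
        substr($1, 1, 1) == "#" || substr($1, 1, 1) == "!" { next }  # comment line
        $1 == "vrrp_script"   { defined[$2] = 1; next }
        $1 == "track_script"  { in_track = 1; next }
        in_track && $1 == "}" { in_track = 0; next }
        in_track && NF > 0    { used[$1] = 1 }
        END { for (n in used) if (!(n in defined)) print n }
    ' "$1"
}

# Example:
#   undefined_track_scripts /etc/keepalived/keepalived.conf
```

An empty result means every tracked name has a definition; copying the track_script block from the haproxy example without its chk_haproxy definition would be reported here.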

把上面添加的代码复制到 第二个 ha2 192.168.0.57 上

在第一个 ha1 192.168.0.47 上

在第二个 ha2 192.168.0.57 上

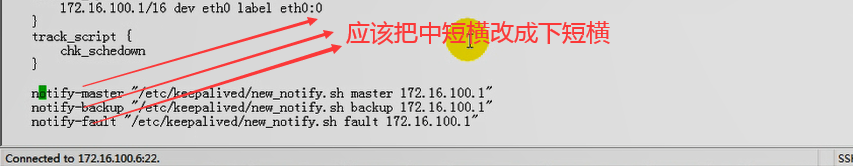

都重启 keepalived # service keepalived restart

[root@ha1 keepalived]# tail /var/log/messages #好像没有看到问题吧

Mar 3 09:55:46 ha1 Keepalived_healthcheckers: Activating healtchecker for service [192.168.0.46:80]

Mar 3 09:55:46 ha1 Keepalived_healthcheckers: Activating healtchecker for service [192.168.0.56:80]

Mar 3 09:55:47 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Transition to MASTER STATE

Mar 3 09:55:48 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Entering MASTER STATE

Mar 3 09:55:48 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) setting protocol VIPs.

Mar 3 09:55:48 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.0.101

Mar 3 09:55:48 ha1 Keepalived_healthcheckers: Netlink reflector reports IP 192.168.0.101 added

Mar 3 09:55:48 ha1 Keepalived_vrrp: Netlink reflector reports IP 192.168.0.101 added

Mar 3 09:55:48 ha1 avahi-daemon[4335]: Registering new address record for 192.168.0.101 on eth0.

Mar 3 09:55:53 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.0.101

[root@ha1 keepalived]#

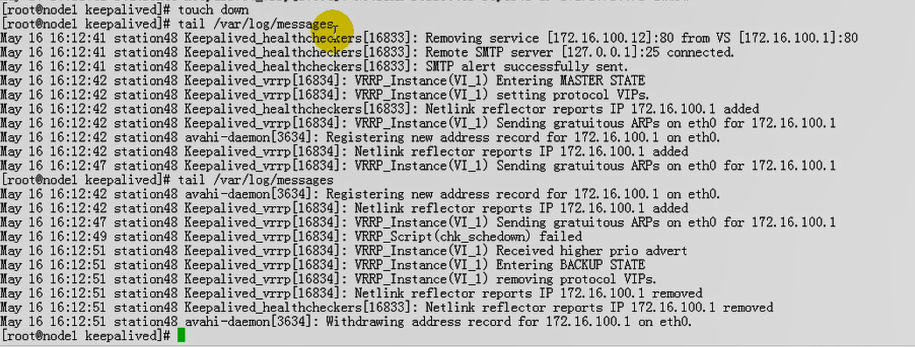

On the first director, ha1 (192.168.0.47):

[root@ha1 keepalived]# ls

keepalived.conf keepalived.conf.haproxy_example

keepalived.conf.bak notify.sh

[root@ha1 keepalived]# touch down

[root@ha1 keepalived]#

[root@ha1 keepalived]# tail -n 20 /var/log/messages # why does my output differ from Ma Ge's?

Mar 3 10:43:00 ha1 Keepalived_healthcheckers: Activating healtchecker for service [192.168.0.46:80]

Mar 3 10:43:00 ha1 Keepalived_healthcheckers: Activating healtchecker for service [192.168.0.56:80]

Mar 3 10:43:00 ha1 Keepalived: Starting Healthcheck child process, pid=5329

Mar 3 10:43:00 ha1 Keepalived_vrrp: Netlink reflector reports IP 192.168.0.47 added

Mar 3 10:43:00 ha1 Keepalived_vrrp: Registering Kernel netlink reflector

Mar 3 10:43:00 ha1 Keepalived_vrrp: Registering Kernel netlink command channel

Mar 3 10:43:00 ha1 Keepalived_vrrp: Registering gratutious ARP shared channel

Mar 3 10:43:00 ha1 Keepalived: Starting VRRP child process, pid=5330

Mar 3 10:43:00 ha1 Keepalived_vrrp: Opening file '/etc/keepalived/keepalived.conf'.

Mar 3 10:43:00 ha1 Keepalived_vrrp: Configuration is using : 37627 Bytes

Mar 3 10:43:00 ha1 Keepalived_vrrp: Using LinkWatch kernel netlink reflector...

Mar 3 10:43:01 ha1 Keepalived_vrrp: VRRP sockpool: [ifindex(2), proto(112), fd(10,11)]

Mar 3 10:43:02 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Transition to MASTER STATE

Mar 3 10:43:03 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Entering MASTER STATE

Mar 3 10:43:03 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) setting protocol VIPs.

Mar 3 10:43:03 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.0.101

Mar 3 10:43:03 ha1 Keepalived_vrrp: Netlink reflector reports IP 192.168.0.101 added

Mar 3 10:43:03 ha1 Keepalived_healthcheckers: Netlink reflector reports IP 192.168.0.101 added

Mar 3 10:43:03 ha1 avahi-daemon[4335]: Registering new address record for 192.168.0.101 on eth0. # the address came back to ha1

Mar 3 10:43:08 ha1 Keepalived_vrrp: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.0.101

[root@ha1 keepalived]#

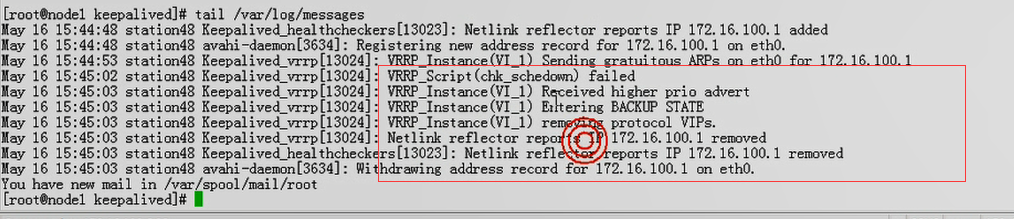

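In Ma Ge's screenshot, tail /var/log/messages shows that the chk_schedown check failed, the priority was lowered, an advertisement with a higher priority was received, the node entered BACKUP state, and the VIP was removed from it.

[root@ha1 keepalived]# ifconfig # the VIP 192.168.0.101 is not shown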

eth0 Link encap:Ethernet HWaddr 00:0C:29:68:EE:69

inet addr:192.168.0.47 Bcast:192.168.0.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe68:ee69/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:13614 errors:0 dropped:0 overruns:0 frame:0

TX packets:2233 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:1497529 (1.4 MiB) TX bytes:224270 (219.0 KiB)

Interrupt:67 Base address:0x2000

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:241 errors:0 dropped:0 overruns:0 frame:0

TX packets:241 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:23962 (23.4 KiB) TX bytes:23962 (23.4 KiB)

[root@ha1 keepalived]# ip addr show # VIP 192.168.0.101 is still shown

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast qlen 1000

link/ether 00:0c:29:68:ee:69 brd ff:ff:ff:ff:ff:ff

inet 192.168.0.47/24 brd 192.168.0.255 scope global eth0

inet 192.168.0.101/24 scope global secondary eth0 # why is 192.168.0.101 still here?

inet6 fe80::20c:29ff:fe68:ee69/64 scope link

valid_lft forever preferred_lft forever

3: sit0: <NOARP> mtu 1480 qdisc noop

link/sit 0.0.0.0 brd 0.0.0.0

[root@ha1 keepalived]#
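
A note on the two commands above: ifconfig generally lists only the primary address and labelled aliases (eth0:0 style), so a VIP that keepalived adds without a label does not appear there either way, and ip addr show is the more reliable check. The sketch below wraps that check in a small function; the name has_vip is an assumption made for this example, not something from the course.

```shell
#!/bin/sh
# has_vip VIP: read `ip addr show` output on stdin and succeed
# if VIP appears as an IPv4 address on any interface.
# (has_vip is a hypothetical helper name used only in this sketch.)
has_vip() {
    vip=$1
    # Match "inet <vip>/<prefix>"; -F keeps the dots literal, and the
    # trailing "/" stops 192.168.0.10 from matching 192.168.0.101.
    grep -qF "inet ${vip}/"
}

# Usage on a live node (interface and VIP taken from the notes above):
#   ip addr show dev eth0 | has_vip 192.168.0.101 && echo "VIP present"
```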

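On Ma Ge's machine the VIP is no longer visible at this point.

Could it be that the keepalived versions differ? Better to play it safe and install keepalived-1.2.7.

First uninstall the previously installed keepalived-1.1.20.

On the first node, ha1 (192.168.0.47)

On the second node, ha2 (192.168.0.57)

# yum remove keepalived

On the first node, ha1 (192.168.0.47)

On the second node, ha2 (192.168.0.57)

See the last part of /node-admin/15818 and install 1.2.7.

With 1.2.7 installed it still did not seem to work.

On ha1 (192.168.0.47), after running # touch down

After checking repeatedly, I found the cause in the script line above, script "[ -e /etc/keepalived/down ] && exit 1 || exit 0": I had left out the space between [ and -e. The shell then treats [-e as an unknown command, which fails, so && exit 1 is skipped and || exit 0 always runs; the check therefore never reported a failure. After adding the space, the behavior matches what Ma Ge showed.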
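
To see why the missing space hides the failure, here is a small sketch that runs both forms of the check and reports their exit status. It uses a temporary file as a stand-in for /etc/keepalived/down, and the function names are assumptions made for the demo.

```shell
#!/bin/sh
# Temporary stand-in for /etc/keepalived/down (assumption for this demo).
flag=$(mktemp)

# Broken form: "[-e" is looked up as a command name and not found
# (status 127), so "&& exit 1" is skipped and "|| exit 0" always runs.
broken_check() {
    ( [-e "$flag" ] && exit 1 || exit 0 ) 2>/dev/null
}

# Fixed form: "[" is the test command and "-e" is its first argument.
fixed_check() {
    ( [ -e "$flag" ] && exit 1 || exit 0 )
}

# report LABEL COMMAND: run COMMAND and print its exit status.
report() {
    label=$1; shift
    if "$@"; then echo "$label: exit 0"; else echo "$label: exit $?"; fi
}

report "broken, flag present" broken_check
report "fixed, flag present" fixed_check
rm -f "$flag"
report "fixed, flag removed" fixed_check
```

The broken form reports exit 0 even while the flag file exists, which is why keepalived never lowered the priority; the fixed form reports exit 1 while the file exists and exit 0 once it is removed.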

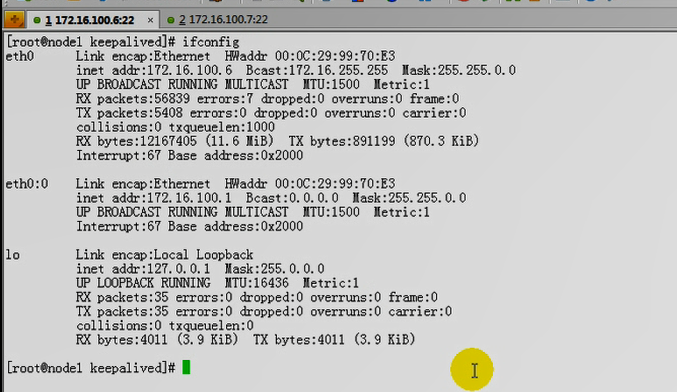

[root@ha1 keepalived]# ifconfig # 192.168.0.101 is absent, which is the expected result

eth0 Link encap:Ethernet HWaddr 00:0C:29:68:EE:69

inet addr:192.168.0.47 Bcast:192.168.0.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe68:ee69/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:5894 errors:0 dropped:0 overruns:0 frame:0

TX packets:4565 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:609476 (595.1 KiB) TX bytes:426532 (416.5 KiB)

Interrupt:67 Base address:0x2000

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:216 errors:0 dropped:0 overruns:0 frame:0

TX packets:216 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:17486 (17.0 KiB) TX bytes:17486 (17.0 KiB)

[root@ha1 keepalived]# ip addr show # 192.168.0.101 is absent here too, which confirms the expected result

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast qlen 1000

link/ether 00:0c:29:68:ee:69 brd ff:ff:ff:ff:ff:ff

inet 192.168.0.47/24 brd 192.168.0.255 scope global eth0

inet6 fe80::20c:29ff:fe68:ee69/64 scope link

valid_lft forever preferred_lft forever

3: sit0: <NOARP> mtu 1480 qdisc noop

link/sit 0.0.0.0 brd 0.0.0.0

[root@ha1 keepalived]#

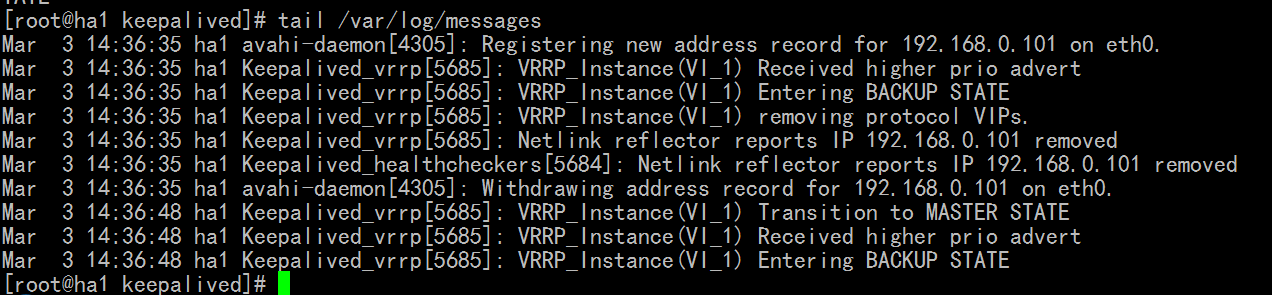

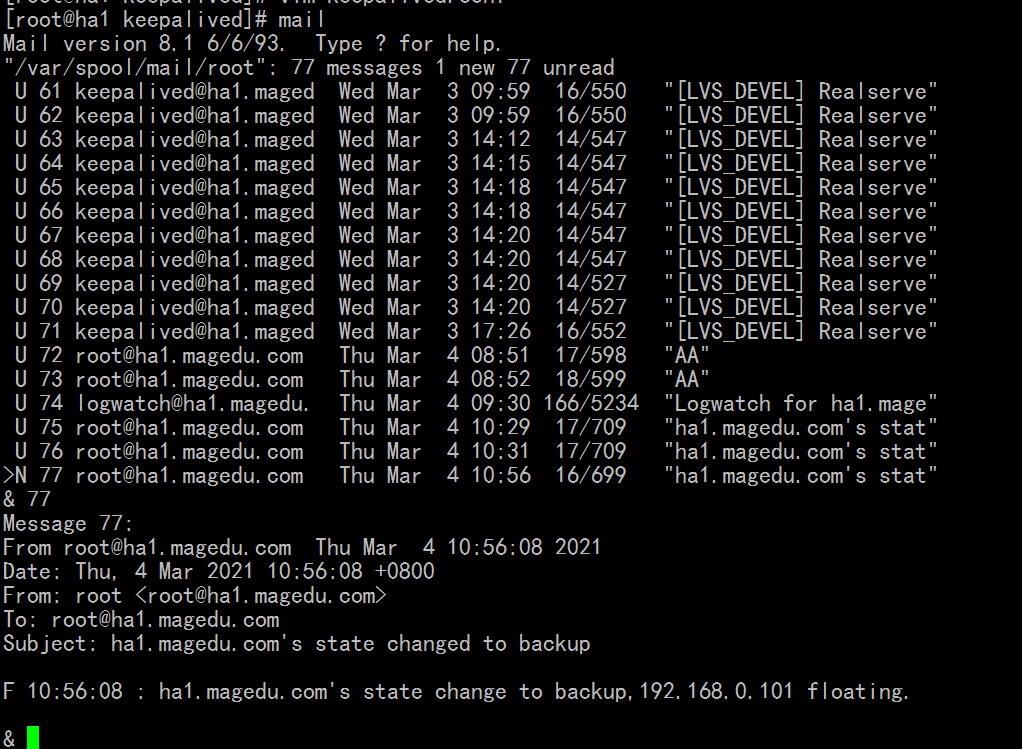

On the second node, ha2 (192.168.0.57)

[root@ha2 keepalived]# tail -30 /var/log/messages

Mar 3 14:36:47 ha2 Keepalived_vrrp[4546]: Registering Kernel netlink command channel

Mar 3 14:36:47 ha2 Keepalived_healthcheckers[4545]: Using LinkWatch kernel netlink reflector...

Mar 3 14:36:47 ha2 Keepalived_vrrp[4546]: Registering gratuitous ARP shared channel

Mar 3 14:36:47 ha2 Keepalived_healthcheckers[4545]: Activating healthchecker for service [192.168.0.46]:80

Mar 3 14:36:47 ha2 Keepalived_healthcheckers[4545]: Activating healthchecker for service [192.168.0.56]:80

Mar 3 14:36:47 ha2 Keepalived_vrrp[4546]: Opening file '/etc/keepalived/keepalived.conf'.

Mar 3 14:36:47 ha2 Keepalived_vrrp[4546]: Truncating auth_pass to 8 characters

Mar 3 14:36:47 ha2 Keepalived_vrrp[4546]: Configuration is using : 38534 Bytes

Mar 3 14:36:47 ha2 Keepalived_vrrp[4546]: Using LinkWatch kernel netlink reflector...

Mar 3 14:36:47 ha2 Keepalived_vrrp[4546]: VRRP_Instance(VI_1) Entering BACKUP STATE # initially in BACKUP state

Mar 3 14:36:47 ha2 Keepalived_vrrp[4546]: VRRP sockpool: [ifindex(2), proto(112), fd(11,12)]

Mar 3 14:36:48 ha2 Keepalived_vrrp[4546]: VRRP_Instance(VI_1) forcing a new MASTER election # a new MASTER election is triggered

Mar 3 14:36:49 ha2 Keepalived_vrrp[4546]: VRRP_Instance(VI_1) Transition to MASTER STATE

Mar 3 14:36:50 ha2 Keepalived_vrrp[4546]: VRRP_Instance(VI_1) Entering MASTER STATE # enters MASTER state

Mar 3 14:36:50 ha2 Keepalived_vrrp[4546]: VRRP_Instance(VI_1) setting protocol VIPs. # sets the VIP

Mar 3 14:36:50 ha2 Keepalived_vrrp[4546]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.0.101

Mar 3 14:36:50 ha2 avahi-daemon[4311]: Registering new address record for 192.168.0.101 on eth0. # the VIP is registered

Mar 3 14:36:50 ha2 Keepalived_vrrp[4546]: Netlink reflector reports IP 192.168.0.101 added # the VIP is now active

Mar 3 14:36:50 ha2 Keepalived_healthcheckers[4545]: Netlink reflector reports IP 192.168.0.101 added

Mar 3 14:36:55 ha2 Keepalived_vrrp[4546]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.0.101

Mar 3 14:40:23 ha2 ntpd[3947]: time reset +1.302671 s

Mar 3 14:44:09 ha2 ntpd[3947]: synchronized to LOCAL(0), stratum 8

Mar 3 14:46:17 ha2 ntpd[3947]: synchronized to 207.34.49.172, stratum 2

Mar 3 14:48:54 ha2 ntpd[3947]: synchronized to 202.118.1.130, stratum 1

Mar 3 14:54:18 ha2 ntpd[3947]: synchronized to LOCAL(0), stratum 8

Mar 3 14:57:31 ha2 ntpd[3947]: synchronized to 202.118.1.130, stratum 1

Mar 3 15:02:35 ha2 avahi-daemon[4311]: Invalid query packet.

Mar 3 15:03:21 ha2 last message repeated 14 times

Mar 3 15:03:34 ha2 last message repeated 7 times

Mar 3 15:12:32 ha2 ntpd[3947]: synchronized to 207.34.49.172, stratum 2

[root@ha2 keepalived]#
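
The failover sequence is easy to lose among the ntpd and avahi lines. As a rough sketch, the filter below keeps only the VRRP state changes and VIP add/remove lines from syslog-style input; the function name vrrp_events is an assumption, and the patterns are based on the message formats visible in the log above.

```shell
#!/bin/sh
# vrrp_events: read syslog lines on stdin and print only VRRP state
# changes and VIP add/remove events, as "HH:MM:SS  message".
# (vrrp_events is a hypothetical helper name used only in this sketch.)
vrrp_events() {
    grep -E 'Keepalived_vrrp.*(Entering (MASTER|BACKUP|FAULT) STATE|Transition to MASTER STATE|reports IP .* (added|removed))' |
        # Drop the date, host name and "process[pid]: " prefix.
        sed -E 's/^[A-Za-z]+ +[0-9]+ ([0-9:]+) [^ ]+ [^:]+: /\1  /'
}

# Usage on a live node:
#   tail -100 /var/log/messages | vrrp_events
```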

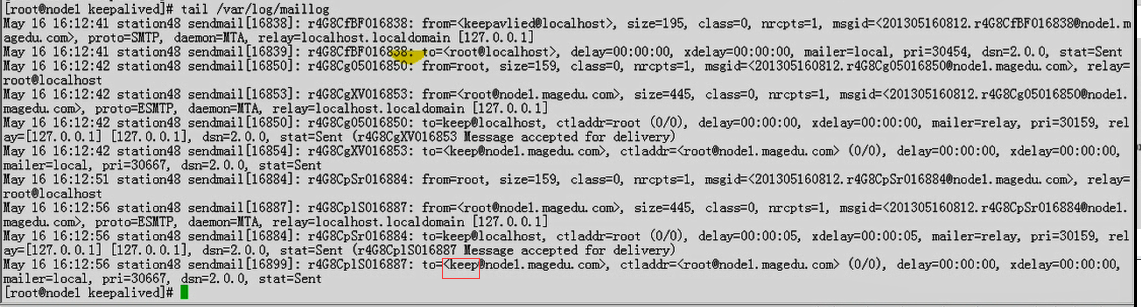

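The VRRP process sends gratuitous ARPs: the node asks and answers its own ARP query, which announces to the network which MAC address now holds the VIP.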

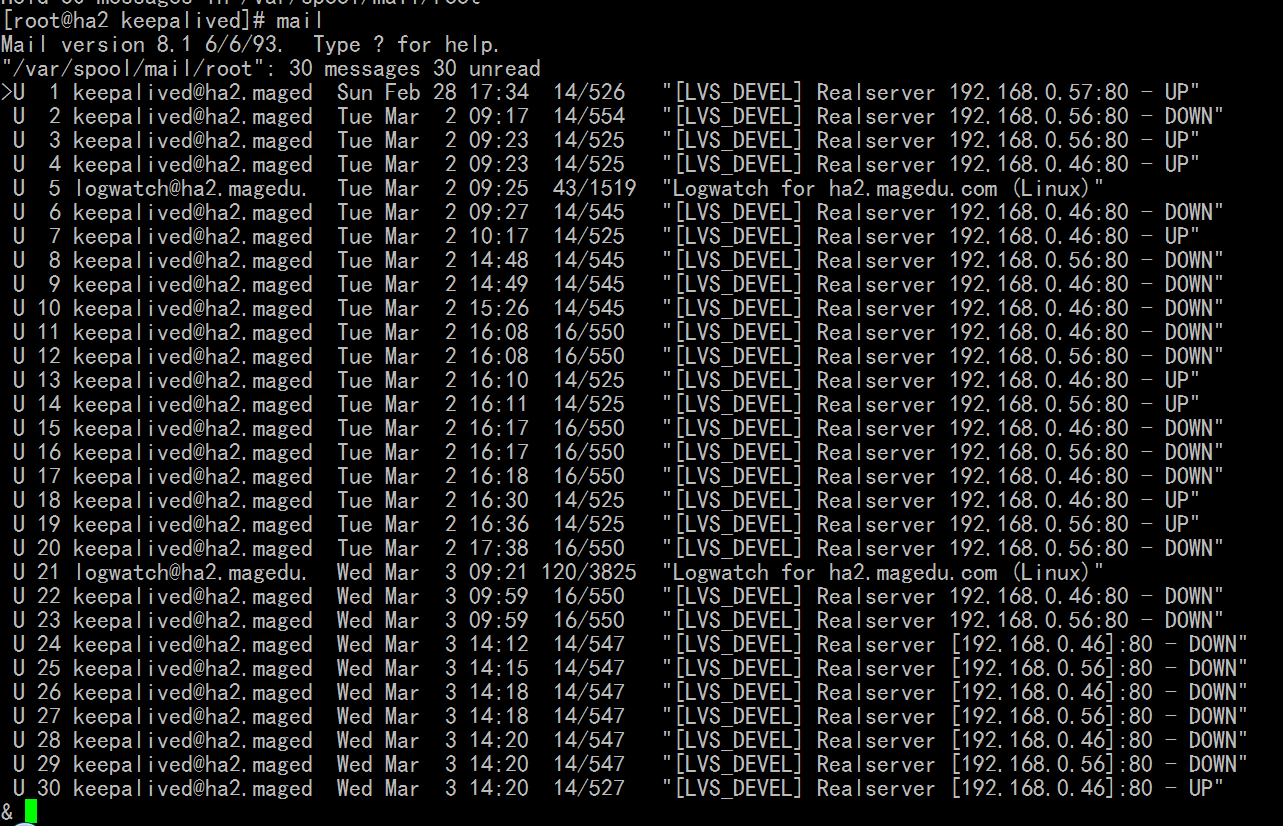

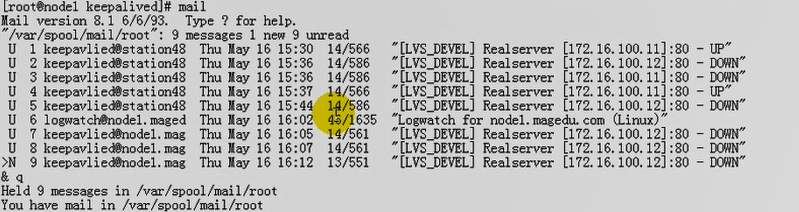

[root@ha2 keepalived]# mail # the mailbox holds so many messages that the relevant ones are hard to find

Mail version 8.1 6/6/93. Type ? for help.

"/var/spool/mail/root": 83 messages 83 unread

>U 1 logwatch@localhost.l Fri Jul 12 11:10 43/1599 "Logwatch for localhost.localdomain (Linux)"

U 2 logwatch@localhost.l Wed Dec 9 04:02 56/1853 "Logwatch for localhost.localdomain (Linux)"

U 3 logwatch@localhost.l Fri Dec 11 09:41 43/1599 "Logwatch for localhost.localdomain (Linux)"

U 4 logwatch@192.168.0.7 Tue Dec 22 10:32 43/1500 "Logwatch for 192.168.0.75 (Linux)"

U 5 logwatch@192.168.0.7 Sun Dec 27 10:37 43/1500 "Logwatch for 192.168.0.75 (Linux)"

U 6 logwatch@192.168.0.7 Wed Dec 30 16:21 72/2265 "Logwatch for 192.168.0.75 (Linux)"

U 7 logwatch@192.168.0.1 Fri Jan 15 17:02 58/1834 "Logwatch for 192.168.0.148 (Linux)"

U 8 MAILER-DAEMON@steppi Mon Jan 18 12:37 174/5585 "Warning: could not send message for past 4 hours"

U 9 logwatch@steppingsto Mon Jan 18 12:37 125/3824 "Logwatch for steppingstone (Linux)"

U 10 logwatch@steppingsto Mon Jan 18 13:42 43/1632 "Logwatch for steppingstone.magedu.com (Linux)"

U 11 logwatch@steppingsto Tue Jan 19 09:37 104/3372 "Logwatch for steppingstone.magedu.com (Linux)"

U 12 logwatch@steppingsto Wed Jan 20 11:08 104/3373 "Logwatch for steppingstone.magedu.com (Linux)"

U 13 logwatch@steppingsto Thu Jan 21 11:09 104/3373 "Logwatch for steppingstone.magedu.com (Linux)"

U 14 logwatch@steppingsto Fri Jan 22 09:34 104/3373 "Logwatch for steppingstone.magedu.com (Linux)"

U 15 logwatch@steppingsto Sat Jan 23 09:24 104/3373 "Logwatch for steppingstone.magedu.com (Linux)"

U 16 logwatch@steppingsto Mon Jan 25 09:25 43/1632 "Logwatch for steppingstone.magedu.com (Linux)"

U 17 logwatch@steppingsto Tue Jan 26 10:11 104/3409 "Logwatch for steppingstone.magedu.com (Linux)"

U 18 logwatch@steppingsto Wed Jan 27 13:25 136/4448 "Logwatch for steppingstone.magedu.com (Linux)"

U 19 logwatch@steppingsto Thu Jan 28 09:52 107/3547 "Logwatch for steppingstone.magedu.com (Linux)"

U 20 logwatch@steppingsto Fri Jan 29 10:04 120/3824 "Logwatch for steppingstone.magedu.com (Linux)"

U 21 MAILER-DAEMON@steppi Sun Jan 31 09:02 156/5369 "Warning: could not send message for past 4 hours"

U 22 logwatch@steppingsto Sun Jan 31 09:02 107/3545 "Logwatch for steppingstone.magedu.com (Linux)"

U 23 logwatch@steppingsto Sun Jan 31 10:28 110/4011 "Logwatch for steppingstone.magedu.com (Linux)"

U 24 logwatch@steppingsto Sat Feb 20 09:23 43/1632 "Logwatch for steppingstone.magedu.com (Linux)"

U 25 logwatch@steppingsto Sun Feb 21 09:11 93/3250 "Logwatch for steppingstone.magedu.com (Linux)"

U 26 logwatch@steppingsto Mon Feb 22 09:17 120/3824 "Logwatch for steppingstone.magedu.com (Linux)"

U 27 logwatch@steppingsto Wed Feb 24 09:25 57/2007 "Logwatch for steppingstone.magedu.com (Linux)"

U 28 logwatch@steppingsto Thu Feb 25 09:26 110/3727 "Logwatch for steppingstone.magedu.com (Linux)"

U 29 logwatch@steppingsto Fri Feb 26 10:07 110/3723 "Logwatch for steppingstone.magedu.com (Linux)"

U 30 logwatch@rs3.magedu. Sat Feb 27 11:17 110/3612 "Logwatch for rs3.magedu.com (Linux)"

U 31 logwatch@ha2.magedu. Sun Feb 28 09:34 176/6891 "Logwatch for ha2.magedu.com (Linux)"

&

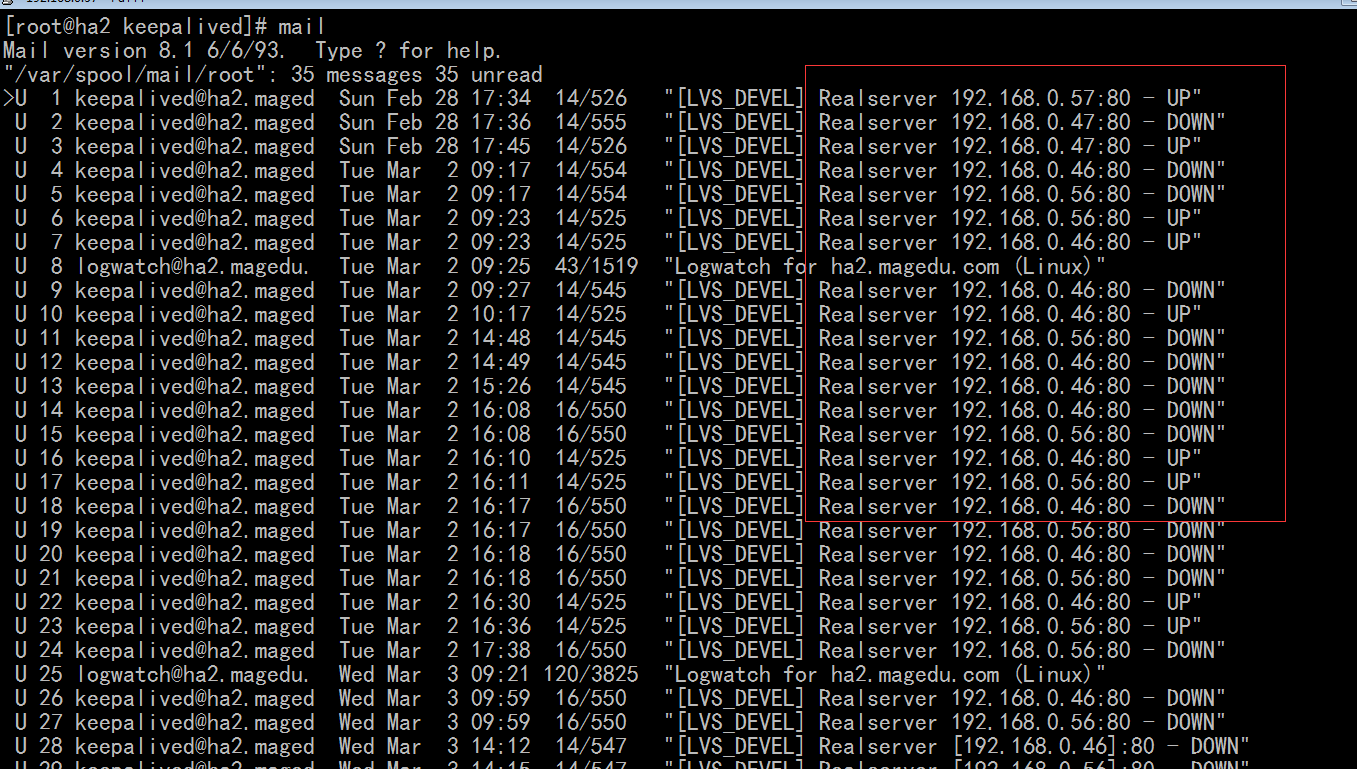

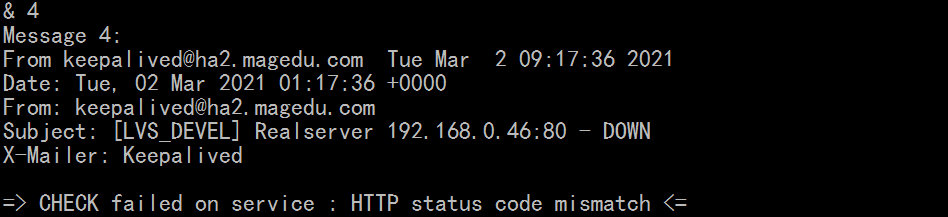

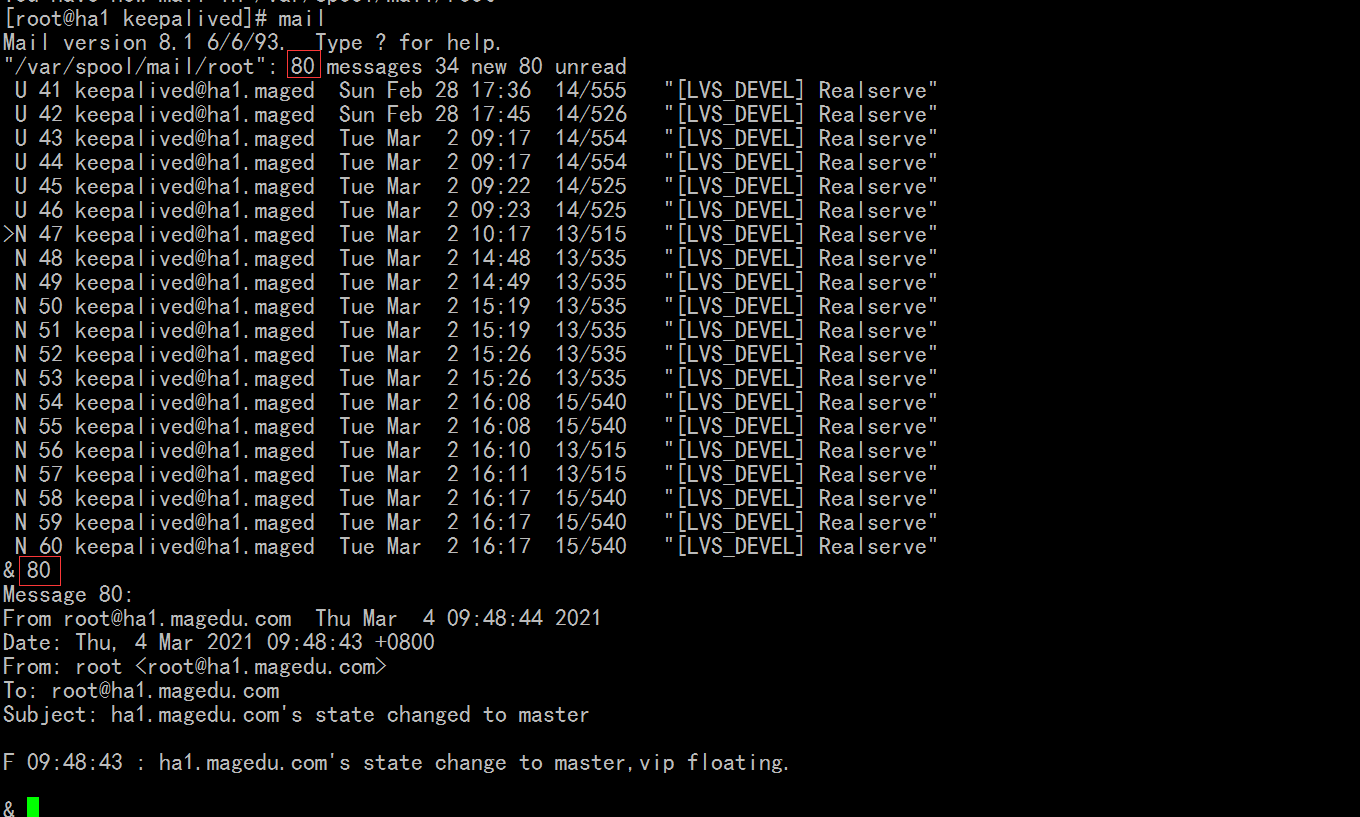

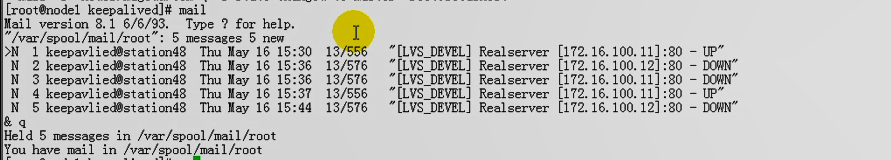

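Every real server (RS) up/down event is recorded. ha1 and ha2 presumably run the fallback web service, so they are treated as real servers as well.

The mail only reports whether a real server has a problem.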

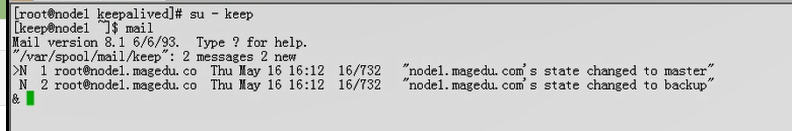

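Master/backup switchovers, however, are not reported by # mail here.

A real server going offline or coming back online deserves attention, but far less than the state of the two front-end HA nodes.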
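
One common way to get mail on master/backup switchovers is a notify script called from keepalived's notify_master, notify_backup and notify_fault directives. The sketch below is only an illustration under assumptions: the recipient address, the function names and the script path mentioned afterwards are hypothetical, and the VIP is the one used in these notes.

```shell
#!/bin/sh
# Sketch of a notify script. The VIP comes from the notes above; the
# recipient and function names are assumptions for this example.
vip=192.168.0.101
contact=root@localhost

# build_subject STATE: compose the mail subject for a state change.
build_subject() {
    echo "$(uname -n) changed to $1, VIP $vip"
}

# notify STATE: mail a one-line report.
# MAILER can be overridden, e.g. for a dry run without a mail system.
notify() {
    subject=$(build_subject "$1")
    echo "$(date '+%F %T'): $subject" | ${MAILER:-mail} -s "$subject" "$contact"
}

case "$1" in
    master|backup|fault) notify "$1" ;;
    "") ;;  # no argument: only define the functions
    *) echo "Usage: $0 {master|backup|fault}" >&2; exit 1 ;;
esac
```

Saved as, say, /etc/keepalived/notify.sh (a hypothetical path) and made executable, it could be referenced inside the vrrp_instance block with lines such as notify_master "/etc/keepalived/notify.sh master", and likewise for backup and fault.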

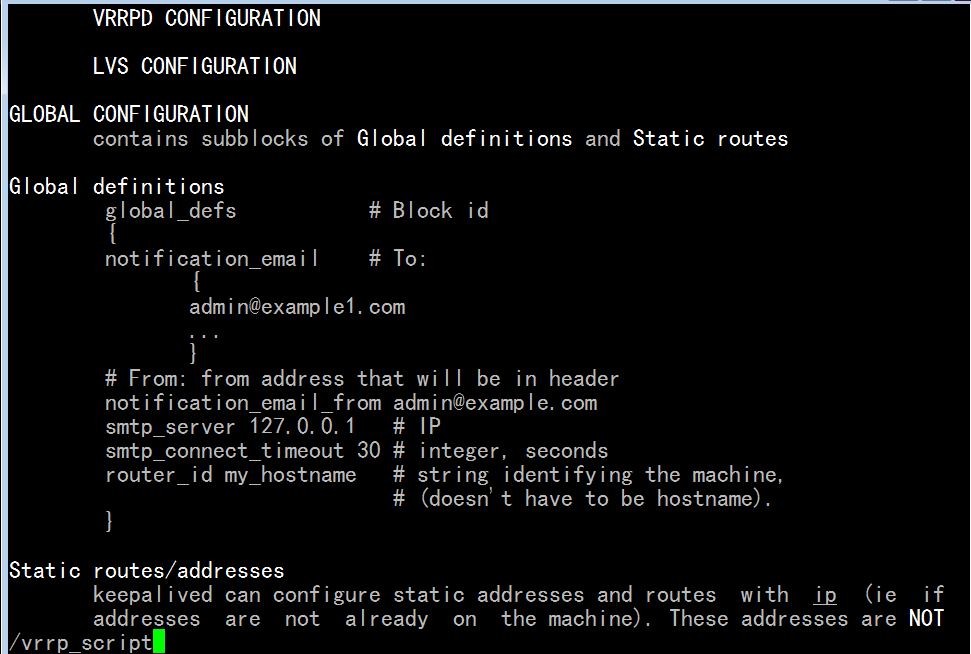

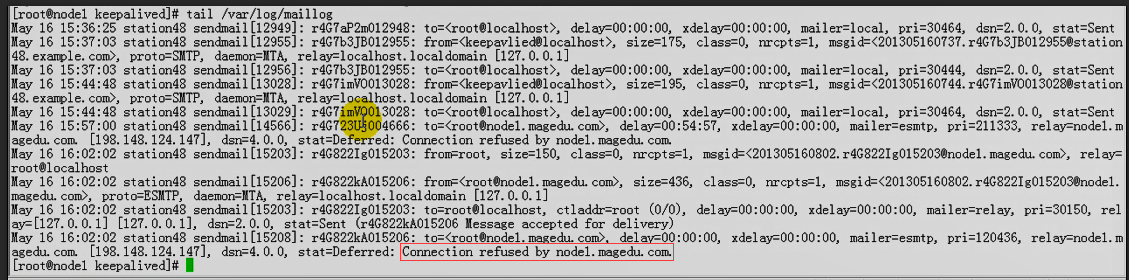

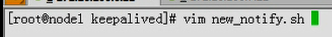

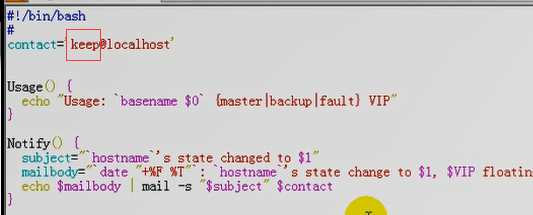

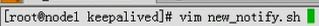

[root@ha1 keepalived]# man -M /usr/local/keepalived/share/man keepalived.conf

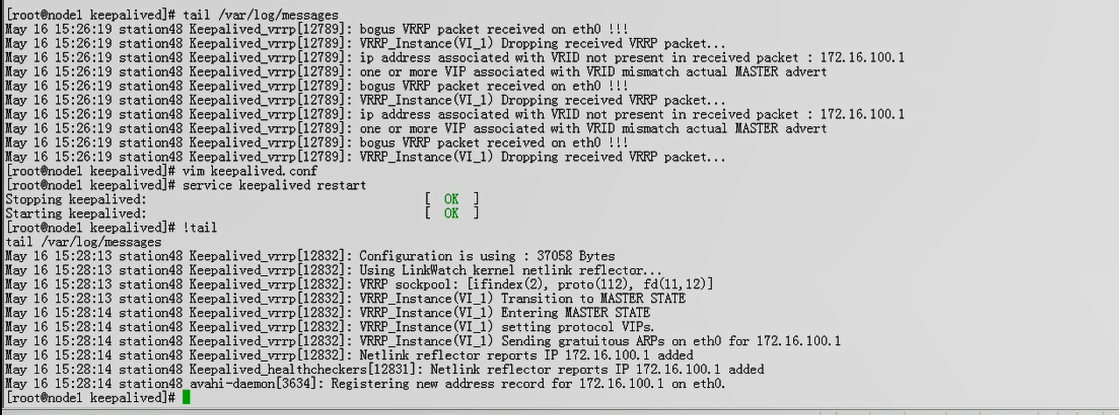

..........................................................................................................