
Mage (马哥) 47_02: Tomcat series: load balancing requests from Apache to Tomcat, and using DeltaManager (very useful)

On tomcat2 (192.168.0.3):

[root@rs3 ~]# scp 192.168.10.2:/root/apache-tomcat-7.0.40.tar.gz ./ # copy the Tomcat tarball

root@192.168.10.2's password:

apache-tomcat-7.0.40.tar.gz 100% 7660KB 7.5MB/s 00:01

[root@rs3 ~]# ls

aaa.txt jdk-6u21-linux-i586-rpm.bin

anaconda-ks.cfg kmod-drbd83-8.3.15-3.el5.centos.i686.rpm

apache-tomcat-7.0.40.tar.gz sun-javadb-client-10.5.3-0.2.i386.rpm

Desktop sun-javadb-common-10.5.3-0.2.i386.rpm

drbd83-8.3.15-2.el5.centos.i386.rpm sun-javadb-core-10.5.3-0.2.i386.rpm

install.log sun-javadb-demo-10.5.3-0.2.i386.rpm

install.log.syslog sun-javadb-docs-10.5.3-0.2.i386.rpm

iscsid.conf sun-javadb-javadoc-10.5.3-0.2.i386.rpm

iscsi.sed test

jdk-6u21-linux-i586.rpm

[root@rs3 ~]#

[root@rs3 ~]# scp 192.168.10.2:/etc/profile.d/java.sh /etc/profile.d # copy the Java environment-variable script

root@192.168.10.2's password:

java.sh 100% 67 0.1KB/s 00:00

[root@rs3 ~]# scp 192.168.10.2:/etc/profile.d/tomcat.sh /etc/profile.d # copy the Tomcat environment-variable script

root@192.168.10.2's password:

tomcat.sh 100% 76 0.1KB/s 00:00

[root@rs3 ~]#

Log out of the PuTTY session on tomcat2 (192.168.0.3) and log back in; the environment variables should then take effect.

[root@rs3 ~]# java -version

java version "1.6.0_21"

Java(TM) SE Runtime Environment (build 1.6.0_21-b06)

Java HotSpot(TM) Client VM (build 17.0-b16, mixed mode, sharing)

[root@rs3 ~]#

[root@rs3 ~]# ls

aaa.txt jdk-6u21-linux-i586-rpm.bin

anaconda-ks.cfg kmod-drbd83-8.3.15-3.el5.centos.i686.rpm

apache-tomcat-7.0.40.tar.gz sun-javadb-client-10.5.3-0.2.i386.rpm

Desktop sun-javadb-common-10.5.3-0.2.i386.rpm

drbd83-8.3.15-2.el5.centos.i386.rpm sun-javadb-core-10.5.3-0.2.i386.rpm

install.log sun-javadb-demo-10.5.3-0.2.i386.rpm

install.log.syslog sun-javadb-docs-10.5.3-0.2.i386.rpm

iscsid.conf sun-javadb-javadoc-10.5.3-0.2.i386.rpm

iscsi.sed test

jdk-6u21-linux-i586.rpm

[root@rs3 ~]#

[root@rs3 ~]# tar xf apache-tomcat-7.0.40.tar.gz -C /usr/local

[root@rs3 ~]# cd /usr/local

[root@rs3 local]# ln -sv apache-tomcat-7.0.40 tomcat

create symbolic link `tomcat' to `apache-tomcat-7.0.40'

[root@rs3 local]#

[root@rs3 local]# scp 192.168.10.2:/usr/local/tomcat/conf/server.xml /usr/local/tomcat/conf/ # copy server.xml over

root@192.168.10.2's password:

server.xml 100% 6640 6.5KB/s 00:00

[root@rs3 local]#

[root@rs3 local]# cd tomcat/conf/

[root@rs3 conf]# pwd

/usr/local/tomcat/conf

[root@rs3 conf]# vim server.xml

...............................................................................................

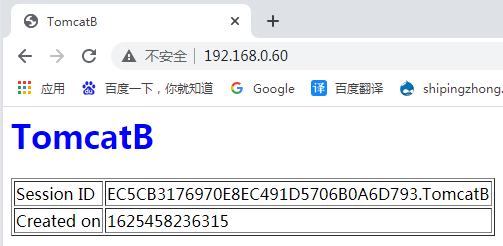

<!-- jvmRoute="TomcatB" matters when several Tomcats form a cluster; jvmRoute is required in that case -->

<Engine name="Catalina" defaultHost="www.magedu.com" jvmRoute="TomcatB" >

...............................................................................................

[root@rs3 conf]# mkdir -pv /web/webapps

mkdir: created directory `/web/webapps'

[root@rs3 conf]#

[root@rs3 conf]# scp 192.168.10.2:/web/webapps/index.jsp /web/webapps

root@192.168.10.2's password:

index.jsp 100% 434 0.4KB/s 00:00

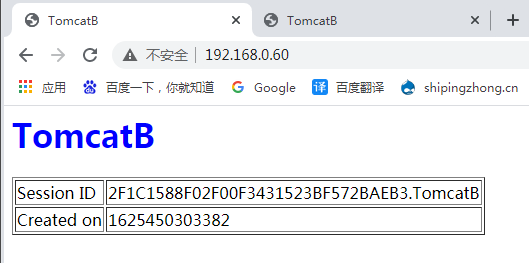

[root@rs3 conf]# vim /web/webapps/index.jsp

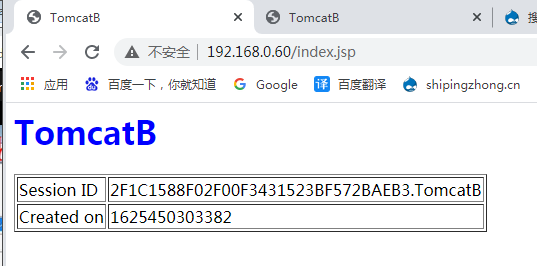

<%@ page language="java" %>

<html>

<head><title>TomcatB</title></head>

<body>

<h1><font color="blue">TomcatB </font></h1>

<table align="center" border="1">

<tr>

<td>Session ID</td>

<% session.setAttribute("abc","abc"); %>

<td><%= session.getId() %></td>

</tr>

<tr>

<td>Created on</td>

<td><%= session.getCreationTime() %></td>

</tr>

</table>

</body>

</html>

[root@rs3 conf]# catalina.sh configtest # test the configuration

Using CATALINA_BASE: /usr/local/tomcat

Using CATALINA_HOME: /usr/local/tomcat

Using CATALINA_TMPDIR: /usr/local/tomcat/temp

Using JRE_HOME: /usr/java/latest

Using CLASSPATH: /usr/local/tomcat/bin/bootstrap.jar:/usr/local/tomcat/bin/tomcat-juli.jar

2021-7-3 9:12:12 org.apache.catalina.core.AprLifecycleListener init

INFO: The APR based Apache Tomcat Native library which allows optimal performance in production environments was not found on the java.library.path: /usr/java/jdk1.6.0_21/jre/lib/i386/client:/usr/java/jdk1.6.0_21/jre/lib/i386:/usr/java/jdk1.6.0_21/jre/../lib/i386:/usr/java/packages/lib/i386:/lib:/usr/lib

2021-7-3 9:12:13 org.apache.coyote.AbstractProtocol init

INFO: Initializing ProtocolHandler ["http-bio-8080"]

2021-7-3 9:12:13 org.apache.coyote.AbstractProtocol init

INFO: Initializing ProtocolHandler ["ajp-bio-8009"]

2021-7-3 9:12:13 org.apache.catalina.startup.Catalina load

INFO: Initialization processed in 924 ms

[root@rs3 conf]#

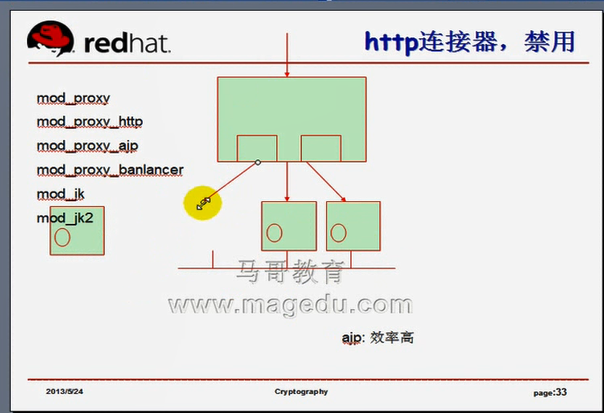

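The mod_proxy module

Notes on the Apache directives used above:

ProxyPreserveHost {On|Off}: When enabled, the proxy passes the Host: header of the client request through to the backend server instead of using the server address given in ProxyPass. Turn it on if the backend relies on name-based virtual hosts behind the reverse proxy; otherwise leave it off.

ProxyVia {On|Off|Full|Block}: Controls the Via: header, which is mainly used to trace the flow of proxied requests through a chain of proxies. The default is Off, meaning no special handling; On adds a Via: line to every request and response; Full additionally puts the Apache httpd version into each Via: line; Block removes all Via: lines from proxied requests.

ProxyRequests {On|Off}: Enables or disables Apache's forward proxy. To forward-proxy HTTP, the mod_proxy_http module must also be loaded. When ProxyPass is configured for reverse proxying, set ProxyRequests to Off.

ProxyPass [path] !|url [key=value [key=value ...]]: Maps a URL on a backend server into the URL space of the local server. path is a virtual path on the local server and url is the URL on the backend. Set ProxyRequests to Off when using this directive. If path ends with "/", url must end with "/" as well, and vice versa. Keep the front-end and back-end paths the same; for example, in ProxyPass /forum http://192.168.1.88/forum both sides end in /forum.

In addition, since httpd 2.1 mod_proxy supports pooling connections to backend servers: connections are created on demand and can be kept in the pool for reuse. The pool size and other settings are given as key=value pairs on ProxyPass. Commonly used keys:

◇ min: Minimum size of the connection pool. It is unrelated to the actual number of connections and only sets the minimum space the pool initializes.

◇ max: Maximum size of the connection pool. Each httpd child process has its own pool, and the default depends on the MPM: it is always 1 for prefork, and for the others it is the value of the ThreadsPerChild directive.

◇ loadfactor: Used in load-balancing configurations to set the weight of a backend server; the range is 1-100.

◇ retry: How long, in seconds, Apache waits before sending requests again to a backend server that has been marked as being in error.

If the Proxy target starts with balancer://, i.e. it is a load-balancing cluster, some extra parameters are accepted:

◇ lbmethod: The scheduling method Apache uses for load balancing. The default, byrequests, is weighted round robin based on request counts; bytraffic schedules by weighted traffic (bytes transferred); bybusyness schedules by the current load (outstanding requests) of each backend.

◇ maxattempts: Maximum number of failover attempts before the request is given up. By default it is one less than the number of workers (1 with a single worker), and it should not exceed the total number of nodes.

◇ nofailover: On or Off. When On, a user's session breaks if its backend server fails; set it to On when the backend servers do not support session replication.

◇ stickysession: Name of the balancer's sticky session. Depending on the language of the web application it is typically JSESSIONID or PHPSESSIONID.

Besides being given on the balancer:// URL in ProxyPass, these parameters can also be set directly with the ProxySet directive, for example: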

<Proxy balancer://hotcluster>

BalancerMember http://www1.magedu.com:8080 loadfactor=1

BalancerMember http://www2.magedu.com:8080 loadfactor=2

ProxySet lbmethod=bytraffic

</Proxy>
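The weighting behind lbmethod=byrequests can be illustrated with a small model. This is a sketch of the scheme described in the httpd documentation for mod_lbmethod_byrequests, not the module's actual code; the `Worker` class and the function names are made up for illustration, and the real balancer also skips workers that are disabled or in error.

```python
from dataclasses import dataclass


@dataclass
class Worker:
    name: str          # illustrative label, not an httpd identifier
    lbfactor: int      # corresponds to loadfactor=N on BalancerMember
    lbstatus: int = 0  # running "urgency" counter kept per worker


def pick_byrequests(workers):
    """Make one scheduling decision and return the chosen worker's name."""
    total = sum(w.lbfactor for w in workers)
    candidate = None
    for w in workers:
        # Every worker becomes more urgent in proportion to its weight.
        w.lbstatus += w.lbfactor
        if candidate is None or w.lbstatus > candidate.lbstatus:
            candidate = w
    # The winner pays back the total weight, so the others catch up.
    candidate.lbstatus -= total
    return candidate.name


def schedule(workers, n):
    """Return the names chosen for n consecutive requests."""
    return [pick_byrequests(workers) for _ in range(n)]


if __name__ == "__main__":
    cluster = [Worker("www1", 1), Worker("www2", 2)]
    print(schedule(cluster, 6))
```

With loadfactor 1 and 2, as in the hotcluster example, the second member is chosen for two of every three requests.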

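ProxyPassReverse: Makes Apache rewrite the URLs in the Location, Content-Location and URI headers of HTTP redirect responses. In a reverse-proxy setup this directive is needed so that redirects do not bypass the proxy server.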

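The mod_proxy module

Put the following in the global section of httpd.conf:

This mirrors nginx, where upstream is defined outside server: the balancer is defined outside VirtualHost.

# ProxyRequests and the balancer are both defined globally

ProxyRequests Off

<proxy balancer://lbcluster1>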

BalancerMember ajp://172.16.100.1:8009 loadfactor=10 route=TomcatA

BalancerMember ajp://172.16.100.2:8009 loadfactor=10 route=TomcatB

</proxy>

<VirtualHost *:80>

ServerAdmin admin@magedu.com

ServerName www.magedu.com

ProxyPass / balancer://lbcluster1/ stickysession=jsessionid

ProxyPassReverse / balancer://lbcluster1/

</VirtualHost>

On the front-end Apache (192.168.0.60; second NIC 192.168.10.10):

[root@localhost ~]# vim /etc/httpd/httpd.conf

...............................................................................................

Include /etc/httpd/extra/httpd-proxy.conf

#Include /etc/httpd/extra/httpd-jk.conf

...............................................................................................

[root@localhost ~]# cp /etc/httpd/extra/httpd-proxy.conf /etc/httpd/extra/httpd-proxy.conf.bak

[root@localhost ~]#

[root@localhost ~]# vim /etc/httpd/extra/httpd-proxy.conf

ProxyRequests Off

<proxy balancer://lbcluster1>

BalancerMember ajp://192.168.10.2:8009 loadfactor=1 route=TomcatA

BalancerMember ajp://192.168.10.3:8009 loadfactor=1 route=TomcatB

</proxy>

<VirtualHost *:80>

ServerName www.magedu.com

ProxyVia On

ProxyPass / balancer://lbcluster1/

ProxyPassReverse / balancer://lbcluster1/

<Proxy *>

Require all granted

</Proxy>

<Location / >

Require all granted

</Location>

</VirtualHost>

[root@localhost ~]# service httpd configtest

Syntax OK

[root@localhost ~]#

[root@localhost ~]# service httpd restart

Stopping httpd: [  OK  ]

Starting httpd: [  OK  ]

[root@localhost ~]#

[root@localhost ~]# vim /etc/httpd/extra/httpd-proxy.conf

ProxyRequests Off

<proxy balancer://lbcluster1>

BalancerMember ajp://192.168.10.2:8009 loadfactor=1 route=TomcatA

BalancerMember ajp://192.168.10.3:8009 loadfactor=1 route=TomcatB

# this line is new compared with the previous version

ProxySet lbmethod=byrequests

</proxy>

<VirtualHost *:80>

ServerName www.magedu.com

ProxyVia On

ProxyPass / balancer://lbcluster1/

ProxyPassReverse / balancer://lbcluster1/

<Proxy *>

Require all granted

</Proxy>

<Location / >

Require all granted

</Location>

</VirtualHost>

[root@localhost ~]# service httpd restart

Stopping httpd: [  OK  ]

Starting httpd: [  OK  ]

[root@localhost ~]#

On tomcat1 (192.168.0.2):

[root@node1 ~]# catalina.sh start

Using CATALINA_BASE: /usr/local/tomcat

Using CATALINA_HOME: /usr/local/tomcat

Using CATALINA_TMPDIR: /usr/local/tomcat/temp

Using JRE_HOME: /usr/java/latest

Using CLASSPATH: /usr/local/tomcat/bin/bootstrap.jar:/usr/local/tomcat/bin/tomcat-juli.jar

[root@node1 ~]#

On tomcat2 (192.168.0.3):

[root@rs3 conf]# catalina.sh start

Using CATALINA_BASE: /usr/local/tomcat

Using CATALINA_HOME: /usr/local/tomcat

Using CATALINA_TMPDIR: /usr/local/tomcat/temp

Using JRE_HOME: /usr/java/latest

Using CLASSPATH: /usr/local/tomcat/bin/bootstrap.jar:/usr/local/tomcat/bin

[root@rs3 conf]#

http://192.168.0.60/index.jsp # this works in my setup

If something goes wrong, check the log:

[root@localhost ~]# tail /usr/local/apache/logs/error_log # look for the errors in here

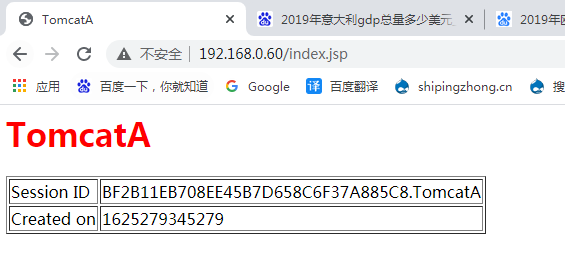

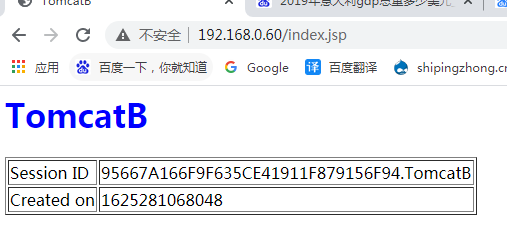

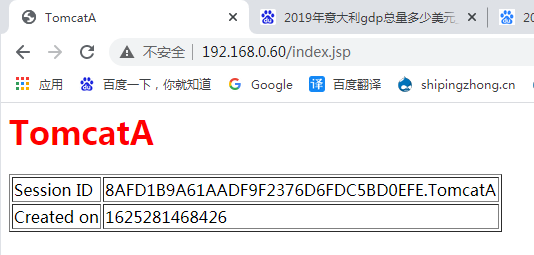

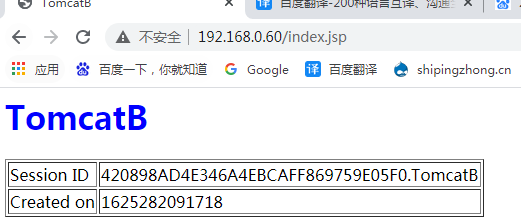

The session ID changes on every request.

On the front-end Apache (192.168.0.60; second NIC 192.168.10.10):

[root@localhost ~]# vim /etc/httpd/extra/httpd-proxy.conf # this version is broken

ProxyRequests Off

<proxy balancer://lbcluster1>

# stickysession=jsessionid does not belong on the BalancerMember lines; httpd rejects it there

BalancerMember ajp://192.168.10.2:8009 loadfactor=1 route=TomcatA stickysession=jsessionid

BalancerMember ajp://192.168.10.3:8009 loadfactor=1 route=TomcatB stickysession=jsessionid

ProxySet lbmethod=byrequests

</proxy>

<VirtualHost *:80>

ServerName www.magedu.com

ProxyVia On

ProxyPass / balancer://lbcluster1/

ProxyPassReverse / balancer://lbcluster1/

<Proxy *>

Require all granted

</Proxy>

<Location / >

Require all granted

</Location>

</VirtualHost>

[root@localhost ~]# service httpd configtest

AH00526: Syntax error on line 3 of /etc/httpd/extra/httpd-proxy.conf:

BalancerMember unknown Worker parameter

[root@localhost ~]#

[root@localhost ~]# vim /etc/httpd/extra/httpd-proxy.conf

ProxyRequests Off

<proxy balancer://lbcluster1>

BalancerMember ajp://192.168.10.2:8009 loadfactor=1 route=TomcatA

BalancerMember ajp://192.168.10.3:8009 loadfactor=1 route=TomcatB

ProxySet lbmethod=byrequests

</proxy>

<VirtualHost *:80>

ServerName www.magedu.com

ProxyVia On

# stickysession=jsessionid is accepted on the ProxyPass and ProxyPassReverse lines

ProxyPass / balancer://lbcluster1/ stickysession=jsessionid

ProxyPassReverse / balancer://lbcluster1/ stickysession=jsessionid

<Proxy *>

Require all granted

</Proxy>

<Location / >

Require all granted

</Location>

</VirtualHost>

[root@localhost ~]# service httpd configtest

Syntax OK

[root@localhost ~]#

[root@localhost ~]# service httpd restart

Stopping httpd: [  OK  ]

Starting httpd: [  OK  ]

[root@localhost ~]#

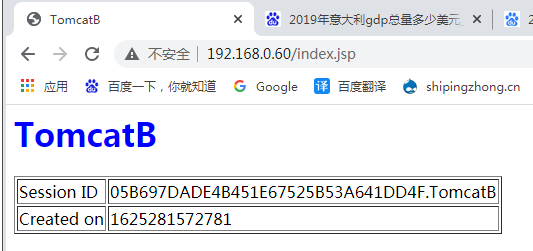

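As the screenshots above show, the session ID keeps changing, so requests are not bound to one backend.

Since the session already differs from request to request in normal operation, it certainly cannot be carried over when a backend fails.

In the screenshot below, the session ID sent in each request differs from the one in the response: every request is treated as a new one, a different cookie comes back, and so the session differs each time.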

[root@localhost ~]# vim /etc/httpd/extra/httpd-proxy.conf

ProxyRequests Off

<proxy balancer://lbcluster1>

BalancerMember ajp://192.168.10.2:8009 loadfactor=1 route=TomcatA

BalancerMember ajp://192.168.10.3:8009 loadfactor=1 route=TomcatB

ProxySet lbmethod=byrequests

</proxy>

<VirtualHost *:80>

ServerName www.magedu.com

ProxyVia On

# nofailover=on is added to ProxyPass only, not to ProxyPassReverse

ProxyPass / balancer://lbcluster1/ stickysession=jsessionid nofailover=on

ProxyPassReverse / balancer://lbcluster1/ stickysession=jsessionid

<Proxy *>

Require all granted

</Proxy>

<Location / >

Require all granted

</Location>

</VirtualHost>

[root@localhost ~]# service httpd restart

Stopping httpd: [  OK  ]

Starting httpd: [  OK  ]

[root@localhost ~]#

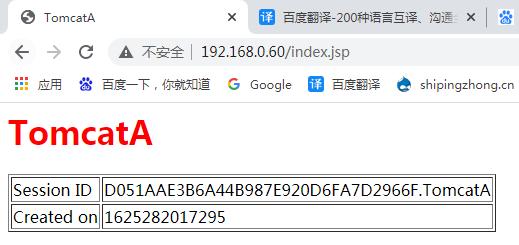

As the screenshots above show, sessions are still not bound to one backend.

[root@localhost ~]# vim /etc/httpd/extra/httpd-proxy.conf

ProxyRequests Off

<proxy balancer://lbcluster1>

BalancerMember ajp://192.168.10.2:8009 loadfactor=1 route=TomcatA

BalancerMember ajp://192.168.10.3:8009 loadfactor=1 route=TomcatB

# ProxySet removed; in testing, sessions were still not sticky

#ProxySet lbmethod=byrequests

</proxy>

<VirtualHost *:80>

ServerName www.magedu.com

ProxyVia On

ProxyPass / balancer://lbcluster1/ stickysession=jsessionid nofailover=on

ProxyPassReverse / balancer://lbcluster1/ stickysession=jsessionid

<Proxy *>

Require all granted

</Proxy>

<Location / >

Require all granted

</Location>

</VirtualHost>

After saving the configuration above and restarting httpd, sessions are still not sticky.

[root@localhost ~]# vim /etc/httpd/extra/httpd-proxy.conf

ProxyRequests Off

<proxy balancer://lbcluster1>

BalancerMember ajp://192.168.10.2:8009 loadfactor=1 route=TomcatA

BalancerMember ajp://192.168.10.3:8009 loadfactor=1 route=TomcatB

# changed from byrequests to bytraffic

ProxySet lbmethod=bytraffic

</proxy>

<VirtualHost *:80>

ServerName www.magedu.com

# ProxyVia On (commented out in this attempt)

ProxyPass / balancer://lbcluster1/ stickysession=jsessionid nofailover=on

ProxyPassReverse / balancer://lbcluster1/ stickysession=jsessionid

<Proxy *>

Require all granted

</Proxy>

<Location / >

Require all granted

</Location>

</VirtualHost>

After saving the configuration above and restarting httpd, sessions are still not sticky.

[root@localhost ~]# vim /etc/httpd/extra/httpd-proxy.conf

ProxyRequests Off

<proxy balancer://lbcluster1>

# route=TomcatA and route=TomcatB removed in this attempt

BalancerMember ajp://192.168.10.2:8009 loadfactor=1

BalancerMember ajp://192.168.10.3:8009 loadfactor=1

ProxySet lbmethod=bytraffic

</proxy>

<VirtualHost *:80>

ServerName www.magedu.com

# ProxyVia On

ProxyPass / balancer://lbcluster1/ stickysession=jsessionid nofailover=on

ProxyPassReverse / balancer://lbcluster1/ stickysession=jsessionid

<Proxy *>

Require all granted

</Proxy>

<Location / >

Require all granted

</Location>

</VirtualHost>

After saving the configuration above and restarting httpd, sessions are still not sticky.

[root@localhost ~]# vim /etc/httpd/extra/httpd-proxy.conf

ProxyRequests Off

<proxy balancer://lbcluster1>

# route=TomcatA and route=TomcatB removed in this attempt

BalancerMember ajp://192.168.10.2:8009 loadfactor=1

BalancerMember ajp://192.168.10.3:8009 loadfactor=1

ProxySet lbmethod=bytraffic

</proxy>

<VirtualHost *:80>

ServerName www.magedu.com

# ProxyVia On

ProxyPass / balancer://lbcluster1/ stickysession=jsessionid nofailover=on

# ProxyPassReverse / balancer://lbcluster1/ stickysession=jsessionid (removed in this attempt)

<Proxy *>

Require all granted

</Proxy>

<Location / >

Require all granted

</Location>

</VirtualHost>

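After saving the configuration above and restarting httpd, sessions are still not sticky.

In Mage's repeated tests, stickysession had no effect with mod_proxy. A likely cause, which these notes do not verify, is the value used: Tomcat's session cookie is named JSESSIONID in upper case, and the later example in these notes sets stickysession=JSESSIONID|jsessionid, where the first name applies to the cookie and the second to the URL path parameter.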
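To make the moving parts of sticky sessions concrete, here is a simplified model of what the balancer has to do: find the session cookie, read the route that Tomcat appends to the session ID after a dot (the jvmRoute), and map it to the BalancerMember with the same route. It is only a sketch; the function names are hypothetical, and the exact, case-sensitive cookie-name comparison is an assumption about httpd's behaviour rather than something confirmed in these notes.

```python
def cookie_value(cookie_header, name):
    """Return the value of cookie `name` using an exact, case-sensitive
    match (an assumption of this sketch), or None if it is absent."""
    for part in cookie_header.split(";"):
        key, sep, value = part.strip().partition("=")
        if sep and key == name:
            return value
    return None


def route_of(session_id):
    """Tomcat appends '.<jvmRoute>' to the session ID; return that suffix."""
    if session_id is None or "." not in session_id:
        return None
    return session_id.rsplit(".", 1)[1]


def choose_member(cookie_header, sticky_name, members):
    """members maps a route name to a backend URL.
    Returns the sticky backend, or None when no route can be determined
    (the balancer would then fall back to its normal lbmethod)."""
    return members.get(route_of(cookie_value(cookie_header, sticky_name)))


if __name__ == "__main__":
    members = {
        "TomcatA": "ajp://192.168.10.2:8009",
        "TomcatB": "ajp://192.168.10.3:8009",
    }
    header = "JSESSIONID=0123ABCD.TomcatB"
    print(choose_member(header, "JSESSIONID", members))
    print(choose_member(header, "jsessionid", members))
```

Under these assumptions the lower-case name finds no cookie, so no route is derived and every request is scheduled afresh, which would match the changing session IDs seen above.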

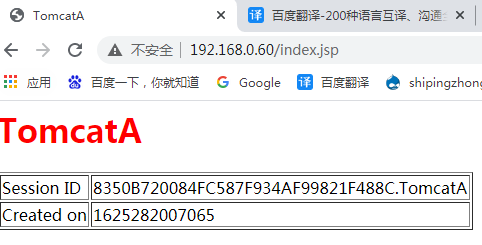

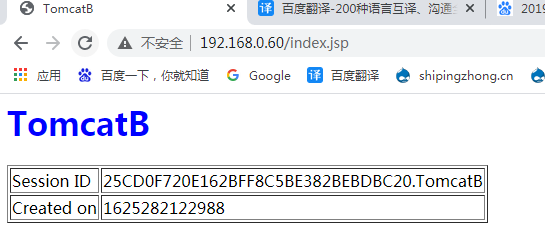

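As the figure shows, when a backend server fails, user requests are forwarded to the other nodes, and the session data held on the failed server is lost.

Tomcat has a component called Cluster, which comes with several session managers.

Session management:

Standard and persistent session managers

Standard session manager (StandardManager):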

<Manager className="org.apache.catalina.session.StandardManager"

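maxInactiveInterval="7200"/> <!-- maxInactiveInterval is the maximum inactive interval in seconds: a session is discarded only after 2 hours (7200 s) without a request; requests within that window belong to the same session and are treated as the same user -->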

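By default, sessions are saved to the file SESSIONS.ser under $CATALINA_HOME/work/Catalina/<hostname>/<webapp-name>/, so sessions survive a normal Tomcat restart. StandardManager keeps sessions in memory, writes them to this file when Tomcat is stopped cleanly, and reloads them at startup.

If the host crashes, the sessions cannot be recovered: anything that exists only in memory and has not yet been written to the file is lost. Because the file is local, the sessions cannot be shared with other hosts either.

maxActiveSessions: maximum number of active sessions allowed; the default, -1, means no limit;

maxInactiveInterval: timeout for inactive sessions; the default is 60 s;

pathname: path of the file in which sessions are saved;

Persistent session manager (PersistentManager):

Saves session data to a persistent store and can reload it when the server is restarted after an unexpected stop. Active sessions still live in memory; idle sessions are backed up or swapped out to the store. PersistentManager supports a file store (FileStore) or a JDBC store (JDBCStore).

Example of saving to files: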

<Manager className="org.apache.catalina.session.PersistentManager"

saveOnRestart="true">

<Store className="org.apache.catalina.session.FileStore"

directory="/data/tomcat-sessions"/>

</Manager>

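Each user's session is saved in the directory given by directory, in a file named <session id>.session, and a background thread checks for expired sessions at regular intervals (set by the checkInterval parameter, 60 seconds by default).

Example of saving to a JDBCStore: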

<Manager className="org.apache.catalina.session.PersistentManager"

saveOnRestart="true">

<Store className="org.apache.catalina.session.JDBCStore"

driverName="com.mysql.jdbc.Driver"

connectionURL="jdbc:mysql://localhost:3306/mydb?user=jb&amp;password=pw"/>

</Manager>

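There are also the cluster session managers:

DeltaManager

As in the figure, the backend Tomcat servers form something like a high-availability cluster whose nodes exchange heartbeats. Any session that a user creates on one Tomcat through the front end is copied to every node currently known to be alive, so each node holds a copy; if one node fails, the session data still exists elsewhere. This is Tomcat's built-in session cluster, which shares sessions among its members.

All of this has to be defined: how often heartbeats are sent, which multicast address is used, which nodes receive them, on which address a node sends its own heartbeat and on which it receives those of others, and how it decides locally which hosts are online, which are offline and who the current members are. A Cluster component is therefore needed to organize the backend Tomcats into an internal cluster. DeltaManager depends on that cluster, and the cluster announces itself through multicast heartbeats. On a busy network, replicating sessions to every node consumes a lot of bandwidth and degrades server performance; in that case a backup cluster (BackupManager) can be used instead.

BackupManager

As in the figure, the nodes again form a cluster, but each node has one or two backup nodes and replicates only to them. If a node fails, its requests are redirected to its backup node. What if the backup node fails as well? Nodes could back each other up in a chain (node 1 backed up by node 2, node 2 by node 3, node 3 by node 4, and so on), so that node 1's sessions go to node 2, node 2's to node 3 and node 3's to node 4. But then node 1 holds only its own data while node 4 ends up holding everything, which is not ideal. A better layout is for two nodes to back each other up: with four nodes, build two pairs. This resembles an HA cluster divided into failover domains, or the two-node active/active model.

Tomcat is unlikely to be deployed as a very large cluster. DeltaManager has one more weakness: if every node goes down at once, all sessions are gone because they exist only in memory. One workaround is to set up a shared NFS server and have Tomcat export the session data to it.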
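The bandwidth argument above can be put into numbers with a rough count of replicated session copies. This is a back-of-the-envelope model only: it assumes each node creates the same number of sessions, that all-to-all replication sends one copy to every other node, and that the backup scheme sends exactly one copy; the function names are invented for the example.

```python
def copies_all_to_all(nodes, sessions_per_node):
    """Copies sent when every session goes to every other node
    (the DeltaManager-style scheme described above)."""
    return nodes * sessions_per_node * (nodes - 1)


def copies_single_backup(nodes, sessions_per_node):
    """Copies sent when every session goes to exactly one backup node
    (the BackupManager-style scheme described above)."""
    return nodes * sessions_per_node if nodes > 1 else 0


def sessions_held_all_to_all(nodes, sessions_per_node):
    """Sessions each node keeps in memory with all-to-all replication."""
    return nodes * sessions_per_node


if __name__ == "__main__":
    for n in (2, 4, 8):
        print(n, copies_all_to_all(n, 1000), copies_single_backup(n, 1000))
```

With two nodes the two schemes cost the same; the gap grows linearly with the number of nodes, which is why all-to-all replication is usually kept to small clusters.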

Both mod_jk and mod_proxy can expose status information.

mod_proxy exposes it under a URL path.

Configuration for mod_proxy status output:

<Location /balancer-manager>

SetHandler balancer-manager

# httpd 2.2 access-control syntax; on httpd 2.4 use "Require all granted" instead of Order/Allow

Order Deny,Allow

Allow from all

</Location>

On the front-end Apache (192.168.0.60; second NIC 192.168.10.10):

[root@localhost ~]# vim /etc/httpd/extra/httpd-proxy.conf

ProxyRequests Off

<proxy balancer://lbcluster1>

BalancerMember ajp://192.168.10.2:8009 loadfactor=1 route=TomcatA

BalancerMember ajp://192.168.10.3:8009 loadfactor=1 route=TomcatB

ProxySet lbmethod=bytraffic

</proxy>

<VirtualHost *:80>

ServerName www.magedu.com

# ProxyVia On

ProxyPass / balancer://lbcluster1/ stickysession=jsessionid nofailover=on

ProxyPassReverse / balancer://lbcluster1/ stickysession=jsessionid

<Proxy *>

Require all granted

</Proxy>

<Location / >

Require all granted

</Location>

# in practice, status information should not be visible to everyone

<Location /balancer-manager>

SetHandler balancer-manager

Require all granted

</Location>

</VirtualHost>

[root@localhost ~]# service httpd configtest

Syntax OK

[root@localhost ~]#

[root@localhost ~]# service httpd restart

Stopping httpd: [  OK  ]

Starting httpd: [  OK  ]

[root@localhost ~]#

http://192.168.0.60/balancer-manager # this request was proxied to Tomcat: there is only one virtual host here, /balancer-manager is attached to it, and everything in that virtual host is proxied to the backend

[root@localhost ~]# tail /usr/local/apache/logs/error_log

[Sat Jul 03 19:17:30.025621 2021] [mpm_event:notice] [pid 4743:tid 3077940928] AH00489: Apache/2.4.4 (Unix) OpenSSL/1.0.1e-fips configured -- resuming normal operations

[Sat Jul 03 19:17:30.025659 2021] [core:notice] [pid 4743:tid 3077940928] AH00094: Command line: '/usr/local/apache/bin/httpd'

[Sat Jul 03 22:35:01.509282 2021] [mpm_event:notice] [pid 4743:tid 3077940928] AH00491: caught SIGTERM, shutting down

[Sat Jul 03 22:35:02.000981 2021] [ssl:notice] [pid 5339:tid 3077846720] AH01886: SSL FIPS mode disabled

[Sat Jul 03 22:35:02.040880 2021] [auth_digest:notice] [pid 5340:tid 3077846720] AH01757: generating secret for digest authentication ...

[Sat Jul 03 22:35:03.001815 2021] [ssl:notice] [pid 5340:tid 3077846720] AH01886: SSL FIPS mode disabled

[Sat Jul 03 22:35:03.057728 2021] [ssl:warn] [pid 5340:tid 3077846720] AH01873: Init: Session Cache is not configured [hint: SSLSessionCache]

[Sat Jul 03 22:35:03.057794 2021] [lbmethod_heartbeat:notice] [pid 5340:tid 3077846720] AH02282: No slotmem from mod_heartmonitor

[Sat Jul 03 22:35:03.059979 2021] [mpm_event:notice] [pid 5340:tid 3077846720] AH00489: Apache/2.4.4 (Unix) OpenSSL/1.0.1e-fips configured -- resuming normal operations

[Sat Jul 03 22:35:03.060076 2021] [core:notice] [pid 5340:tid 3077846720] AH00094: Command line: '/usr/local/apache/bin/httpd'

[root@localhost ~]# tail /usr/local/apache/logs/access_log

192.168.0.103 - - [03/Jul/2021:19:13:38 +0800] "GET /index.jsp HTTP/1.1" 200 366

192.168.0.103 - - [03/Jul/2021:19:13:50 +0800] "GET /index.jsp HTTP/1.1" 200 367

192.168.0.103 - - [03/Jul/2021:19:14:41 +0800] "-" 408 -

192.168.0.103 - - [03/Jul/2021:19:17:31 +0800] "GET /index.jsp HTTP/1.1" 200 366

192.168.0.103 - - [03/Jul/2021:19:17:33 +0800] "GET /index.jsp HTTP/1.1" 200 367

192.168.0.103 - - [03/Jul/2021:19:17:33 +0800] "GET /index.jsp HTTP/1.1" 200 366

192.168.0.103 - - [03/Jul/2021:19:17:34 +0800] "GET /index.jsp HTTP/1.1" 200 366

192.168.0.103 - - [03/Jul/2021:19:18:22 +0800] "-" 408 -

192.168.0.103 - - [03/Jul/2021:22:35:53 +0800] "GET /balancer-manager HTTP/1.1" 404 983

192.168.0.103 - - [03/Jul/2021:22:36:44 +0800] "-" 408 -

[root@localhost ~]#

The 404 appears to mean that the path does not exist: the request went to Tomcat, which has no /balancer-manager.

[root@localhost ~]# vim /etc/httpd/extra/httpd-proxy.conf

ProxyRequests Off

<proxy balancer://lbcluster1>

BalancerMember ajp://192.168.10.2:8009 loadfactor=1 route=TomcatA

BalancerMember ajp://192.168.10.3:8009 loadfactor=1 route=TomcatB

ProxySet lbmethod=bytraffic

</proxy>

<VirtualHost *:80>

servername www.magedu.com

<Location /balancer-manager>

SetHandler balancer-manager

Require all granted

</Location>

</VirtualHost>

<VirtualHost *:80>

ServerName localhost

ProxyVia On

ProxyPass / balancer://lbcluster1/ stickysession=jsessionid nofailover=on

ProxyPassReverse / balancer://lbcluster1/ stickysession=jsessionid

<Proxy *>

Require all granted

</Proxy>

<Location / >

Require all granted

</Location>

</VirtualHost>

[root@localhost ~]# service httpd restart

Stopping httpd: [  OK  ]

Starting httpd: [  OK  ]

[root@localhost ~]#

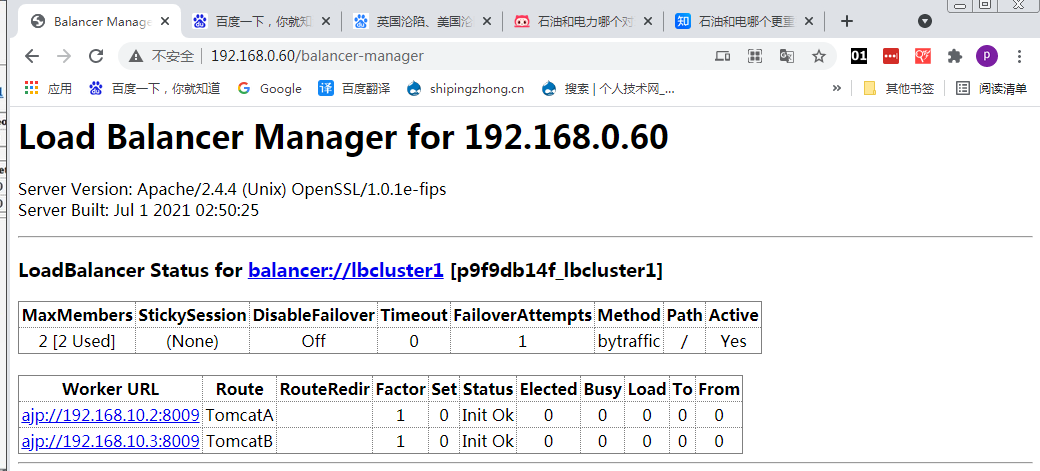

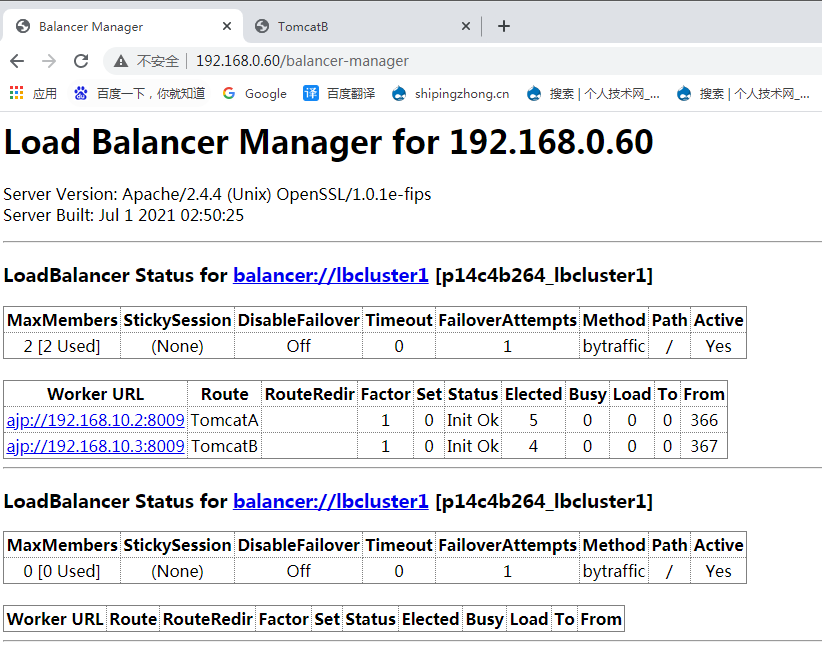

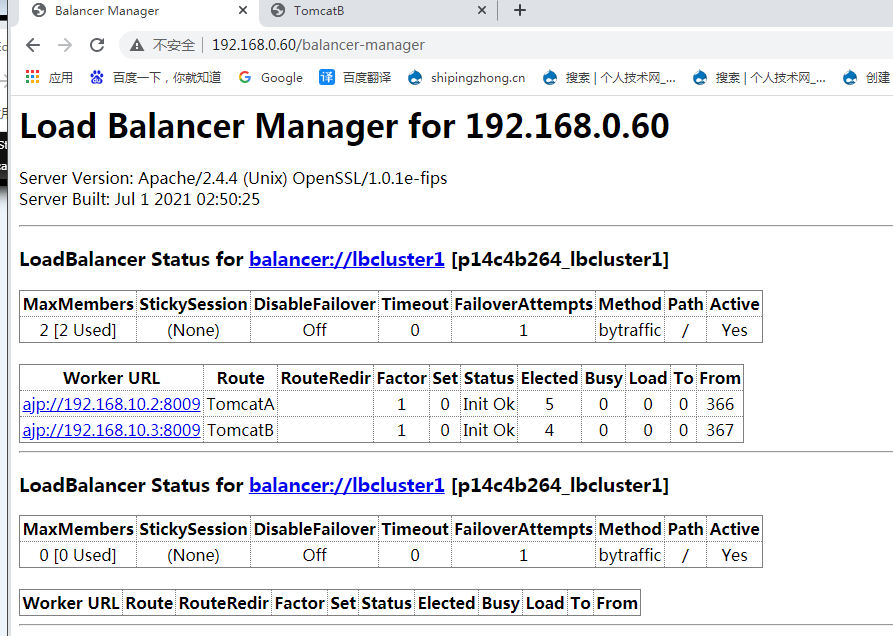

http://192.168.0.60/balancer-manager # works now

Below is another approach: put /balancer-manager inside the virtual host that proxies to Tomcat.

http://httpd.apache.org/docs/2.4/mod/mod_proxy.html

or http://httpd.apache.org/docs/2.4/mod/directives.html

http://httpd.apache.org/docs/2.4/mod/mod_proxy.html#proxypass

When reverse proxying is done in a virtual host, status output can be enabled in that same virtual host like this:

ProxyRequests Off

<proxy balancer://lbcluster1>

BalancerMember ajp://192.168.10.8:8009 loadfactor=1

BalancerMember ajp://192.168.10.9:8009 loadfactor=1

ProxySet lbmethod=bytraffic

</proxy>

<VirtualHost *:80>

ServerName localhost

ProxyVia On

ProxyPass / balancer://lbcluster1/ stickysession=JSESSIONID|jsessionid nofailover=On

ProxyPassReverse / balancer://lbcluster1/

<Location /balancer-manager>

SetHandler balancer-manager

Proxypass !

Require all granted

</Location>

<Proxy *>

Require all granted

</Proxy>

<Location / >

Require all granted

</Location>

</VirtualHost>

[root@localhost ~]# vim /etc/httpd/extra/httpd-proxy.conf

ProxyRequests Off

<proxy balancer://lbcluster1>

BalancerMember ajp://192.168.10.2:8009 loadfactor=1 route=TomcatA

BalancerMember ajp://192.168.10.3:8009 loadfactor=1 route=TomcatB

ProxySet lbmethod=bytraffic

</proxy>

<VirtualHost *:80>

ServerName www.magedu.com

# ProxyVia On

ProxyPass / balancer://lbcluster1/ stickysession=jsessionid nofailover=on

ProxyPassReverse / balancer://lbcluster1/ stickysession=jsessionid

<Location /balancer-manager>

SetHandler balancer-manager

# adding "ProxyPass !" fixed it: this path is not proxied to the backend Tomcat

ProxyPass !

Require all granted

</Location>

<Proxy *>

Require all granted

</Proxy>

<Location / >

Require all granted

</Location>

</VirtualHost>

[root@localhost ~]# service httpd restart

Stopping httpd: [  OK  ]

Starting httpd: [  OK  ]

[root@localhost ~]#

http://192.168.0.60/balancer-manager

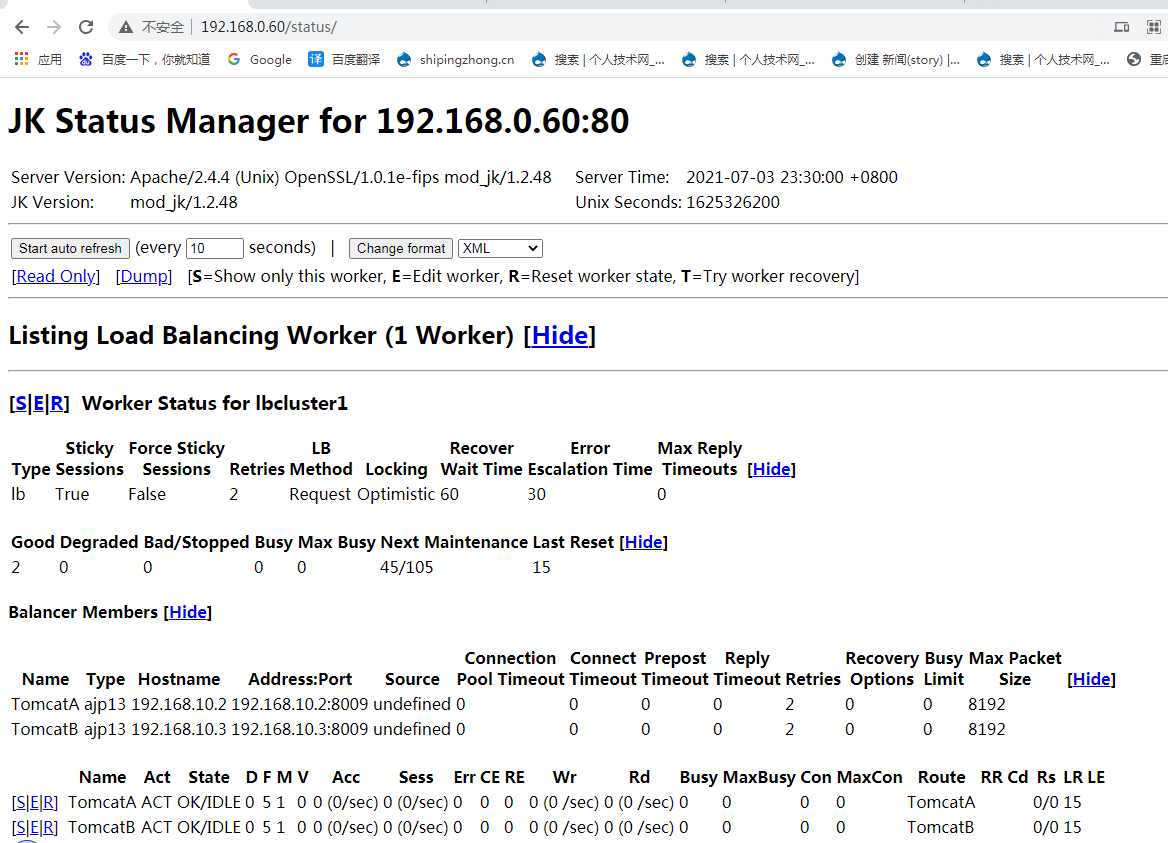

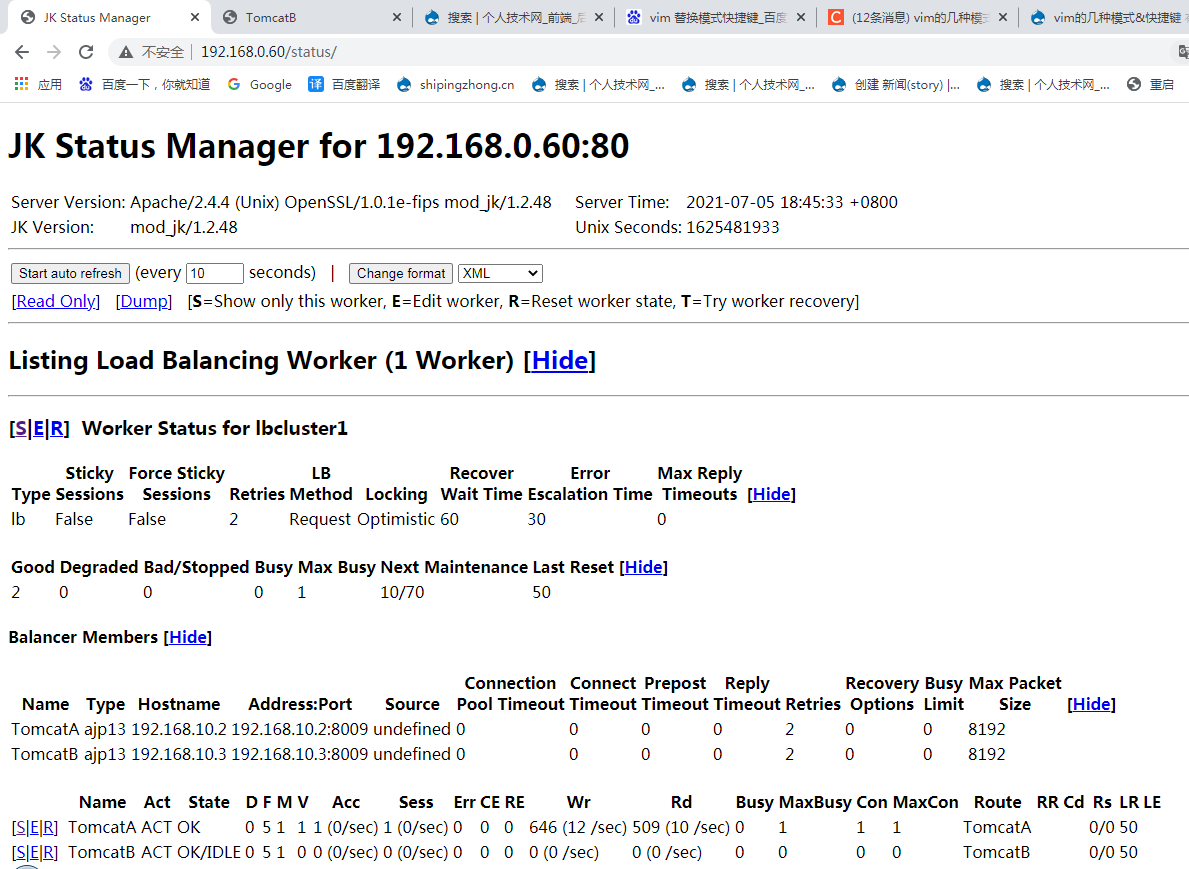
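Why the exclusion matters can be shown with a toy model of prefix-based proxy mapping. It assumes the flat form of the rules (an exclusion such as ProxyPass /balancer-manager ! listed before ProxyPass /) and first-match-wins ordering; the `resolve` function is invented for this sketch and ignores how httpd merges Location sections.

```python
def resolve(path, rules):
    """rules is an ordered list of (prefix, target) pairs.
    A target of '!' means: do not proxy, let httpd handle it locally.
    Returns the backend URL, or None when the request stays local."""
    for prefix, target in rules:
        if path.startswith(prefix):
            if target == "!":
                return None
            return target + path[len(prefix):]
    return None


if __name__ == "__main__":
    without_exclusion = [("/", "balancer://lbcluster1/")]
    with_exclusion = [
        ("/balancer-manager", "!"),
        ("/", "balancer://lbcluster1/"),
    ]
    # Proxied to Tomcat, which has no such page (the 404 seen earlier).
    print(resolve("/balancer-manager", without_exclusion))
    # Stays on httpd, where the balancer-manager handler answers.
    print(resolve("/balancer-manager", with_exclusion))
    print(resolve("/index.jsp", with_exclusion))
```

In this model the catch-all rule for / swallows /balancer-manager unless the exclusion is seen first, which is the behaviour the notes ran into.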

Status output with the mod_jk module

[root@localhost ~]# vim /etc/httpd/httpd.conf

...............................................................................................

#Include /etc/httpd/extra/httpd-proxy.conf

Include /etc/httpd/extra/httpd-jk.conf

...............................................................................................

[root@localhost ~]# cp /etc/httpd/extra/httpd-jk.conf /etc/httpd/extra/httpd-jk.conf.bak

[root@localhost ~]# vim /etc/httpd/extra/httpd-jk.conf

LoadModule jk_module modules/mod_jk.so

JkWorkersFile /etc/httpd/extra/workers.properties

JkLogFile logs/mod_jk.log

JkLogLevel debug

JkMount /* lbcluster1

JkMount /status/ stat1

[root@localhost ~]# cp /etc/httpd/extra/workers.properties /etc/httpd/extra/workers.properties.bak

[root@localhost ~]# vim /etc/httpd/extra/workers.properties

worker.list = lbcluster1,stat1

worker.TomcatA.type = ajp13

worker.TomcatA.host = 192.168.10.2

worker.TomcatA.port = 8009

worker.TomcatA.lbfactor = 5

worker.TomcatB.type = ajp13

worker.TomcatB.host = 192.168.10.3

worker.TomcatB.port = 8009

worker.TomcatB.lbfactor = 5

worker.lbcluster1.type = lb

worker.lbcluster1.sticky_session = 1

worker.lbcluster1.balance_workers = TomcatA, TomcatB

worker.stat1.type = status

[root@localhost ~]# service httpd restart

Stopping httpd: [  OK  ]

Starting httpd: [  OK  ]

[root@localhost ~]#
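A workers.properties file is easy to get subtly wrong, for example by listing a balance_workers member that has no host defined. The following is a small, hypothetical consistency check written for these notes; it is not part of mod_jk, and it only understands the handful of properties used in the file above.

```python
SAMPLE = """\
worker.list = lbcluster1,stat1
worker.TomcatA.type = ajp13
worker.TomcatA.host = 192.168.10.2
worker.TomcatA.port = 8009
worker.TomcatA.lbfactor = 5
worker.TomcatB.type = ajp13
worker.TomcatB.host = 192.168.10.3
worker.TomcatB.port = 8009
worker.TomcatB.lbfactor = 5
worker.lbcluster1.type = lb
worker.lbcluster1.sticky_session = 1
worker.lbcluster1.balance_workers = TomcatA, TomcatB
worker.stat1.type = status
"""


def parse_properties(text):
    """Parse simple 'key = value' lines, skipping blanks and # comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def split_names(value):
    return [name.strip() for name in value.split(",") if name.strip()]


def check_lb_workers(props):
    """Return a list of problems; an empty list means the lb workers
    in worker.list reference fully defined members."""
    problems = []
    for name in split_names(props.get("worker.list", "")):
        wtype = props.get(f"worker.{name}.type")
        if wtype is None:
            problems.append(f"{name}: no type defined")
        elif wtype == "lb":
            members = split_names(props.get(f"worker.{name}.balance_workers", ""))
            if not members:
                problems.append(f"{name}: no balance_workers")
            for member in members:
                for attr in ("type", "host", "port"):
                    if f"worker.{member}.{attr}" not in props:
                        problems.append(f"{member}: missing {attr}")
    return problems


if __name__ == "__main__":
    print(check_lb_workers(parse_properties(SAMPLE)))
```

Run against the file shown above, the check reports no problems; removing a member's host line makes it report that member.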

Now switch back to mod_proxy:

[root@localhost ~]# vim /etc/httpd/httpd.conf

...............................................................................................

Include /etc/httpd/extra/httpd-proxy.conf

#Include /etc/httpd/extra/httpd-jk.conf

...............................................................................................

[root@localhost ~]# service httpd restart

Stopping httpd: [  OK  ]

Starting httpd: [  OK  ]

[root@localhost ~]#

How do we turn the two Tomcats into a session-sharing cluster? They have to be defined as a Cluster.

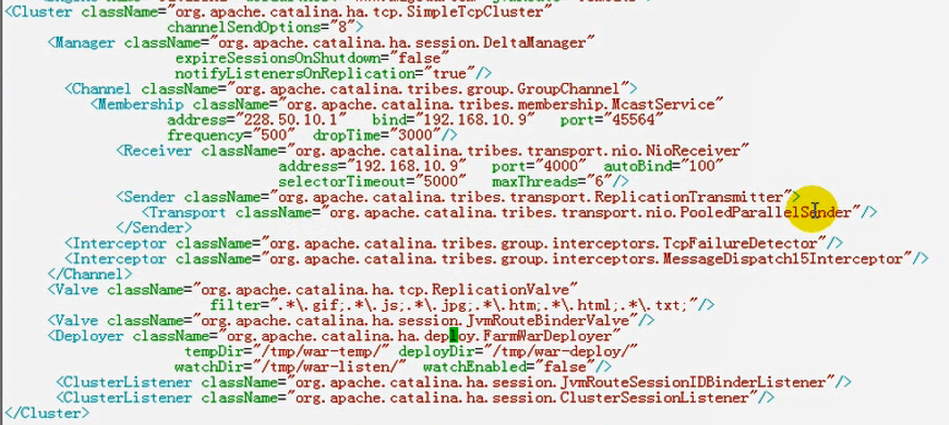

1) Tomcat cluster based on in-memory replication

Place the following inside <Engine name="Catalina" defaultHost="www.example.com" jvmRoute="tc1">, on every node:

<Cluster className="org.apache.catalina.ha.tcp.SimpleTcpCluster"

channelSendOptions="8"> <!-- the Cluster element enables clustering -->

<Manager className="org.apache.catalina.ha.session.DeltaManager"

expireSessionsOnShutdown="false"

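notifyListenersOnReplication="true"/><!-- Manager defines the session manager; here DeltaManager, not BackupManager, is used to replicate session data -->

<Channel className="org.apache.catalina.tribes.group.GroupChannel"> <!-- Channel defines the underlying communication channel (something that never needed attention with corosync or heartbeat). Heartbeats travel over it, and membership is determined within it: how many nodes there are, which nodes they are, and so on -->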

<Membership className="org.apache.catalina.tribes.membership.McastService"

address="228.8.8.9" bind="172.16.100.1" port="45564"

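frequency="500" dropTime="3000"/> <!-- Membership: a heartbeat is multicast to the group address on port 45564 every 500 ms; a Tomcat that sends nothing for 3000 ms is removed from the cluster. address is the multicast address and must be identical on both Tomcat nodes (the same concern exists with corosync and heartbeat) -->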

<Receiver className="org.apache.catalina.tribes.transport.nio.NioReceiver"

address="172.16.100.1" port="4000" autoBind="100"

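selectorTimeout="5000" maxThreads="6"/><!-- Receiver: how messages from other nodes are received. address is the local address to listen on (172.16.100.1 here; auto is also possible) and port 4000 is where incoming messages arrive. autoBind is the size of the port search range: with port 4000 and autoBind 100 the receiver listens on a free port between 4000 and 4099. selectorTimeout is the polling timeout inside NioReceiver, in milliseconds. maxThreads is the maximum number of threads working at the same time -->

<!-- with address="auto" the receiver listens on one of this host's own addresses for heartbeats and session data; on a host with several IPs, set the address explicitly instead of using auto -->

<Sender className="org.apache.catalina.tribes.transport.ReplicationTransmitter"><!-- sending is based on the ReplicationTransmitter class -->

<Transport className="org.apache.catalina.tribes.transport.nio.PooledParallelSender"/> <!-- Transport states how data is sent out, in parallel or one message at a time; PooledParallelSender sends in parallel --> <!-- Cluster, Channel, Receiver, Sender and Transport are all components that ship with Tomcat -->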

</Sender>

<Interceptor className="org.apache.catalina.tribes.group.interceptors.TcpFailureDetector"/><!-- decides what happens to membership after a TCP transmission failure -->

<Interceptor className="org.apache.catalina.tribes.group.interceptors.MessageDispatch15Interceptor"/>

</Channel>

<Valve className="org.apache.catalina.ha.tcp.ReplicationValve"

filter=".*\.gif;.*\.js;.*\.jpg;.*\.htm;.*\.html;.*\.txt;"/><!-- filter lists the requests (static files here) that do not trigger session replication; without a filter every request would; no need to dig deeper -->

<Valve className="org.apache.catalina.ha.session.JvmRouteBinderValve"/><!-- a valve tied to jvmRoute; with it in place the TomcatA and TomcatB jvmRoute values are passed along correctly; no need to dig deeper -->

<Deployer className="org.apache.catalina.ha.deploy.FarmWarDeployer"

tempDir="/tmp/war-temp/" deployDir="/tmp/war-deploy/"

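watchDir="/tmp/war-listen/" watchEnabled="false"/><!-- Deployer: farm deployment, i.e. an application deployed on one node is copied to the other nodes automatically. It is not relied on here, since the files were copied by hand, so no need to dig deeper -->

<ClusterListener className="org.apache.catalina.ha.session.JvmRouteSessionIDBinderListener"/><!-- with this listener session IDs are passed along correctly; no need to dig deeper -->

<ClusterListener className="org.apache.catalina.ha.session.ClusterSessionListener"/><!-- cluster listener that handles the replicated session data -->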

</Cluster>

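<!-- only Receiver, Sender, Membership and Manager need attention; everything else can be copied as is -->

Defined inside the Engine container, the configuration above enables clustering for all hosts. Defined inside a particular Host, it enables clustering for that host only.

2) In addition, every web application that should be clustered must have <distributable/> in its web.xml. If a web application has no web.xml of its own, copy the default web.xml into its WEB-INF directory.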
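The Membership and Receiver numbers used above (heartbeat every 500 ms, dropTime 3000 ms, port 4000 with autoBind 100) can be illustrated with a tiny model. It is a sketch of the idea only, not how Tribes is implemented; the function names and the inclusive comparison at exactly dropTime are assumptions of this example.

```python
def alive_members(last_heartbeat_ms, now_ms, drop_time_ms=3000):
    """Return the members whose last heartbeat is at most drop_time_ms old.
    last_heartbeat_ms maps a member name to the time its last heartbeat
    was received, in milliseconds."""
    return sorted(
        name
        for name, seen in last_heartbeat_ms.items()
        if now_ms - seen <= drop_time_ms
    )


def receiver_ports(port=4000, auto_bind=100):
    """Ports the receiver may try: port, port + 1, ... (auto_bind of them)."""
    return range(port, port + auto_bind)


if __name__ == "__main__":
    heartbeats = {"TomcatA": 9800, "TomcatB": 6500}
    # TomcatB has been silent for 3500 ms, so it is dropped.
    print(alive_members(heartbeats, now_ms=10000))
    ports = receiver_ports()
    print(ports[0], ports[-1])
```

With frequency="500", a healthy node would normally have sent about six heartbeats within one dropTime window, so a single lost packet does not remove it from the cluster.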

On tomcat1 (192.168.0.2):

[root@node1 ~]# cd /web/webapps/

[root@node1 webapps]# ls

index.jsp

[root@node1 webapps]# mkdir WEB-INF

[root@node1 webapps]# ls

index.jsp WEB-INF

[root@node1 webapps]# cp /usr/local/tomcat/conf/web.xml

[root@node1 webapps]# pwd

/web/webapps

[root@node1 webapps]# cp /usr/local/tomcat/conf/web.xml WEB-INF/ # copy the global web.xml

[root@node1 webapps]#

[root@node1 webapps]# vim /web/webapps/WEB-INF/web.xml

....................................................................................................

<web-app xmlns="http://java.sun.com/xml/ns/javaee"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://java.sun.com/xml/ns/javaee

http://java.sun.com/xml/ns/javaee/web-app_3_0.xsd"

version="3.0">

<distributable/> <!-- adding this line is all that is needed -->

....................................................................................................

[root@node1 webapps]# scp -r WEB-INF/ 192.168.10.3:/web/webapps/ # make sure tomcat2 (192.168.10.3) has this directory and file too

The authenticity of host '192.168.10.3 (192.168.10.3)' can't be established.

RSA key fingerprint is ae:fe:80:46:96:5b:2a:94:5e:8e:0c:ec:86:eb:e1:ee.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.10.3' (RSA) to the list of known hosts.

web.xml 100% 159KB 159.1KB/s 00:00

[root@node1 webapps]# cd /usr/local/tomcat/conf/

[root@node1 conf]# pwd

/usr/local/tomcat/conf

[root@node1 conf]# vim server.xml

...........................................................................................................................................

<Engine name="Catalina" defaultHost="www.magedu.com" jvmRoute="TomcatA" >

<Cluster className="org.apache.catalina.ha.tcp.SimpleTcpCluster"

channelSendOptions="8"><!-- Cluster is placed inside Engine here; it can also go inside a Host -->

<Manager className="org.apache.catalina.ha.session.DeltaManager"

expireSessionsOnShutdown="false"

notifyListenersOnReplication="true"/>

<Channel className="org.apache.catalina.tribes.group.GroupChannel">

<Membership className="org.apache.catalina.tribes.membership.McastService"

address="228.8.8.9" bind="192.168.10.2" port="45564"

frequency="500" dropTime="3000"/><!-- bind is this node's own address -->

<Sender className="org.apache.catalina.tribes.transport.ReplicationTransmitter">

<Transport className="org.apache.catalina.tribes.transport.nio.PooledParallelSender"/>

</Sender>

<Receiver className="org.apache.catalina.tribes.transport.nio.NioReceiver"

address="192.168.10.2" port="4000" autoBind="100"

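selectorTimeout="5000" maxThreads="6"/><!-- address is this node's own address; address="auto" did not work for me, possibly because auto uses whatever address the local hostname resolves to -->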

<Interceptor className="org.apache.catalina.tribes.group.interceptors.TcpFailureDetector"/>

<Interceptor className="org.apache.catalina.tribes.group.interceptors.MessageDispatch15Interceptor"/>

</Channel>

<Valve className="org.apache.catalina.ha.tcp.ReplicationValve"

filter=".*\.gif;.*\.js;.*\.jpg;.*\.htm;.*\.html;.*\.txt;"/>

<Valve className="org.apache.catalina.ha.session.JvmRouteBinderValve"/>

<Deployer className="org.apache.catalina.ha.deploy.FarmWarDeployer"

tempDir="/tmp/war-temp/" deployDir="/tmp/war-deploy/"

watchDir="/tmp/war-listen/" watchEnabled="false"/>

<ClusterListener className="org.apache.catalina.ha.session.JvmRouteSessionIDBinderListener"/>

<ClusterListener className="org.apache.catalina.ha.session.ClusterSessionListener"/>

</Cluster>

...........................................................................................................................................
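One plausible cause of the address="auto" failure noted in the comment above: according to the Tomcat cluster documentation, "auto" resolves to whatever the local hostname maps to, and if /etc/hosts maps the hostname to 127.0.0.1 the Receiver binds to loopback, where other members cannot reach it. The sketch below only classifies an address; the helper names is_loopback and check_receiver_addr are made up for illustration and are not part of Tomcat.

```shell
#!/bin/sh
# Hypothetical helpers (not part of Tomcat): decide whether an address
# would be usable as the cluster Receiver address.

# Succeeds when the given address is a loopback address.
is_loopback() {
    case "$1" in
        127.*|::1) return 0 ;;
        *)         return 1 ;;
    esac
}

# Prints a one-line verdict for the given address.
check_receiver_addr() {
    if is_loopback "$1"; then
        echo "$1: loopback, other members cannot reach this Receiver"
    else
        echo "$1: routable candidate"
    fi
}

# Demo with one bad and one good value.
check_receiver_addr 127.0.0.1
check_receiver_addr 192.168.10.2
```

On a node, the address that the hostname resolves to can be fed in with something like `check_receiver_addr "$(getent hosts "$(hostname)" | awk '{print $1; exit}')"`. If that reports loopback, setting the address explicitly, as done here, is the simple workaround.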

On tomcat2 (192.168.0.3)

[root@rs3 ~]# cd /usr/local/tomcat/conf/

[root@rs3 conf]# pwd

/usr/local/tomcat/conf

[root@rs3 conf]# vim server.xml

....................................................................................................

<Engine name="Catalina" defaultHost="www.magedu.com" jvmRoute="TomcatB" >

<Cluster className="org.apache.catalina.ha.tcp.SimpleTcpCluster"

channelSendOptions="8"><!-- The Cluster is placed inside Engine here; it can also be placed inside Host -->

<Manager className="org.apache.catalina.ha.session.DeltaManager"

expireSessionsOnShutdown="false"

notifyListenersOnReplication="true"/>

<Channel className="org.apache.catalina.tribes.group.GroupChannel">

<Membership className="org.apache.catalina.tribes.membership.McastService"

address="228.8.8.9" bind="192.168.10.3" port="45564"

frequency="500" dropTime="3000"/> <!-- bind is this node's own address -->

<Sender className="org.apache.catalina.tribes.transport.ReplicationTransmitter">

<Transport className="org.apache.catalina.tribes.transport.nio.PooledParallelSender"/>

</Sender>

<Receiver className="org.apache.catalina.tribes.transport.nio.NioReceiver"

address="192.168.10.3" port="4000" autoBind="100"

selectorTimeout="5000" maxThreads="6"/> <!-- address="auto" does not work here; not sure why -->

<Interceptor className="org.apache.catalina.tribes.group.interceptors.TcpFailureDetector"/>

<Interceptor className="org.apache.catalina.tribes.group.interceptors.MessageDispatch15Interceptor"/>

</Channel>

<Valve className="org.apache.catalina.ha.tcp.ReplicationValve"

filter=".*\.gif;.*\.js;.*\.jpg;.*\.htm;.*\.html;.*\.txt;"/>

<Valve className="org.apache.catalina.ha.session.JvmRouteBinderValve"/>

<Deployer className="org.apache.catalina.ha.deploy.FarmWarDeployer"

tempDir="/tmp/war-temp/" deployDir="/tmp/war-deploy/"

watchDir="/tmp/war-listen/" watchEnabled="false"/>

<ClusterListener className="org.apache.catalina.ha.session.JvmRouteSessionIDBinderListener"/>

<ClusterListener className="org.apache.catalina.ha.session.ClusterSessionListener"/>

</Cluster>

...........................................................................................................................................
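A prerequisite that the server.xml excerpts above do not show: DeltaManager replicates sessions only for web applications whose WEB-INF/web.xml is marked distributable. The sketch below checks a web.xml for that element. The helper name is_distributable and the temporary sample file are assumptions for illustration; point it at the real web.xml of the deployed application.

```shell
#!/bin/sh
# Hypothetical helper: succeeds when the given web.xml contains a
# <distributable/> element (also accepts <distributable /> and
# <distributable></distributable>).
is_distributable() {
    grep -Eq '<distributable[[:space:]]*/?>' "$1"
}

# Demo on a throwaway sample file, so nothing on the system is touched.
sample=$(mktemp)
cat > "$sample" <<'EOF'
<web-app>
  <distributable/>
</web-app>
EOF

if is_distributable "$sample"; then
    echo "web.xml is distributable: sessions can be replicated"
else
    echo "web.xml lacks <distributable/>: sessions will not be replicated"
fi
rm -f "$sample"
```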

[root@rs3 conf]# catalina.sh configtest

Using CATALINA_BASE: /usr/local/tomcat

Using CATALINA_HOME: /usr/local/tomcat

Using CATALINA_TMPDIR: /usr/local/tomcat/temp

Using JRE_HOME: /usr/java/latest

Using CLASSPATH: /usr/local/tomcat/bin/bootstrap.jar:/usr/local/tomcat/bin/tomcat-juli.jar

2021-7-5 8:59:18 org.apache.catalina.core.AprLifecycleListener init

信息: The APR based Apache Tomcat Native library which allows optimal performance in production environments was not found on the java.library.path: /usr/java/jdk1.6.0_21/jre/lib/i386/client:/usr/java/jdk1.6.0_21/jre/lib/i386:/usr/java/jdk1.6.0_21/jre/../lib/i386:/usr/java/packages/lib/i386:/lib:/usr/lib

2021-7-5 8:59:19 org.apache.coyote.AbstractProtocol init

信息: Initializing ProtocolHandler ["http-bio-8080"]

2021-7-5 8:59:19 org.apache.coyote.AbstractProtocol init

信息: Initializing ProtocolHandler ["ajp-bio-8009"]

2021-7-5 8:59:19 org.apache.catalina.startup.Catalina load

信息: Initialization processed in 892 ms

[root@rs3 conf]#

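# When the instructor (马哥) ran catalina.sh configtest, it reported an error.

He then copied in a different version of the configuration, and only that version matched the one I had copied from his document.

On tomcat1 (192.168.0.2)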

[root@node1 conf]# catalina.sh start

Using CATALINA_BASE: /usr/local/tomcat

Using CATALINA_HOME: /usr/local/tomcat

Using CATALINA_TMPDIR: /usr/local/tomcat/temp

Using JRE_HOME: /usr/java/latest

Using CLASSPATH: /usr/local/tomcat/bin/bootstrap.jar:/usr/local/tomcat/bin/tomcat-juli.jar

[root@node1 conf]#

[root@node1 conf]# netstat -tnlp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 127.0.0.1:2208 0.0.0.0:* LISTEN 3901/./hpiod

tcp 0 0 0.0.0.0:2049 0.0.0.0:* LISTEN -

tcp 0 0 0.0.0.0:809 0.0.0.0:* LISTEN 4021/rpc.rquotad

tcp 0 0 0.0.0.0:3306 0.0.0.0:* LISTEN 4505/mysqld

tcp 0 0 0.0.0.0:111 0.0.0.0:* LISTEN 3498/portmap

tcp 0 0 0.0.0.0:850 0.0.0.0:* LISTEN 4063/rpc.mountd

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 3924/sshd

tcp 0 0 127.0.0.1:631 0.0.0.0:* LISTEN 3938/cupsd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 4471/sendmail

tcp 0 0 0.0.0.0:762 0.0.0.0:* LISTEN 3548/rpc.statd

tcp 0 0 0.0.0.0:38654 0.0.0.0:* LISTEN -

tcp 0 0 127.0.0.1:2207 0.0.0.0:* LISTEN 3906/python

tcp 0 0 ::ffff:192.168.10.2:4000 :::* LISTEN 5257/java # port 4000 (the cluster Receiver)

tcp 0 0 ::ffff:127.0.0.1:8005 :::* LISTEN 5257/java

tcp 0 0 :::8009 :::* LISTEN 5257/java

tcp 0 0 :::8080 :::* LISTEN 5257/java

tcp 0 0 :::80 :::* LISTEN 4554/httpd

tcp 0 0 :::22 :::* LISTEN 3924/sshd

tcp 0 0 :::443 :::* LISTEN 4554/httpd

[root@node1 conf]#
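The listing above can be checked mechanically instead of by eye. This is a minimal sketch, assuming the `netstat -tnlp` column layout shown here; port_listening is a hypothetical helper name, and the sample lines are copied from the output above.

```shell
#!/bin/sh
# Hypothetical helper: reads `netstat -tnlp` output on stdin and succeeds
# when some socket in state LISTEN has a local address ending in :PORT.
port_listening() {
    awk -v port="$1" '
        $6 == "LISTEN" && $4 ~ (":" port "$") { found = 1 }
        END { exit (found ? 0 : 1) }
    '
}

# Sample lines taken from the netstat output in these notes.
sample='tcp 0 0 ::ffff:192.168.10.2:4000 :::* LISTEN 5257/java
tcp 0 0 ::ffff:127.0.0.1:8005 :::* LISTEN 5257/java
tcp 0 0 :::8009 :::* LISTEN 5257/java
tcp 0 0 :::8080 :::* LISTEN 5257/java'

# 4000 = cluster Receiver, 8009 = AJP connector, 8080 = HTTP connector.
for p in 4000 8009 8080; do
    if printf '%s\n' "$sample" | port_listening "$p"; then
        echo "port $p: listening"
    else
        echo "port $p: NOT listening"
    fi
done
```

On a live node the same function can read the real output: `netstat -tnlp | port_listening 4000`.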

If something goes wrong, check the logs; here that is roughly /usr/local/tomcat/logs/catalina.out.

[root@node1 conf]# tail -50 /usr/local/tomcat/logs/catalina.2021-07-05.log

2021-7-5 9:33:22 org.apache.catalina.tribes.membership.McastServiceImpl waitForMembers # waiting for members

信息: Sleeping for 1000 milliseconds to establish cluster membership, start level:4

2021-7-5 9:33:23 org.apache.catalina.ha.tcp.SimpleTcpCluster memberAdded

信息: Replication member added:org.apache.catalina.tribes.membership.MemberImpl[tcp://{192, 168, 10, 3}:4001,{192, 168, 10, 3},4001, alive=207436, securePort=-1, UDP Port=-1, id={85 45 -8 125 72 -110 68 -33 -106 26 -123 -116 -12 -122 107 -40 }, payload={}, command={}, domain={}, ]

2021-7-5 9:33:23 org.apache.catalina.tribes.membership.McastServiceImpl waitForMembers

信息: Done sleeping, membership established, start level:4

2021-7-5 9:33:23 org.apache.catalina.tribes.membership.McastServiceImpl waitForMembers

信息: Sleeping for 1000 milliseconds to establish cluster membership, start level:8

2021-7-5 9:33:23 org.apache.catalina.tribes.io.BufferPool getBufferPool

信息: Created a buffer pool with max size:104857600 bytes of type:org.apache.catalina.tribes.io.BufferPool15Impl

2021-7-5 9:33:24 org.apache.catalina.tribes.membership.McastServiceImpl waitForMembers

信息: Done sleeping, membership established, start level:8

2021-7-5 9:33:25 org.apache.catalina.ha.session.DeltaManager startInternal

信息: Register manager www.magedu.com# to cluster element Engine with name Catalina

2021-7-5 9:33:25 org.apache.catalina.ha.session.DeltaManager startInternal

信息: Starting clustering manager at www.magedu.com#

2021-7-5 9:33:25 org.apache.catalina.ha.session.DeltaManager getAllClusterSessions

信息: Manager [www.magedu.com#], requesting session state from org.apache.catalina.tribes.membership.MemberImpl[tcp://{192, 168, 10, 3}:4001,{192, 168, 10, 3},4001, alive=209443, securePort=-1, UDP Port=-1, id={85 45 -8 125 72 -110 68 -33 -106 26 -123 -116 -12 -122 107 -40 }, payload={}, command={}, domain={}, ]. This operation will timeout if no session state has been received within 60 seconds.

2021-7-5 9:33:25 org.apache.catalina.ha.session.DeltaManager waitForSendAllSessions

信息: Manager [www.magedu.com#]; session state send at 7/5/21 9:33 AM received in 109 ms.

2021-7-5 9:33:25 org.apache.catalina.startup.HostConfig deployDirectory

信息: Deploying web application directory /web/htdocs

2021-7-5 9:33:25 org.apache.catalina.startup.HostConfig deployDirectory

信息: Deploying web application directory /web/webapps

2021-7-5 9:33:25 org.apache.catalina.ha.session.DeltaManager startInternal

信息: Register manager www.magedu.com#/webapps to cluster element Engine with name Catalina

2021-7-5 9:33:25 org.apache.catalina.ha.session.DeltaManager startInternal

信息: Starting clustering manager at www.magedu.com#/webapps

2021-7-5 9:33:25 org.apache.catalina.ha.session.DeltaManager getAllClusterSessions

信息: Manager [www.magedu.com#/webapps], requesting session state from org.apache.catalina.tribes.membership.MemberImpl[tcp://{192, 168, 10, 3}:4001,{192, 168, 10, 3},4001, alive=209443, securePort=-1, UDP Port=-1, id={85 45 -8 125 72 -110 68 -33 -106 26 -123 -116 -12 -122 107 -40 }, payload={}, command={}, domain={}, ]. This operation will timeout if no session state has been received within 60 seconds.

2021-7-5 9:33:25 org.apache.catalina.ha.session.DeltaManager waitForSendAllSessions

信息: Manager [www.magedu.com#/webapps]; session state send at 7/5/21 9:33 AM received in 102 ms.

2021-7-5 9:33:25 org.apache.catalina.startup.HostConfig deployDirectory

信息: Deploying web application directory /usr/local/apache-tomcat-7.0.40/webapps/examples

2021-7-5 9:33:25 org.apache.catalina.startup.HostConfig deployDirectory

信息: Deploying web application directory /usr/local/apache-tomcat-7.0.40/webapps/manager

2021-7-5 9:33:25 org.apache.catalina.startup.HostConfig deployDirectory

信息: Deploying web application directory /usr/local/apache-tomcat-7.0.40/webapps/ROOT

2021-7-5 9:33:25 org.apache.catalina.startup.HostConfig deployDirectory

信息: Deploying web application directory /usr/local/apache-tomcat-7.0.40/webapps/docs

2021-7-5 9:33:25 org.apache.catalina.startup.HostConfig deployDirectory

信息: Deploying web application directory /usr/local/apache-tomcat-7.0.40/webapps/host-manager

2021-7-5 9:33:25 org.apache.catalina.ha.session.JvmRouteBinderValve startInternal

信息: JvmRouteBinderValve started

2021-7-5 9:33:25 org.apache.coyote.AbstractProtocol start

信息: Starting ProtocolHandler ["http-bio-8080"]

2021-7-5 9:33:25 org.apache.coyote.AbstractProtocol start

信息: Starting ProtocolHandler ["ajp-bio-8009"]

2021-7-5 9:33:25 org.apache.catalina.startup.Catalina start

信息: Server startup in 3053 ms

[root@node1 conf]#

On tomcat2 (192.168.0.3)

[root@rs3 conf]# catalina.sh start

Check /usr/local/tomcat/logs/catalina.2021-07-05.log on this node as well.

On tomcat1 (192.168.0.2)

[root@node1 conf]# tail -100 /usr/local/tomcat/logs/catalina.2021-07-05.log | grep 228 # shows the multicast socket

信息: Attempting to bind the multicast socket to /228.50.10.1:45564

[root@node1 conf]#

[root@node1 conf]# tail -50 /usr/local/tomcat/logs/catalina.2021-07-05.log | grep -i add # shows that the member was added

2021-7-5 9:33:23 org.apache.catalina.ha.tcp.SimpleTcpCluster memberAdded

信息: Replication member added:org.apache.catalina.tribes.membership.MemberImpl[tcp://{192, 168, 10, 3}:4001,{192, 168, 10, 3},4001, alive=207436, securePort=-1, UDP Port=-1, id={85 45 -8 125 72 -110 68 -33 -106 26 -123 -116 -12 -122 107 -40 }, payload={}, command={}, domain={}, ]

[root@node1 conf]#
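The "Replication member added" lines print each peer as a byte list such as `tcp://{192, 168, 10, 3}:4001`, which is awkward to read. A small sketch that turns those entries into plain ip:port pairs follows; cluster_members is a hypothetical helper name, and the sample line is a shortened copy of the log line above.

```shell
#!/bin/sh
# Hypothetical helper: reads Tomcat log text on stdin and prints each
# distinct cluster member found in it as ip:port.
cluster_members() {
    grep -o 'tcp://{[0-9, ]*}:[0-9]*' |
        sed -e 's#^tcp://{##' -e 's#, #.#g' -e 's#}##' |
        sort -u
}

# Shortened copy of the memberAdded line from the log above.
line='Replication member added:org.apache.catalina.tribes.membership.MemberImpl[tcp://{192, 168, 10, 3}:4001,{192, 168, 10, 3},4001, alive=207436, securePort=-1]'

printf '%s\n' "$line" | cluster_members   # prints 192.168.10.3:4001
```

Against the real log this would be `cluster_members < /usr/local/tomcat/logs/catalina.2021-07-05.log`. An empty result suggests that no peer has joined, in which case the multicast address, port, and any firewall rules are the first things to check.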

On the front-end apache (192.168.0.60; second NIC 192.168.10.10)

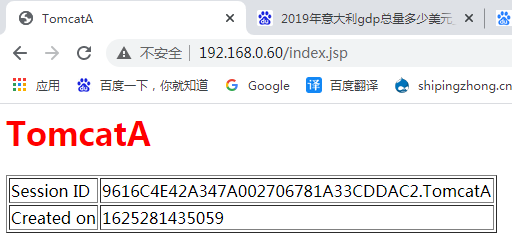

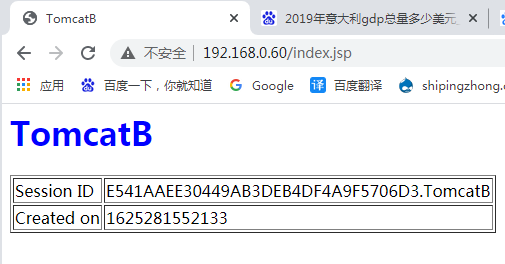

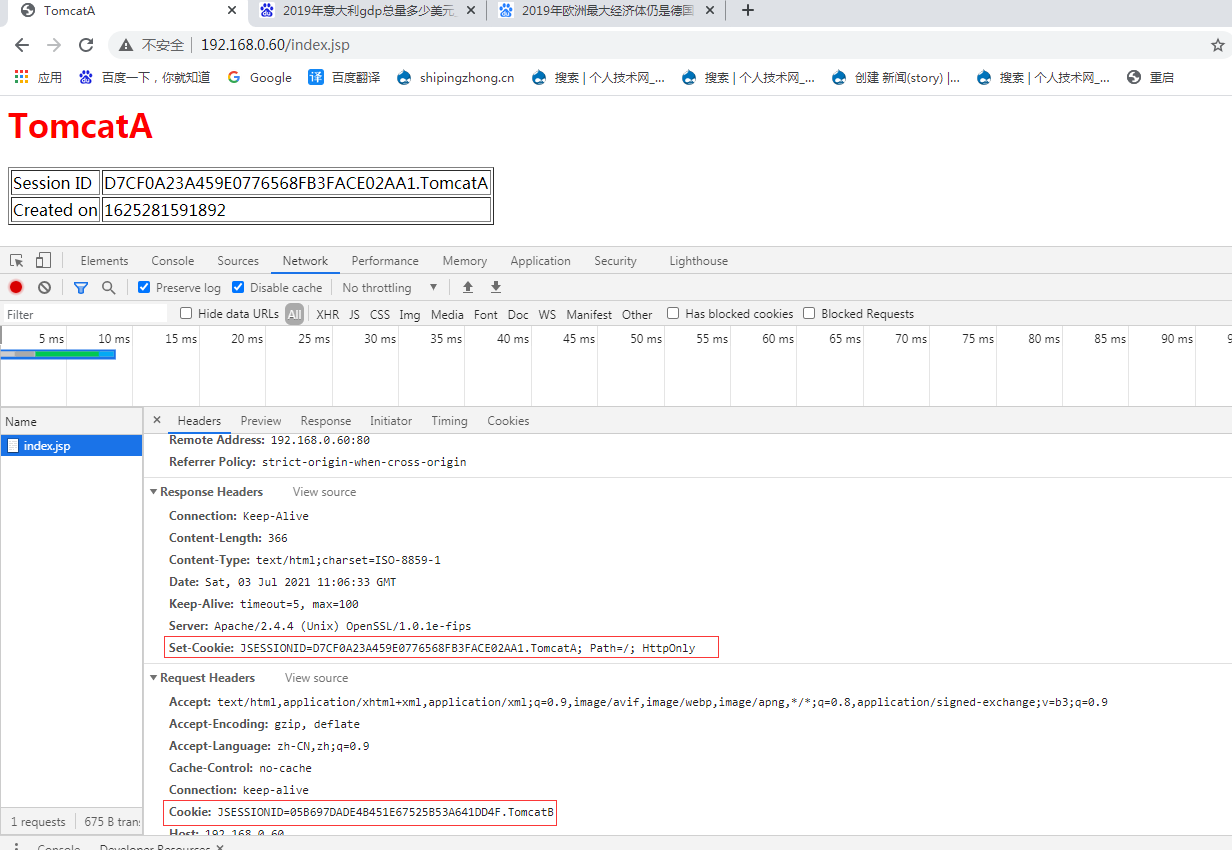

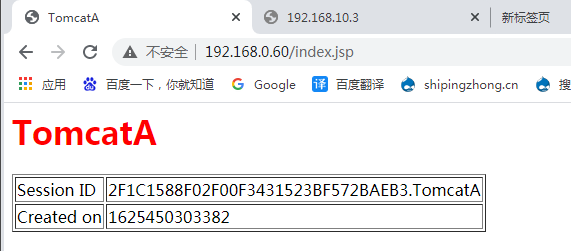

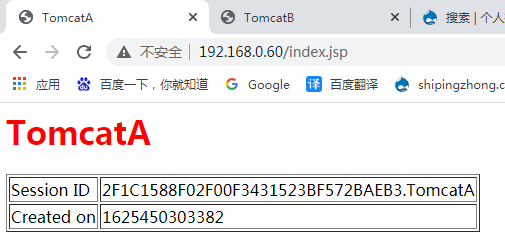

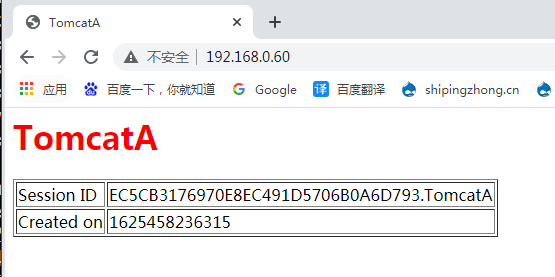

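http://192.168.0.60/ The session no longer changes across refreshes, so the session is now shared between the two nodes.

A backup cluster (BackupManager) sounds nicer than a delta cluster (DeltaManager), but it is not commonly used. Tomcat clusters are usually not very large, so the delta cluster is the common choice. Another possible approach (I am not sure about this one) is to bind sessions instead of sharing them: always direct a user's requests to the same host and have that host persist its sessions, so that if it goes down, restarting the Tomcat instance is enough.

As the figure below shows, sessions can also be kept on shared storage.

On the front-end apache (192.168.0.60; second NIC 192.168.10.10)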

http://192.168.0.60/balancer-manager

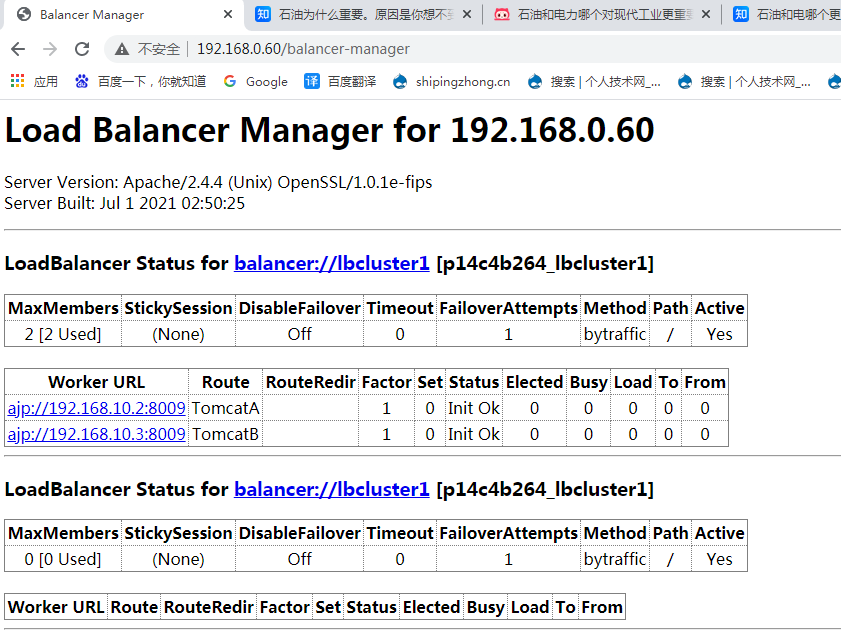

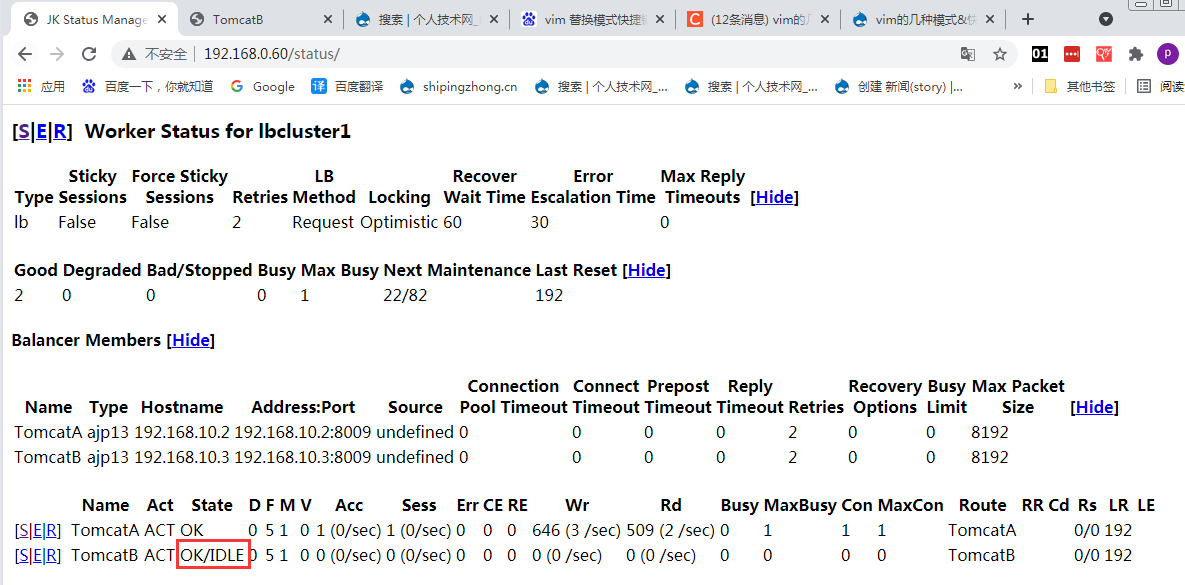

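Stop Tomcat on tomcat2 (192.168.0.3): # catalina.sh stop

http://192.168.0.60/balancer-manager # As the screenshot shows, the page is slow to react: why does tomcat2 still show Init Ok? The failure is not displayed. A likely explanation is that mod_proxy_balancer does not actively probe its members in this setup, so a member's status changes only after a request forwarded to it has failed.

Start Tomcat on tomcat2 (192.168.0.3) again: # catalina.sh start

On the front-end apache (192.168.0.60; second NIC 192.168.10.10)

Switch the load-balancing method from mod_proxy to mod_jk.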

[root@localhost extra]# vim /etc/httpd/httpd.conf

....................................................................................................

#Include /etc/httpd/extra/httpd-proxy.conf

Include /etc/httpd/extra/httpd-jk.conf

....................................................................................................

[root@localhost extra]# service httpd restart

停止 httpd: [确定]

正在启动 httpd: [确定]

[root@localhost extra]#

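http://192.168.0.60/index.jsp # Sessions are bound (sticky): refreshing never changes the result, and every request goes to TomcatA.

Since sessions are now shared, binding is no longer needed.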

[root@localhost extra]# vim workers.properties

worker.list = lbcluster1,stat1

worker.TomcatA.type = ajp13

worker.TomcatA.host = 192.168.10.2

worker.TomcatA.port = 8009

worker.TomcatA.lbfactor = 5

worker.TomcatB.type = ajp13

worker.TomcatB.host = 192.168.10.3

worker.TomcatB.port = 8009

worker.TomcatB.lbfactor = 5

worker.lbcluster1.type = lb

worker.lbcluster1.sticky_session = 0 # changed to 0

worker.lbcluster1.balance_workers = TomcatA, TomcatB

worker.stat1.type = status
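In this file lbfactor is a relative weight, so two workers with lbfactor 5 should each receive about half of the requests. A short sketch that computes the expected share per worker from workers.properties text follows; lb_shares is a hypothetical helper name, and the sample input repeats the two lbfactor lines above.

```shell
#!/bin/sh
# Hypothetical helper: reads workers.properties text on stdin and prints
# each worker's expected share of requests, derived from its lbfactor.
lb_shares() {
    awk -F'[ =]+' '
        /^worker\.[^.]+\.lbfactor/ {
            split($1, parts, ".")       # worker.NAME.lbfactor -> NAME
            name[++n] = parts[2]
            weight[n] = $2
            total += $2
        }
        END {
            if (total > 0)
                for (i = 1; i <= n; i++)
                    printf "%s %.0f%%\n", name[i], 100 * weight[i] / total
        }
    '
}

# The two lbfactor lines from the file above.
printf '%s\n' \
    'worker.TomcatA.lbfactor = 5' \
    'worker.TomcatB.lbfactor = 5' | lb_shares
```

For the values used here this prints `TomcatA 50%` and `TomcatB 50%`. Changing one factor to 10 would shift the split to roughly one third and two thirds.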

[root@localhost extra]# service httpd restart

停止 httpd: [确定]

正在启动 httpd: [确定]

[root@localhost extra]#

http://192.168.0.60/index.jsp The session stays the same across refreshes.
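When jvmRoute is set, Tomcat appends the route to the session ID (for example `<id>.TomcatA`), so two IDs copied from the browser are easier to compare once the suffix is split off. With sticky sessions off, the base part should stay the same even if the route suffix differs between requests. The helper names and the sample IDs below are made up for illustration.

```shell
#!/bin/sh
# Hypothetical helpers for comparing JSESSIONID values by hand.

# Prints the part of the session ID before the first dot.
session_base() {
    echo "${1%%.*}"
}

# Prints the jvmRoute suffix after the last dot, or nothing if there is none.
session_route() {
    case "$1" in
        *.*) echo "${1##*.}" ;;
        *)   echo "" ;;
    esac
}

# Made-up sample IDs, as two consecutive refreshes might show them.
first='0A1B2C3D4E5F.TomcatA'
second='0A1B2C3D4E5F.TomcatB'

if [ "$(session_base "$first")" = "$(session_base "$second")" ]; then
    echo "same session, served by $(session_route "$first") then $(session_route "$second")"
else
    echo "different sessions: replication is not working"
fi
```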

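Stop Tomcat on tomcat2 (192.168.0.3): # catalina.sh stop

On the front-end apache (192.168.0.60; second NIC 192.168.10.10)

http://192.168.0.60/status/ TomcatB is now shown as not working, but the status page is slow to show it; it takes quite a while. mod_jk appears to mark a worker as failed only after requests sent to it have failed, which would explain the delay.

If we deploy an SNS application (?) on the two back-end Tomcats, users can log in through either node without problems: however they refresh and however the requests are balanced, their session information is still there.

Use nginx to load-balance the Tomcat nodes.

On the front-end apache (192.168.0.60; second NIC 192.168.10.10)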

[root@node1 conf]# service httpd stop

停止 httpd: [确定]

[root@node1 conf]#

[root@localhost nginx-1.4.7]# cp /etc/nginx/nginx.conf /etc/nginx/nginx.conf.bak

[root@localhost nginx-1.4.7]#

[root@localhost nginx-1.4.7]# vim /etc/nginx/nginx.conf

....................................................................................................

#gzip on;

upstream tomcatsrvs { # add roughly this block

server 192.168.10.2:8080;

server 192.168.10.3:8080;

}

server {

listen 80;

server_name localhost;

#charset koi8-r;

#access_log logs/host.access.log main;

#location / {

# root html;

# index index.html index.htm;

# }

location / { # add roughly this block

proxy_pass http://tomcatsrvs;

}

....................................................................................................

[root@localhost nginx-1.4.7]# service nginx configtest

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

[root@localhost nginx-1.4.7]# service nginx restart

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

停止 nginx: [确定]

正在启动 nginx: [确定]

[root@localhost nginx-1.4.7]#

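For Tomcat, nginx can reverse-proxy only over HTTP: it has no built-in AJP support, which is why the upstream servers point at the HTTP connector on port 8080 rather than the AJP connector on 8009.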