Have you ever opened Chrome's DevTools and wondered what everything meant?

I've had questions like:

- What should I be looking at?

- What should I try to optimize, and how?

Since I wanted answers, I set out on a mission to filter out the most important information. Then, I dug deeper to understand what needed to be fixed, and how to fix it.

This article is a result of this mission. Let's take a look at what Waiting (TTFB) represents, and let's answer three important questions:

- Should it be optimized?

- When should it be optimized?

- How can one optimize it?

Does the TTFB (Time to First Byte) really matter?

Yes, it does.

So then the question becomes, should you focus your efforts on optimizing it for a faster website? It depends.

What does it depend on? How slow your TTFB is.

There's a point when any further optimization won't give you as much of a return. Also, as Ilya says in his post, the timing isn't the only thing that matters: what's also important is what's in those first bytes.

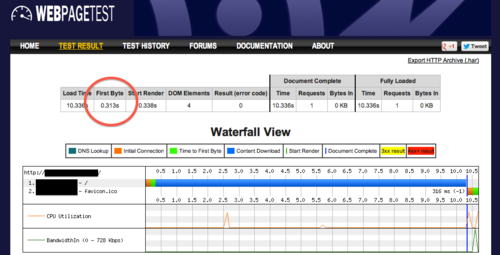

In this example, the TTFB is pretty good. 160ms is a decent number, although it could be a bit better. (I'll explain how to make it better in a moment.)

However, I go on sites with 1+ second TTFB for relatively simple pages and that, my friends, can be optimized.

Check your timing and see, but remember that just because you don't have a long TTFB doesn't mean your visitors don't.

How come? That's what we're about to find out. First, let's explain what the TTFB actually represents, and that will explain why the number changes depending on a number of factors.

What is TTFB?

Time to First Byte is how long the browser has to wait before receiving data. In other words, it is the time spent waiting for the initial response. That's why it's pretty important: in order for your page to be rendered, the browser needs to receive the necessary HTML data. The longer it takes to get that data, the longer it takes to display your page.

The thing is, just because your TTFB is 130ms doesn't mean that someone in Canada or Brazil will have the same number. In fact, they won't.

Here's why.

The Waiting (TTFB) represents a number of things, like:

- Server response time

- Time to transfer bytes (a.k.a. the latency of the round trip)

Server response time: This is how long it takes for your server to generate a response and send it back. The good news, if this is the bottleneck, is that you have more control over it. Well, assuming you have access to the server.

The bad news is that you have some work to do. You have to figure out which part of your system is taking time to respond, and that could be a number of things.

By the way, according to Google's PageSpeed Insights, your server response time should be below 200ms.

If your response time is higher than that (we'll talk about figuring out your timing in the next section), there are a number of possible reasons, like:

- Slow database queries

- Slow logic

- Resource starvation

- Too many slow frameworks/libraries/dependencies

- Slow hardware
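To find out which of these is eating your time, you can wrap each stage of a request in a timer. Here's a minimal sketch of that idea. `timeStage` and `slowestStage` are hypothetical helpers I made up for illustration, not part of any framework, and this version only handles synchronous work (async stages would need to be awaited before recording the end time).

```typescript
// Hypothetical helper: records how long each named stage of a request takes.
type StageTiming = { stage: string; ms: number };

function timeStage<T>(
  stage: string,
  work: () => T,
  timings: StageTiming[],
  now: () => number = Date.now // injectable clock, handy for tests
): T {
  const start = now();
  try {
    return work();
  } finally {
    // Record the duration even if the stage throws.
    timings.push({ stage, ms: now() - start });
  }
}

// Returns the slowest recorded stage, or undefined if nothing was recorded.
function slowestStage(timings: StageTiming[]): StageTiming | undefined {
  return timings.reduce<StageTiming | undefined>(
    (worst, t) => (worst === undefined || t.ms > worst.ms ? t : worst),
    undefined
  );
}
```

You would wrap things like the database query and the template rendering, then log `slowestStage(timings)` at the end of the request to see where to dig first.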

Time to transfer bytes: The Internet can be pretty darn fast depending on where you are and what kind of connection you have access to, but even then we are limited by the distance packets have to travel. According to Ilya Grigorik, we're already quite close to the speed of light so we shouldn't expect more speed there unless we bend physics. The way to speed things up, then, is to shorten distances.

That's where CDNs come in, and that's how you can improve this part.
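To put rough numbers on the distance argument, here's a back-of-the-envelope sketch. It assumes light travels through optical fiber at roughly 200,000 km/s (about two thirds of its speed in a vacuum), and the two distances are made up for illustration. Real round trips are slower because of routing detours, queuing, and handshakes, so treat the result as a floor, not a prediction.

```typescript
// Approximate speed of light in optical fiber: ~200,000 km/s, i.e. 200 km per ms.
const FIBER_KM_PER_MS = 200;

// Lower bound on round-trip time for a given one-way distance, ignoring
// routing detours, queuing, and server processing.
function minRoundTripMs(distanceKm: number): number {
  return (2 * distanceKm) / FIBER_KM_PER_MS;
}

console.log(minRoundTripMs(8000)); // far-away origin: 80
console.log(minRoundTripMs(100)); // nearby CDN node: 1
```

No amount of server tuning gets those 80ms back, but a closer node does.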

If your server responds very quickly, and the bytes have to travel a very short distance, your TTFB should be low. That doesn't mean a user won't run into a slow TTFB if they have a crappy connection or live far away from any of your CDN nodes. That's one reason why the CDN provider that you choose is important.

Is it my server, or is it the network?

This is an important question to answer, because it will make all the difference in how you go about optimizing your TTFB.

If your server is fast enough but the network is causing delays, optimizing your server is a waste of time and won't give you the results you're looking for.

So how can you tell?

Measure, measure, measure.

Thankfully, there are tools out there to help with this.

Measuring network time

Option #1

One tool available is called the Navigation Timing API.

For example, subtracting fetchStart from responseStart will give you the round trip time for the first byte.

See how to implement the API in the introduction.
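Here's a minimal sketch of that calculation. `NavTimingLike` and `timeToFirstByte` are names I made up; the only real API calls are `performance.getEntriesByType("navigation")` and the `fetchStart` and `responseStart` fields on the entry it returns. Outside of a browser there is no navigation entry, so the snippet simply logs nothing.

```typescript
// Only the fields this sketch needs from a navigation timing entry.
interface NavTimingLike {
  fetchStart: number;
  responseStart: number;
}

// Milliseconds from the start of the fetch until the first byte arrived.
function timeToFirstByte(t: NavTimingLike): number {
  return t.responseStart - t.fetchStart;
}

// In a browser, read the real entry; elsewhere (e.g. Node) nothing is logged.
const perf = (globalThis as any).performance;
if (perf && typeof perf.getEntriesByType === "function") {
  const [nav] = perf.getEntriesByType("navigation");
  if (nav) {
    console.log("TTFB (ms):", timeToFirstByte(nav));
  }
}
```

Keep in mind that the span from fetchStart to responseStart also covers DNS lookup and connection setup when they happen, so it is closer to "everything before the first byte" than a pure round trip.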

Option #2

You may not know this, but Google Analytics actually includes a section that lists some of this information for you.

Go to Behavior -> Site Speed -> Overview, and you should be greeted with this:

Be careful of averages, though. They hide outliers that probably shouldn't be hidden.
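To see how much an average can hide, here's a small sketch that compares the mean to percentiles. The sample TTFB values are made up for illustration, and `percentile` uses the simple nearest-rank method (other tools may interpolate and give slightly different numbers).

```typescript
// Arithmetic mean of the samples.
function mean(samples: number[]): number {
  return samples.reduce((sum, x) => sum + x, 0) / samples.length;
}

// Nearest-rank percentile: the smallest sample that has at least p% of
// all samples at or below it.
function percentile(samples: number[], p: number): number {
  if (samples.length === 0) throw new Error("no samples");
  const sorted = samples.slice().sort((a, b) => a - b);
  const rank = Math.max(1, Math.ceil((p / 100) * sorted.length));
  return sorted[rank - 1];
}

// Hypothetical TTFB samples in ms: nine quick visits and one very slow one.
const ttfbSamples = [120, 130, 125, 140, 135, 128, 132, 138, 126, 2400];

console.log(mean(ttfbSamples)); // 357.4
console.log(percentile(ttfbSamples, 50)); // 130
console.log(percentile(ttfbSamples, 95)); // 2400
```

The mean of 357.4ms describes nobody: most visitors got around 130ms, and one got 2.4 seconds. Looking at the median together with a high percentile tells both stories.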

Measuring server response time

While the Navigation Timing API has a requestStart attribute, which returns "the time immediately before the user agent starts requesting the current document from the server", it does not have a requestEnd, for a few reasons. That's too bad, though, because it means we will be getting a number that includes the trip back to the user's browser (since we'd have to use responseStart).
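One rough workaround is to estimate the network part and subtract it. The sketch below is a heuristic of mine, not something the API offers: it assumes the TCP handshake took about one round trip and uses its duration as a stand-in for network latency. `RequestTimingLike`, `waitingMs`, and `estimatedServerMs` are made-up names; the field names match the real timing attributes.

```typescript
// The timing fields this sketch relies on.
interface RequestTimingLike {
  connectStart: number;
  secureConnectionStart: number; // 0 when the connection is not secure
  connectEnd: number;
  requestStart: number;
  responseStart: number;
}

// Request sent until first byte received: server time plus one round trip.
function waitingMs(t: RequestTimingLike): number {
  return t.responseStart - t.requestStart;
}

// Heuristic (an assumption, not part of the API): a TCP handshake costs about
// one round trip, so its duration can stand in for network latency.
// Returns null when the connection was reused and no handshake was recorded.
function estimatedServerMs(t: RequestTimingLike): number | null {
  const handshakeEnd =
    t.secureConnectionStart > 0 ? t.secureConnectionStart : t.connectEnd;
  const roundTrip = handshakeEnd - t.connectStart;
  if (roundTrip <= 0) return null;
  return Math.max(0, waitingMs(t) - roundTrip);
}
```

This is only a ballpark figure. Latency fluctuates between the handshake and the request, so I wouldn't trust it for anything finer than "is it mostly the server or mostly the network?"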

Hmm. Are there any other options?

There are definitely ways of profiling your data stores, web server response times, and other parts of your stack that data travels through in order to form a response. That's where monitoring tools come in.

But what about timing the overall response time instead of individual pieces? Honestly, this one is tricky to find info on. Looks like some of the services mentioned in the previously linked post offer this kind of visibility.

If you know of an accurate way to do this, I'd love to hear about it.

How can I measure my TTFB on different networks?

Like I said earlier, just because your Waiting (TTFB) time is low doesn't mean all of your users will also have a low TTFB time.

How can you get a more accurate representation? By throttling your own network and seeing how long it takes. That's when the true colors of your optimization efforts come out.

Yes, it may be super fast when everything is cached and the origin is close to you, but what about when your user is halfway across the world?

Alright, so how do you throttle your connection? Thankfully, DevTools makes this quite easy.

Click on "No Throttling" and select the option you want.

I chose GPRS, which is awfully slow, and still got a pretty good TTFB of ~600ms. Not too bad considering how bad that network is.

Of course, it would be better if you actually had real people testing this on different kinds of networks, devices, and in different locations.

Conclusion

While DevTools can be confusing at first sight (because web performance is complicated), I hope this article gave you a better understanding of the Waiting (TTFB) results.

Another important thought to retain from reading this is that even if your website loads very quickly on your own devices and networks, it doesn't mean that the same holds for all networks and devices. Measure as much as you can. That's the only way you can paint a more accurate picture of what's going on and whether you are prematurely optimizing or not.

Happy learning!

Has this helped you get a better understanding of Waiting (TTFB)?